STA 4273 Winter 2021: Minimizing Expectations

Overview and Motivation

The COVID-19 pandemic has subsided, and you are going out to dinner for the first time in a year. You are trying to decide between two restaurants: your old favourite and a new popular one. Do you go with your trusted favourite or take a risk on the new restaurant? This is an example of decision-making under uncertainty. It is a problem that has been studied for a long time under various guises in many fields, including statistics, economics, operations research, and computer science.

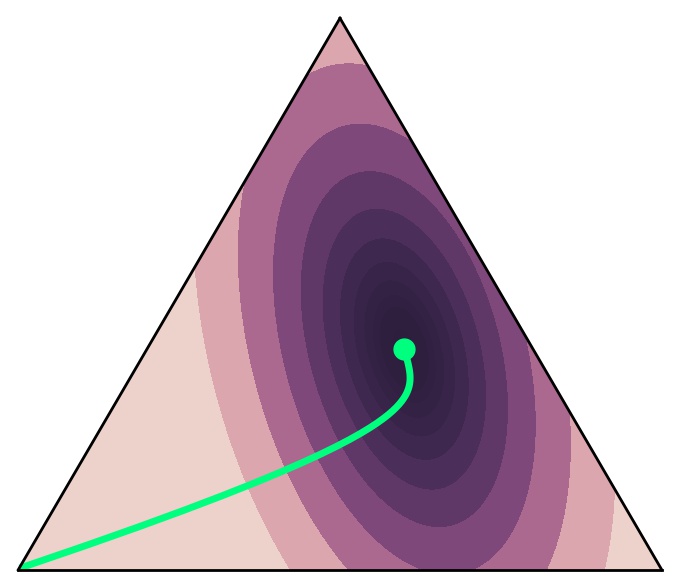

Decision-making under uncertainty is typically formalized as the problem of minimizing an expected cost (or maximizing an expected reward). The decision-maker takes an action by sampling from a distribution over actions and receives a cost for that action. The problem is to find the action distribution that minimizes the decision-maker's expected cost.
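To make the objective concrete: with a finite set of actions and a distribution p_θ over them, the decision-maker's problem is to minimize J(θ) = E_{a ~ p_θ}[c(a)] = Σ_a p_θ(a) c(a) over θ. The minimal sketch below applies this to the restaurant example under assumed numbers that are not part of the course: the favourite always costs 1.0, and the new restaurant costs 0.0 with probability 0.6 and 2.0 with probability 0.4 (mean 0.8). The action distribution is assumed to be a softmax over two logits, and exact gradient descent on J moves nearly all of the probability onto the action with the lower expected cost.

```python
import numpy as np

# Assumed numbers for the restaurant example (illustrative only).
# Action 0: the trusted favourite, which always costs 1.0.
# Action 1: the new restaurant, cost 0.0 w.p. 0.6 and 2.0 w.p. 0.4 (mean 0.8).
expected_cost = np.array([1.0, 0.6 * 0.0 + 0.4 * 2.0])

def action_probs(theta):
    """Softmax distribution over the two actions with logits theta."""
    z = np.exp(theta - theta.max())
    return z / z.sum()

def objective(theta):
    """J(theta) = E_{a ~ p_theta}[c(a)] = sum_a p_theta(a) c(a)."""
    return action_probs(theta) @ expected_cost

def grad_objective(theta):
    """Exact gradient of J for a softmax distribution: p_theta(a) (c(a) - J)."""
    p = action_probs(theta)
    return p * (expected_cost - p @ expected_cost)

theta = np.zeros(2)                 # start indifferent between the restaurants
for _ in range(500):
    theta -= 1.0 * grad_objective(theta)

print(action_probs(theta))          # nearly all mass on action 1
print(objective(theta))             # close to the best expected cost, 0.8
```

In the limit the minimizing distribution is a point mass on the best action. The practical difficulty is that c is usually unknown and can only be sampled, so the exact gradient above has to be replaced by an estimate.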

This problem may seem rather specific, but it appears throughout machine learning and statistics. The most prominent example is Bayesian inference, which can be cast in this paradigm as a variational optimization problem. More broadly, progress on optimizing expected values would improve generative models of real-world data, neural network models with calibrated uncertainties, reinforcement learning algorithms, and many other application areas.

This seminar course introduces students to the various methodological issues at stake in the problem of optimizing expected values and leads them in a discussion of its recent developments in machine learning. The course emphasizes the interplay between reinforcement learning and Bayesian inference. While most of the readings are applied or methodological, there are topics for more theoretically-minded students. Students will be expected to present a paper, prepare code notebooks, and complete a final project on a topic of their choice.

This course's structure is heavily inspired by Prof. Duvenaud's course Learning to Search.

Course Information

Teaching Staff

Instructor: Chris Maddison

Instructor Office Hours: 4:00PM–5:00PM on Thursdays via GatherTown

TAs: Cait Harrigan, Farnam Mansouri

Email Instructor and TA: sta2473-min-expectations@cs.toronto.edu

Where and When

Thursdays 1:00PM–3:00PM via Zoom

Online Delivery

Class will be held synchronously online every week via Zoom. The lectures will be recorded for asynchronous viewing by enrolled students. All students are encouraged to attend class each week. Information on attending class, attending office hours, viewing recorded lectures, and using Piazza is available on Quercus.

Course videos and materials belong to your instructor, the University, and/or another source depending on the specific facts of each situation, and are protected by copyright. In this course you are permitted to download session videos and materials for your own academic use, but you should not copy, share, or use them for any other purpose without the explicit permission of the instructor. For questions about recording and use of videos in which you appear please contact your instructor.

Assignments and Grading

Assignments for the course include a paper presentation and a final project. The marking scheme is as follows:

Course Syllabus and Policies

The course information and policies can be found in the syllabus.

Schedule

This is a preliminary schedule, and it may change throughout the term. With the exception of the first two weeks, each week students will be presenting a recent paper from the literature. Every student will present a paper once during the course.

The weeks are organized into themes, each associated with a list of recent references. No one is expected to read every paper on the list for each week, but there will be some recommended readings for the whole class.

Week 1 (14/1): A common problem

Expected values are routinely optimized in statistics and machine learning. We review the basic terminology in this area and discuss some major applications, including generative models, (approximate) Bayesian inference, reinforcement learning, and control.

Readings

Lecture

Week 2 (21/1): Basic tools

Iterative methods are essential tools for optimization. We will introduce the basics of iterative methods, including stochastic gradient descent (SGD), value estimation, and policy iteration. The question that we will pose throughout the course is: what structure in the problem is being exploited by the method?

SGD readings

- If you do not have time, read Chap. 2 & 3 of (Hazan, 2019) for a terse introduction (it is a working draft).
- If you have more time, read Chap. 3 & 4 of (Bottou et al., 2018), which are more thorough than what we will discuss in class.

Dynamic programming readings

- Sect. 1.1-1.4 of (Agarwal et al., 2020). Again, this is a working draft, so it is terse. Still, it is an efficient introduction. I will describe the context in class.

Lecture
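As a rough illustration of one of the basic tools, here is a minimal sketch of policy iteration for a small finite MDP with known dynamics. The array layout (P[a, s, t] for transition probabilities, R[s, a] for expected rewards) and the two-state example are assumptions made for this sketch, not course material. Policy evaluation is done exactly by solving a linear system, which exploits the structure that the value of a fixed policy satisfies the linear Bellman equation V = R_π + γ P_π V.

```python
import numpy as np

def policy_iteration(P, R, gamma):
    """Policy iteration for a finite MDP with known dynamics.

    P[a, s, t] is the probability of moving from state s to state t under action a,
    R[s, a] is the expected immediate reward, and gamma is the discount factor.
    """
    n_states = P.shape[1]
    states = np.arange(n_states)
    policy = np.zeros(n_states, dtype=int)        # start with action 0 everywhere
    while True:
        # Policy evaluation: solve the linear Bellman equation V = R_pi + gamma P_pi V.
        P_pi = P[policy, states]                  # row s is P[policy[s], s, :]
        R_pi = R[states, policy]
        V = np.linalg.solve(np.eye(n_states) - gamma * P_pi, R_pi)
        # Policy improvement: act greedily with respect to Q(s, a).
        Q = R + gamma * np.einsum("ast,t->sa", P, V)
        new_policy = Q.argmax(axis=1)
        if np.array_equal(new_policy, policy):
            return policy, V
        policy = new_policy

# Hypothetical two-state MDP: action 0 = stay, action 1 = switch state.
# Staying in state 1 earns reward 1; everything else earns 0.
P = np.array([[[1.0, 0.0], [0.0, 1.0]],      # action 0: stay
              [[0.0, 1.0], [1.0, 0.0]]])     # action 1: switch
R = np.array([[0.0, 0.0],
              [1.0, 0.0]])
policy, V = policy_iteration(P, R, gamma=0.9)
print(policy, V)   # policy [1, 0]; V approximately [9, 10]
```

Solving the linear system is only practical for small state spaces; value estimation methods replace it with iterative or sample-based approximations.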

Week 3 (28/1): Gradient estimation I

Gradient information is very useful for optimization, and computing gradients is a key subroutine of many optimization methods. We will review basic gradient estimation techniques, including policy gradients and reparameterization gradients. Student presentations will focus on recent extensions of these methods.

Readings

Student presentations on:

Lecture
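The two basic estimators named above can be compared on a toy problem where the answer is known. The sketch below assumes f(x) = x² and x ~ N(μ, σ²), so that d/dμ E[f(x)] = 2μ. The score-function (policy gradient) estimator averages f(x)(x − μ)/σ², and the reparameterization estimator writes x = μ + σε with ε ~ N(0, 1) and averages f'(x). Both are unbiased here; on this particular example the reparameterization estimator also has much lower variance, which is common but not guaranteed in general.

```python
import numpy as np

rng = np.random.default_rng(0)
mu, sigma, n = 1.5, 1.0, 200_000   # assumed toy parameters

def f(x):
    return x ** 2

def df(x):
    return 2 * x

eps = rng.standard_normal(n)
x = mu + sigma * eps                      # samples x ~ N(mu, sigma^2)

# Score-function estimator: f(x) * d/dmu log N(x; mu, sigma^2).
score_terms = f(x) * (x - mu) / sigma ** 2
# Reparameterization estimator: d/dmu f(mu + sigma * eps) = f'(x).
reparam_terms = df(x)

print("exact:", 2 * mu)
print("score function:", score_terms.mean(), "variance:", score_terms.var())
print("reparameterization:", reparam_terms.mean(), "variance:", reparam_terms.var())
```

The score-function estimator only needs log-density gradients, so it also applies to discrete actions; the reparameterization estimator needs a differentiable f and a differentiable sampling path.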

Week 4 (4/2): Gradient estimation II

Gradient estimation is sometimes desirable in more exotic settings. Student presentations will focus on gradient estimation for off-policy settings, for higher-order derivatives, or for implicit distributions.

Readings

Student presentations on:

Lecture
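In the off-policy setting the samples come from a behaviour distribution q rather than from p_θ. One standard approach, sketched below under assumed numbers, reweights the score-function estimator by the importance ratio p_θ(a)/q(a), giving an unbiased estimate of ∇_θ E_{a ~ p_θ}[c(a)]. The softmax policy, the three hypothetical action costs, and the uniform behaviour policy are illustrative assumptions; because the action set is finite, the exact gradient p_θ(a)(c(a) − J(θ)) is available for comparison.

```python
import numpy as np

rng = np.random.default_rng(0)

costs = np.array([1.0, 2.0, 0.5])       # hypothetical per-action costs c(a)
theta = np.array([0.2, -0.1, 0.3])      # logits of the target policy p_theta
q = np.full(3, 1.0 / 3.0)               # behaviour policy that generated the data

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

p = softmax(theta)
exact = p * (costs - p @ costs)         # exact gradient of E_{p_theta}[c]

n = 200_000
actions = rng.choice(3, size=n, p=q)    # logged actions sampled from q
w = p[actions] / q[actions]             # importance weights p_theta(a) / q(a)
score = np.eye(3)[actions] - p          # grad of log p_theta(a) for a softmax policy
estimate = ((w * costs[actions])[:, None] * score).mean(axis=0)

print(exact)
print(estimate)                          # close to the exact gradient
```

The importance weights can have high variance when q puts little mass where p_θ puts a lot, which is one reason off-policy gradient estimation is an active research topic.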

Week 5 (11/2): Variational objectives I

Bayesian inference can be cast as a variational problem of minimizing an expectation. In recent years, this point of view has led to a variety of useful loss functions for deep generative models and principled information-theoretic regularization. Student presentations will focus on recent developments in this subfield.

Readings

Student presentations on:

Lecture
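A minimal sketch of this variational view, assuming a conjugate toy model (z ~ N(0, 1), x | z ~ N(z, 1), one observation x = 2) whose exact posterior N(1, 1/2) is known. The negative ELBO, E_q[log q(z) − log p(x, z)], is an expectation under q(z) = N(m, s²), and here it is minimized by stochastic gradient descent with reparameterized samples z = m + sε. The model, learning rate, batch size, and number of steps are arbitrary choices for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
x = 2.0                       # hypothetical single observation

# Assumed model: z ~ N(0, 1), x | z ~ N(z, 1). Exact posterior: N(x / 2, 1 / 2).
def dlogjoint_dz(z):
    """d/dz log p(x, z) for the assumed model."""
    return -z + (x - z)

m, log_s = 0.0, 0.0           # variational parameters of q(z) = N(m, s^2)
lr, batch = 0.02, 64
for _ in range(3000):
    s = np.exp(log_s)
    eps = rng.standard_normal(batch)
    z = m + s * eps                        # reparameterized samples from q
    g = -dlogjoint_dz(z)                   # d/dz of -log p(x, z)
    grad_m = g.mean()
    # E_q[log q(z)] = -1/2 - log s + const, whose gradient w.r.t. log s is -1.
    grad_log_s = (g * s * eps).mean() - 1.0
    m -= lr * grad_m
    log_s -= lr * grad_log_s

print(m, np.exp(2 * log_s))   # close to the exact posterior mean 1.0 and variance 0.5
```

Because the model is conjugate, the Gaussian family contains the exact posterior and the minimum of the negative ELBO recovers it; for non-conjugate models the same procedure only finds the best approximation within the family.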

Week 6 (25/2): Variational objectives II

Student presentations will focus on applications to Bayesian neural networks, extensions to functional settings, and other developments. We will see how some of our efforts on gradient estimation can pay off.

Readings

Student presentations on:

Lecture

Week 7 (4/3): Policy optimization I

For the next four classes, we will shift focus to reinforcement learning, but connections to Bayesian inference will be omnipresent. The standard setting for policy optimization assumes that an agent can collect data by interacting with an environment. Student presentations will focus on recent developments in the problem of (mostly) online policy optimization.

Readings

Student presentations on:

Lecture

Week 8 (11/3): Offline policy evaluation

In modern applications of reinforcement learning, it is important to be able to evaluate a policy without interacting with the environment. Student presentations will focus on recent developments in the problem of offline policy evaluation.

Readings

Student presentations on:

Guest lecture:
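The simplest offline estimators reweight logged rewards by importance ratios. The sketch below assumes a three-armed bandit with hypothetical Bernoulli reward means, a known behaviour policy μ that generated the log, and a target policy π. It compares ordinary and self-normalized importance sampling against the true value Σ_a π(a) r̄(a), which can be computed only because the means are known in this toy setting.

```python
import numpy as np

rng = np.random.default_rng(0)

mean_reward = np.array([0.2, 0.5, 0.9])   # hypothetical true means (unknown to the estimators)
mu = np.array([0.6, 0.3, 0.1])            # behaviour policy that logged the data
pi = np.array([0.1, 0.2, 0.7])            # target policy we want to evaluate

# Logged dataset: actions drawn from mu, Bernoulli rewards.
n = 100_000
actions = rng.choice(3, size=n, p=mu)
rewards = rng.binomial(1, mean_reward[actions]).astype(float)

w = pi[actions] / mu[actions]              # importance weights pi(a) / mu(a)
v_is = np.mean(w * rewards)                # ordinary importance sampling (unbiased)
v_snis = np.sum(w * rewards) / np.sum(w)   # self-normalized (biased, often lower variance)

v_true = pi @ mean_reward                  # 0.1*0.2 + 0.2*0.5 + 0.7*0.9 = 0.75
print(v_true, v_is, v_snis)
```

Over longer horizons the ratios multiply along a trajectory and the variance can grow quickly, which is one motivation for the distribution-correction methods listed in the references for this topic.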

Week 9 (18/3): Policy optimization II

Learning optimal behaviour without interacting with an environment is very challenging. Student presentations will focus on recent developments in the problem of (mostly) offline policy optimization.

Readings

Student presentations on:

Lecture

Week 10 (25/3): Search and policy optimization

Monte Carlo Tree Search revolutionized game-playing AIs. Student presentations will focus on connections between search and policy optimization.

Readings

Student presentations on:

Lecture

Week 11 (1/4): Inference and control I

In the final two classes we will return to one of the central themes: the connections between control and inference.

Readings

Student presentations on:

Lecture
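One concrete instance of this connection, shown for a finite action set under assumed numbers: the KL-regularized control problem of minimizing E_{a ~ π}[c(a)] + KL(π ‖ p₀) over distributions π has the closed-form solution π*(a) ∝ p₀(a) exp(−c(a)). This is exactly a Bayesian posterior with prior p₀ and "likelihood" exp(−c(a)), and the optimal value is −log Σ_a p₀(a) exp(−c(a)), a negative log normalizing constant. Exponentiated updates of this form are closely related to several of the KL-regularized methods listed under Policy optimization I. The prior and costs below are hypothetical; the sketch checks both facts numerically.

```python
import numpy as np

def free_energy(pi, prior, costs):
    """E_{a ~ pi}[c(a)] + KL(pi || prior) for distributions on a finite set."""
    return pi @ costs + np.sum(pi * np.log(pi / prior))

prior = np.array([0.5, 0.3, 0.2])     # hypothetical prior / default policy p0
costs = np.array([1.0, 0.2, 2.0])     # hypothetical costs c(a)

unnormalized = prior * np.exp(-costs)
log_z = np.log(unnormalized.sum())
posterior = unnormalized / unnormalized.sum()   # optimal policy = "posterior"

print(posterior)
print(free_energy(posterior, prior, costs), -log_z)   # agree up to rounding

# Any other distribution has a larger objective value (KL is non-negative).
rng = np.random.default_rng(0)
others = rng.dirichlet(np.ones(3), size=1000)
print(all(free_energy(p, prior, costs) >= -log_z - 1e-12 for p in others))   # True
```

The identity follows from rewriting the objective as KL(π ‖ π*) − log Z, so minimizing an expected cost with a KL penalty and computing a posterior are the same problem.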

Week 12 (8/4): Inference and control II

In the final two classes we will return to one of the central themes: the connections between control and inference.

Readings

Student presentations on:

Guest lecture:

Recent references

This is a selected list of recent references relevant to this course, organized by topic for each week. Broken or incorrect links are likely; please let me know if you find one. This is not an exhaustive list of references. If you're intrigued by a subtopic, you should start here and follow the citation graph to find more related literature.

A common problem and basic tools

General reference:

Variational inference:

Reinforcement learning:

- Reinforcement Learning: An Introduction, Richard S. Sutton and Andrew G. Barto.
- Reinforcement Learning: Theory and Algorithms, Alekh Agarwal, Nan Jiang, Sham M. Kakade, and Wen Sun.
- Dynamic Programming and Optimal Control, Dimitri P. Bertsekas.
- Bayesian Reinforcement Learning: A Survey, Mohammad Ghavamzadeh, Shie Mannor, Joelle Pineau, and Aviv Tamar.
- A Tutorial on Thompson Sampling, Daniel Russo, Benjamin Van Roy, Abbas Kazerouni, Ian Osband, and Zheng Wen.
- Spinning Up in Deep RL, OpenAI.

Optimization:

Gradient estimation I

Recent work on relaxed estimators:

Some recent work on reparameterization gradients:

Some recent work on online policy gradients:

Gradient estimation II

Higher-order derivatives:

Some recent work on offline policy gradients:

Generalized perspectives:

Variational objectives I

Some deep variational objectives:

Extended state space objectives:

Gradient estimators for variational objectives:

Related variational objectives:

Variational objectives II

Variational Bayesian neural networks:

Advanced training methods, other objectives:

- SA-VAE (Kim et al., 2018)
- REM (Dieng & Paisley, 2019)
- TVO (Masrani et al., 2019)
- MSC (Naesseth et al., 2020)

Offline policy evaluation

Distribution Correction Estimation:

Policy optimization I

KL-regularized descent:

- NPG (Kakade, 2001)
- REPS (Peters et al., 2010)
- TRPO (Schulman et al., 2015)
- PPO (Schulman et al., 2017)
- ACKTR (Wu et al., 2017)
- MPO (Abdolmaleki et al., 2018)
- V-MPO (Song et al., 2020)

Policy optimization II

Batch RL:

- BCQ (Fujimoto et al., 2018)
- BEAR (Kumar et al., 2019)
- BRAC (Wu et al., 2019)
- MOPO (Yu et al., 2020)

Search and policy optimization

Search and policy optimization:

Inference and control

Inference as control:

- Systematic Stochastic Search (Mansinghka et al., 2009)
- A* Sampling (Maddison et al., 2014)
- Reinforced VI (Weber et al., 2015)
- Softstar (Monfort et al., 2015)
- BBVI with trust-region optimization (Regier et al., 2017)
- Gradient-Free VI using Policy Search (Arenz et al., 2018)
- Controlled SMC (Heng et al., 2019)
- Trust Region Sequential VI (Kim et al., 2019)
- Approximate Inference with MCTS (Buesing et al., 2020)
- VI with Future Likelihood Estimates (Kim et al., 2020)