What is Distributed Computing?

Paraphrasing Wikipedia:

A distributed system consists of multiple autonomous computers that communicate through a computer network for the purpose of achieving a common goal."We've gone through changes in the past," says Craig Mundie, Microsoft's chief research and strategy officer. But this one, he says, is the most "conceptually different" change "in the history of modern computing."

Excerpted from CNN Money article - A Chip too far?

Multicore processors are examples of distributed systems in which the cores cooperate to solve problems. Carefully written distributed algorithms must be employed to to take maximal advantage of parallelism provided by the cores and ensure situations such as deadlock , resource starvation, and race conditions are avoided. Research in distributed computing also attempts to create programming models to help simplify the complex task of splitting programs into multiple simultaneously executing threads.

Linearizability III

Linearizable histories and linearizable objects

Had a breakthrough in my understanding of linearizability and linearizable objects.

Part of my problem had to do with examples of histories in which the question was

posed "is this history linearizable?". Hence, intuitively it appeared to me that

linearizability was a property of histories and hence what needed to be constrained

was the system that made calls on the object. In other words, it appeared

that what we were looking for was a concurrent system which was constrained in such

a way that it only made sequences calls to objects that were linearizable. Recall

the following definition :

A concurrent object A is linearizable if, for every history H of every concurrent system { P1, ..., Pn ; A1, ..., Am }, H | Aj is linearizable.

The other issue I was stumbling over was the existence of linearizable histories. Again, looking at some of the examples: we are given a history and asked "is this history linearizable?". A history is essentially just a sequence of strings representing matching invocations and responses. If there is no constraint of what these string sequences can be composed of (besides matching), then we can always construct a sequence that violates the specification of any object of interest. In other words, we can always construct non-linearizable histories for any linearizable obejct. At least this was my thinking at the time!

A better way to pose these questions is perhaps as follows (consider some history where one or more processes access a single object A:

Consider the following history. Assuming that object A is linearizable, is this history possible?

The other Eureka moment I had was in class this past Friday where we were shown regular and safe registers. It was extremely illuminating to see examples of non-linearizable objects.

Regular register: A regular register can have any number of writers and any number of readers. The writes appear as if they were executed sequentially, this sequence complying with their real time order (i.e., if two writes w1 and w2 are concurrent, they can appear in any order, but if w1 terminates before w2 starts, w1 has to appear as being executed before w2). As far as a read operation is concerned we have the following:

- 1) Returns the value of the last completed write, or

- 2) If concurrent with another write, it can return the value of that write.

w(0) w(1)

[---------------] [-------------------]

[-------------------------] [----------------------]

r(?) r(?)

Below we see a possibile result for the two reads according to the specification of a regular register. It is aso linearizable as seen by the linearization points below:

w(0) w(1)

[--------x------] [----------x--------]

[-------------x-----------] [----------x-----------]

r(0) r(1)

However, the following is also a possibility accoring to the spec:

w(0) w(1)

[---------------] [-------------------]

[-------------------------] [----------------------]

r(0)

This is possible by (1) in the definition, since 0 is the value of the last completed write.

w(0) w(1)

[---------------] [-------------------]

[-------------------------] [----------------------]

r(1) r(0)

This is possible by (2) in the definition, since the first read is concurrent with the second write w(1). This is clearly not linearizable since:

w(0) w(1)

[---------------] [--------|----------]

[--------|-------|--------] [----------------------]

| r(1) | | r(0)

linearization } | | |

point of w(0) }<-] | |

must occur } | |

|<--+--->|

| |

linearization point of } | |

of w(1) and r(1) must }<---+ |

occur with w(1) <= r(1) } | {

[-->{ r(0) impossible here

| {

So this provides a great example of a type I had not seen: a legal history for an object that is not linearizable.

Linearizability II

I feel I've resolved the issues I had with the Wait-Free paper I mentioned in my last post.

First, in the Linearizability paper, its made clear that the <H partial order is strictly irrefelexive. This cleared up a question I had.

Second, the main problem I had was that:

A common example is to show that a linearizable sequence of invocations and responses for a LIFO queue X may not be linearizable if we replace X with a FIFO queue. But it's not clear to me how the definition above can distinguish between these two cases due the purely syntactic definition of matching.

This was resolved by the use of I/O Automata as a

formalism for concurrent systems in "Wait-free" (more on this,

and how it contrasts with the model in "Linearizable" below).

First, the term

linearizable can be quite different depending on what follows

the word linearizable. e.g.: We have

- Linearizable history

- Linearizable concurrent system

- Linearizable object

Linearizable History (from "Linearizable")

A history H is linearizable if

there exists a legal sequential history S such

that H can be extended (by appending

zero or more response events) to some history H’ and :

(1) for all Pi, complete(H’) | Pi = S | Pi

(2) <H ⊆<S

If H is a history, complete(H) is the maximal subsequence of H consisting only of invocations and matching responses.

Linearizable object (from "Wait-free")

A concurrent object A is linearizable if, for every history H of every concurrent

system { P1, ..., Pn ; A1, ..., Am },

H | Aj is linearizable.

Linearizable concurrent system (from "Wait-free")

A concurrent system { P1, ..., Pn ; A1, ..., Am } is

linearizable if, for each history H, there exists a sequential

history S such that

(1) for all Pi, H | Pi = S | Pi

(2) <H ⊂<S

(1) says that the system is locally* sequential, and (2) says that the local operation ordering respects the global operation ordering.

A history H is sequential if:

- (1) The first event of H is an invocation./li>

- (2) Each invocation, except possibly the last, is

immediately followed by a matching response.

Each response is immediately followed by a matching invocation.

We note that nowhere in the paper is it defined what a 'matching invocation to a response' is, and its not even clear what that could mean, since this means all sequential histories are infinite, yet the paper gives at least one finite history H1' that it says is sequential: e.g.: H1' has a response that is not immediately followed by any invocation—matching or otherwise (whatever 'matching invocation' could possibly mean). I assume this entire last sentence is an error and should be omitted in its entirety.

Effect of I/O Automata Formalism on definitions

Note that in "Wait-free" we no longer see the notion of 'legal sequential histories' appear in the definitions as we did in "Linearizable".

Linearizable object (from "Linearizable")

A linearizable object is one whose concurrent histories are

linearizable with respect to some sequential specification.

Linearizable object (from "Wait-free")

A concurrent object A is linearizable if, for every history H of every concurrent

system { P1, ..., Pn ; A1, ..., Am },

H | Aj is linearizable.

Presumably this is because if a concurrent system is modeled as a composite I/O automaton, then all histories are immediately legal since—for example—succesfully dequeing an object from an empty queue is impossible since the queue sub-automata should transition to an error state if this is attempted. In "Linearizable", however, histories are modelled as simple lists of invocations and responses. In this case we need to rule out 'impossible' histories like removing objects from empty data structures:

H

p: obj.dequeue()

p: obj.resp(y)

The definition of linearizable object states that for an object to be classified as linearizable, it's local history must be linearizable in EVERY history H of EVERY concurrent system. So when histories are simply lists of invocations and responses (i.e.: as they are in "Linearizable"), we need to add the following additional constraint on the behaviour of the concurrent system:

Legal Concurrent System

A concurrent system { P1, ..., Pn ; A1, ..., An } is legal if

for every history H and every object A in {A1, ..., Am},

H | A

is a legal sequential history of A.

We could then use in "Linearizable" the definition of linearizable object found in "Wait-free"—as long as we replaced concurrent system with legal concurrent system. With this additional constraint we ensure that our concurrent systems are realistic and aren't allowed to have histories with impossible sequences of operations on its shared objects.

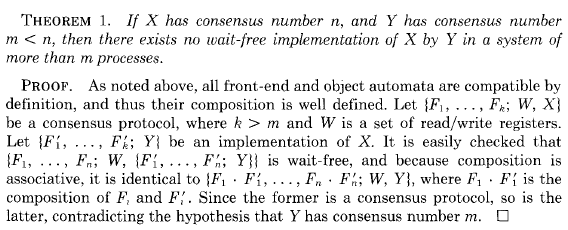

Proof of Theorem 1 of "Wait-free"

My proof of Theorem 1 of "Wait-free"

Same proof except for the fact I do not make the jump from k to

n as the proof above. i.e.: In the proof above Herlihy

implements X with Y using k front-ends, then in the next

sentence there is an implementation of X with Y using

n front-ends. Its not clear to me how we can

make this jump since we may have n strictly larger than k.

Besides—such a jump is not needed. Since k is already strictly

larger than m, we can avoid this jump and still get our

contradiction. See below.

Theorem 1. If X has consensus number n, and Y has consensus number m < n, then there exists no wait-free implementation of X by Y in a system of more than m processes.

PROOF:

Let { F1, ..., Fk ; W , X } be a k-process consensus protocol where k > m

and W is a set of r/w registers. Then clearly k ≤ n

(otherwise X would have a consensus number greater than

n). So we have that n ≥ k > m. Let { F1' , ..., Fk'

; Y }

implement X (note that this is not a consensus protocol, just an

implementation of X). Then :

{ F1, ..., Fk ; W , X }

≈ { F1, ..., Fk ; W

, { F1' , ..., Fk',

W , Y } }

≈ { F1·F1', ..., Fk·Fk'

; W , Y }

This last step is possible due to compatibility of front ends, and the fact that since X has consensus number n ≥ k, it can solve k-process consensus. But then we have shown that Y has consensus number k > m—a contradiction.

Linearizability

My main endeavor at this point is to read and absorb Maurice Herlihy's paper Wait-free Synchronization.

I recently read through the first half of Lynch and Tuttle's paper Intro to I/O Automata in order to get a more fleshed-out background in the I/O-Automaton formalism. I also happen to have copy of Lynch's book "Distributed Algorithms" for additional background if need be.

I'm currently reading the paper of Herlihy and Wing entitled Linearizability: A Correctness Condition for Concurrent Objects to more fully flesh out the notion of linearizability. So far it has been enormously helpful as it uses the same terminology and notation found in "Wait-free" but also includes a host of enlightening examples. I had become a bit unclear on how the definition of linearizability given in "Wait-free" took into account the fact that what constitutes—in the terminology of "Linearizable"—an "acceptable" linearization, is a function of the behaviour of the specific object(s) whose operations we are trying to linearize. As of now, the definition of a sequential execution as a sequence of invocations and responses where "the first event is an invocation and it alternates between matching invocations and responses (where matching simply means the invocation and response's process and object names agree)" seems to be an insufficient building block upon which to build the definition of linearizable. The definition of matching is a purely syntactic one (only caring about invocation and response strings), and as such, would appear to be oblivious to object semantics.

Some definitions... An execution history is defined to be sequential if it is derived from a sequential execution.

Quoting from "Wait-free":

Each history H [of a concurrent system] induces a partial "real-time" order <H on it's operations:

op0 <H op1 if the response for op0 precedes the invocation of op1. Operations unrelated by <H are said to be concurrent. If H is sequential, <H is a total order. A concurrent system { P1 , ... , Pn , A1 , ... , Am } is linearizable if for each history H, there exists a sequential history S such that

- for all Pi , H | Pi = S | Pi

- <H is a subset of <S

A common example is to show that a linearizable sequence of invocations and responses for a LIFO queue X may not be linearizable if we replace X with a FIFO queue. But it's not clear to me how the definition above can distinguish between these two cases due the purely syntactic definition of matching.

However, reading through "Linearizability" and studying the examples is slowly clearing this up for me (on page 4 they imply that they intend to tackle this issue head-on :)

Group Action notation

The notation used to represent group actions can be very difficult to parse, and sometimes potentially ambiguous, however this notation still may be the best of the alternatives....

If G is a group, then a group action G on a set X is a homomorphism u:G -> SX, where SX is the symmetric group of X.

A canonical example would be the map u:Dn -> Sn, where Dn is the dihedral group of order 2n (the group of symmetry-preserving rotations and reflections of an n-gon) and Sn is the symmetric group of {1,...,n}. The idea is to think of the integers 1,...,n as the vertices of the n-gon, and use the rotation and reflection 'actions' of Dn to manipulate them.

Getting back to the general definition above (where u is any homomorphism u:G -> SX), the first problem (notation-wise), is that SX is a set of permutations of X, and hence it is a set of functions. So for any g in G, u(g) is a permutation function on X. So we have three sets, G, X, and the permutation functions of X.

So if one wants to calculate how an element g in G acts on an element x in X, we need to look at:

(u(g))( x )

Some things to note: since u is a map from G to SX, the image of G is a subset of SX, and the natural operation of SX is function composition. Now, let a,b be in G (remembering u(ab), u(a), and u(b) are permutations on X), let x be in X, and let o represent function composition, then

(u(ab))(x) = (u(a))(u(b(x))) = (u(a) o u(b))(x)

This simply expresses that u is a homomorphism, but already the notation is getting unwieldy.

To 'simplify' this, the convention is to remove u from the notation entirely! So instead of

(u(a))( x ) ,

we write

a(x)

Hence we would write the example above as simply

(ab)(x) = a(b(x)) = (a o b)(x)

Seems reasonable enough, yes? Well, take a look at the following concrete example:

Here we have the integers Z acting on the reals R, letting SR be the set of permutation functions on R (SR is a HUGE group :)

Let u be defined such that

(u(k))( x ) = x + k

or in the new notation,

k(x) = x + k

We now prove that u is a homomorphism: Let j,k be in Z, and let x be in R, then

(j + k)(x) = x + (j + k) = (x + k) + j = j(x + k) = j(k(x))

Wow! It has become *very* hard to parse where we are dealing with j,k the integers and j, k the permutation functions! In the old notation this would have been very messy, but perhaps clearer:

(u(j+k))( x ) = x + (j + k) = (x + k) + j = (u(j))(x + k) = (u(j))( (u(k))( x ) ) = (u(j) o u(k))( x )

Now that I've gone through this and am feeling more comfortable with the simplified notation, I must say it really does control bracket-mania.

However, my favourite idea is the use of subscripts, i.e.: ug = u(g) works well:

u(j + k)(x) = x + (j + k) = (x + k) + j = uj(x + k) = uj( uk( x ) ) = ( uj o uk )(x)

It compares nicely to some previous examples as well, and handles the concrete example above much better in my opinion:

original recipe:

(u(j+k))( x ) = x + (j + k) = (x + k) + j = (u(j))(x + k) = (u(j))( (u(k))( x ) ) = (u(j) o u(k))( x )

crunchy:

(j + k)(x) = x + (j + k) = (x + k) + j = j(x + k) = j(k(x)) = (j o k)(x)

extra spicy:

u(j + k)(x) = x + (j + k) = (x + k) + j = uj(x + k) = uj( uk( x ) ) = ( uj o uk )(x)

To summarize, the subscript notation can be marginally more obtuse than the simplified method in some cases, but I think it handles certain situations (i.e.: the concrete example) much better.