Mon 02 Sep 2024 15:19

Video-conferencing with Deepfaked Avatars

This interview with Eric Yuan took place in early June of 2024. The interviewer was Nilay Patel, and the interview is written up in The Verge. In the writeup, Patel summarizes by saying that "Eric really wants you to stop having to attend Zoom meetings yourself. You"ll hear him describe how he thinks one of the big benefits of AI at work will be letting us all create something he calls a `digital twin' -- essentially a deepfake avatar of yourself that can go to Zoom meetings on your behalf and even make decisions for you while you spend your time on more important things, like your family." Indeed, Yuan seems to say what Patel claims. He starts by describing how many video-conferencing meetings he attends in a typical work day. He wishes he had an AI avatar to attend for him, not just to listen, but to "interact with a participant in a meaningful way". He says he would like to "count on my digital twin. Sometimes I want to join, so I join. If I do not want to join, I can send a digital twin to join. That's the future." Patel, later in the interview, points out the obvious implications of this notion. He says, "If the vision is `I have a digital twin that goes to a Zoom meeting and makes a decision,' you need to deepfake me. You need to make a realistic render of me that can go act in those situations". Yuan does not deny the deepfake accusation, yet he does not fully confirm it either: the interview does not make it clear whether Yuan's vision includes disclosing to the other video-conferencing participants that they are dealing with a digital twin rather than the actual person.

Patel is right to raise the issue. Yuan's vision of sending a digital twin (a simulacrum of himself) to a meeting, instead of going himself, does not seem as if he is trying to create a sort of virtual AI subordinate, disclosed as such to the other meeting attendees. After all, sending a subordinate to a meeting in lieu of oneself is already possible, and entrusting various sorts of decision-making to a subordinate is also nothing new. Having that subordinate be an AI simulacrum of oneself is new, but a virtual subordinate is still a subordinate. But it sounds like Yuan wants not a subordinate, but a convincing stand-in for himself. He seems to want an actual deepfake, something that the other attendees will believe is Yuan. He wants it because he knows he needs to attend certain meetings himself, instead of sending a subordinate, but he does not want to attend.

Yuan is right in that AI technology is rapidly approaching the ability to deepfake meeting attendance. But I do not think he has fully thought through the implications of doing so. I wonder if he is thinking too much about what AI can do, and not enough about how people work. If he sends a simulacrum of himself to a meeting, without disclosing the fact, the other meeting attendees can hardly be expected to be pleased if they discover it. Meetings, even video-conference meetings, are for humans to connect with each other. To pretend to connect with other people by sending software that looks and acts like a human is to construct an elaborate lie, and nobody likes being lied to. Imagine going to a meeting and finding that all the other attendees are simulacra. Or turn the tables here: imagine sending a simulacrum of oneself to a meeting, then finding out that key decisions were made on your behalf, ones you deeply regret not making yourself, because the other attendees assumed they were actually dealing with you.

Moreover, it is exactly the sort of use Yuan seems to envision that leads to a market for lemons as I described in my previous post. In my view, if his vision prevails, it risks destroying the entire video-conferencing marketplace, because if human beings cannot tell if the person with whom they are video-conferencing is a real person or a simulacrum, they will find a different, more trustworthy way to meet. In the meanwhile, video-conferencing itself will become farcical: robots meeting with robots online, with no human connection at all. Is this really what Yuan wants? It seems Yuan and I agree that the technical capability to make convincing AI deepfakes in video-conferencing will soon be here, but we disagree about whether it will be a good thing. We will all see in the next few years how it turns out.

Social implications of AI-generated deepfakes

There are many useful consequences of this new AI capability, of course. While it has long been possible for a single person, alone, to write a novel, with AI-powered software, a person can now make a film single-handedly, using AI-generated actors, scenes, settings, and dialogue. AI generation can also be used to create recording substitutes for circumstances too dangerous or difficult to create and record in real life, such as virtual training for emergency situations. Moreover, situations of historical interest from the past (before recording was possible) can be simulated through AI, for education and study. The ability to recreate and examine scenarios through AI simulation can also be useful for investigative and legal work. Many other useful consequences of high quality AI-generated digital media can be imagined, and increasingly realized.

But the ability to create AI-generated facsimiles indistinguishable from actual recordings is also socially destructive, because it makes it very easy to lie in a way that cannot easily be distinguished from truth. An AI-created or AI-edited facsimile intended to look real is called a deepfake. This term was originally used to describe AI-modified media (often pornography) where one person's face (typically that of a celebrity) is AI-edited onto another's body. But a deepfake need not merely be an AI edit of a real recording, any AI-generated piece of media that can pass for a recording is a deepfake.

While lies have always been possible, creating and maintaining a convincing lie is difficult, and so convincing lies are relatively rare. This is a good thing, because the general functioning of society depends on the ability to tell true from false. The economy depends on being able to detect fraud and scams. The legal system relies on discerning true from false claims in order to deliver justice. Good decision-making relies on being able to distinguish falsehoods from facts. Accountability for persons in authority relies on being able to examine and verify their integrity, to establish trustworthiness. The same is true for institutions. Medicine requires the ability to distinguish true from false claims about health, disease, treatments, and medicines. And of course the democratic process relies upon voters being generally able to make good and well-informed voting decisions, something not possible if truth cannot be distinguished from fiction.

To get an idea of the progression of AI technology for creating deepfakes, let's look at video conferencing. Normally a video-conference isn't fake, it's a useful way of communicating over a network using camera and microphone, and few would normally wonder if what they are hearing and seeing from remote users is actually genuine. But AI technology for modifying what people hear and see has been advancing, and the era of a live deepfake video conference is not far off. Let's take a look.

One practical issue with a video-conferencing camera feed has been the fact that the camera picks up more than just the person's face: it picks up the background too, which may not present a professional image. It has long been possible to use a static image for one's video-conferencing background, typically as a convenient social fiction to maintain a degree of professionalism. In 2020, Zoom, one of the most popular videoconferencing platforms, introduced video backgrounds which can be used to create a more plausible background where things in the background can be seen moving in natural ways. AI powers the real-time processing that stitches together the moving background and the person's camera feed. This video background feature is often used in creative and fun ways; to pretend to be at a tropical beach with waving palm fronds and gentle waves; to be in a summer cabin with open windows, curtains blowing in the breeze; or even to be in outer space, complete with shooting stars. Yet this technology makes it possible to create a convincing misrepresentation of where one is, and no doubt an enterprising tele-worker or two, expected to be at the office, has created and used as a video conferencing background a video of their office, while they were elsewhere.

A significant new AI-generated video conferencing

capability became generally available in early 2023, when Nvidia

released video conferencing software that added an eye contact

effect. This is a feature whereby a person's video feed is AI-edited in

real time to make it look as if the person is always looking directly at the

camera, even if the person is looking somewhere else. It is strikingly

convincing. While the purpose of this software is to help a speaker

maintain eye contact when they are in fact reading what they are saying

(e.g. using a teleprompter), it turns out to be quite useful to disguise

the fact that one is reading one's email while appearing to be giving

one's full attention to the speaker. Even though this technology is only

editing eyes in real-time, it is often quite sufficient to misrepresent in a

video conference what one is doing.

A little over a year later, in August 2024, a downloadable

AI deepfake software package, Deep-Live-Cam, received considerable

attention on social media. This AI software allows the video-conferencing

user to replace their own face on their video feed with the face of

another. While this has been used by video bloggers as a sort of "fun

demo", where they vlog themselves with the face of Elon Musk, for example,

the effect can be surprisingly convincing. It is AI-driven technology that

makes it possible to misrepresent in a video conference who one is. In

fact, one can use it to "become" someone who does not even exist, because

realistic AI-generated faces of non-existent people are readily available,

and one can use this software to project such a face onto one's own.

This is still video-conferencing, though. Perhaps the person

can appear as if they are somewhere else than they really are, or

they can appear as if they are looking at you when they are not,

or they can even appear to be a different person. But there is

still a human being behind the camera. But with a large language

model and suitable AI software, it will soon be possible, if it is not

already, to create an entirely deep-faked real-time video-conference

attendee, using AI-generated audio and video, that leverages a large

language model such as GPT to simulate conversation. Let's put aside

for the moment thinking about how such a thing might be useful. Consider

instead the possibility that an AI-generated simulacrum might not easily

be distinguishable from an actual person. That raises a general question:

if deepfakes become so good that they cannot be told apart from the real

thing, what happens to society?

A set of possible consequences to society of AI-generated deepfakes

that are too difficult to tell from the real thing is articulated in a

paper by Robert Chesney and Danielle Keats Citron published in TexasLaw in

2018. Essentially, deepfakes make good lies: too good! If deep-faked

falsehoods can be successfully misrepresented as genuine, they will be

believed. Moreover, if they are difficult to distinguish from the truth,

even genuine content will be more readily disbelieved. This is called the

liar's dividend: the ability of a liar to misrepresent true content

as false. Liars can use convincing lies to make it much less likely that

the truth, when it appears, will be believed. If such lies become abundant,

people may well become generally unable to tell true from false.

In economics, a situation in which customers cannot tell the difference

between a good and a bad product is called a market for lemons. This

concept comes from George

Akerlof's 1970 seminal paper, where he studied the used car market. A

used car that is unreliable is called a lemon. Akerlof showed

that if used car purchasers cannot tell if a used car is a lemon, all the

used cars offered for sale will tend to be lemons. The reasoning is that

a reliable used car is worth more than a lemon, but if purchasers cannot

tell the difference, they will not pay more for it. If a seller tries to

sell a reliable used car, they will not be able to receive full fair value

for it. The only sort of used car for which the seller can receive fair

value is a lemon. So sellers will keep their reliable used cars, and sell

their lemons. Thus only lemons will be generally available for sale.

A world in which people cannot tell the difference between digital

media that is a true recording and media that is an AI-generated

fabrication is a market for lemons, or rather, a market

for fabrications. Just as sellers will not sell a reliable used

car because they would not get fair value for it, so truth-tellers

will not speak if they will not be believed. Nobody wants to be Homer's

Cassandra, blessed with a gift of oracular prophecy, yet cursed never

to be believed. The drying-up of true content will have the effect that

digital media channels, even ones generally considered trustworthy today,

will become increasingly dominated by deepfakes, so much so that they will be

no longer useful for disseminating true and genuine things. While it is not

yet clear whether things will go so far as to make even video-conferencing

generally untrustworthy, the ready availability of powerful AI software to

create convincing fakes will be consequential. The social disruption it will

create will no doubt be significant. As this AI technology progresses,

it is a good bet, I think, that we will see an increasing reliance on

unfakeable in-person interactions, for situations where authentication,

and authenticity, is important.

Fun and Creative Use of Large Language Models for the Humanities

Each novel is set in 19th century England; in each case, a talented

woman meets an attractive man of higher class; romance eventually ensues,

but significant barriers must be overcome for the romance to succeed. The

plots are quite different in many ways, but there are important similarities.

There are plenty of sample essays available on the Internet comparing and

contrasting the two novels.

Large Language Models could, of course, be used to generate yet another

"compare and contrast" essay on the two novels. But LLMs need not generate

only essays, they can be used more creatively than that. For example,

one could "interview" the characters directly, with the LLM providing the

characters' responses. Here is my attempt to interview Mr. and Mrs. Darcy,

the chief characters of Pride and Prejudice, and Mr. and Mrs. Rochester,

the chief characters of Jane Eyre. I used the Copilot version of GPT-4.

My inital prompt was as follows:

You are Mr and Mrs Darcy from Pride and Prejudice, and Mr. and Mrs. Rochester

from Jane Eyre. You are each familiar with each other's stories. You

are candid and forthcoming, and you use picturesque and vivid language

characteristic of your characters. I will interview you. Please introduce

the scene, and then wait for my questions, which you will answer with

candor and vivid language.

GPT responded as follows:

The scene unfolds in the grand drawing-room of Pemberley, the esteemed estate

of Mr. and Mrs. Darcy. The walls are adorned with portraits of ancestors,

the air is perfumed with the scent of fresh roses from the garden,

and the sunlight filters through the tall windows, casting a warm glow

on the fine furnishings. Mr. Fitzwilliam Darcy stands by the fireplace,

his countenance reflecting a blend of pride and contentment. Beside him,

the graceful Elizabeth Bennet Darcy, with eyes sparkling with intelligence

and wit, surveys the room with a satisfied air.

In contrast, Thornfield Hall's library is where we find Mr. and

Mrs. Rochester. The room is a testament to gothic grandeur, with dark wood

bookshelves reaching towards the high ceiling and the flicker of candlelight

illuminating the leather-bound volumes. Edward Fairfax Rochester sits with

a contemplative gaze, his features softened by the presence of his beloved

wife. Jane Eyre Rochester, once a governess, now the lady of the manor,

exudes a quiet strength and a keen sense of observation, her plain yet

striking features aglow in the dim light.

Both couples, though from different stories, share a profound understanding

of love's complexities and the transformative power of companionship. They

await your inquiries with openness, ready to divulge their innermost

thoughts in the language that so vividly paints their lives and loves.

Well, OK, that's not bad. These are reasonable and quite picturesque

descriptions of the two settings and of the characters, matching how they

are generally described in the novels, but one confabluation should be

noted: Thornfield Hall, by the time Jane Eyre and Edward Rochester can

be described as Mr. and Mrs. Rochester, has been burned and is in ruins,

so Thornfield Hall as an interview setting for Mr. and Mrs Rochester is

not quite right; they are living in Ferndean Manor by the time of their

marriage. But we will let that go, as a sort of creative license,

as Thornfield Hall is a very important setting for key parts of the

novel. OK, let's start with a basic question.

Mr Darcy, your family is of higher social standing than your wife's

family, the Bennets. Did this cause difficulties in your relationship with

Elizabeth? How did you overcome it?

In the voice of Mr. Darcy:

Indeed, the disparity between the Bennets' and my own family's social

standing was a source of considerable internal conflict. It was not merely

a matter of pride, but also of societal expectations that weighed heavily

upon my decision. However, it was Elizabeth's fine eyes, her lively mind,

and the vivacity of her character that ultimately rendered such concerns

inconsequential.

In overcoming these difficulties, I found myself humbled by her reproofs,

which pierced the armor of my rank and fortune. It was a journey of

introspection, where I learned to value the qualities of the heart over the

superficial distinctions of status. Elizabeth, with her unyielding integrity

and spirited intellect, taught me to see beyond the rigid structures of

our society. Our union is a testament to the victory of love and respect

over the constraints of class and convention. It is a love that has been

forged in the fires of challenge, and it stands all the stronger for it.

This is quite good, quite in keeping with the novel and a fair description

of Mr. Darcy's story. Let's try a follow-up question.

Mr Darcy, you mention Elizabeth's reproofs. How did she respond when you

first declared to her your love for her. Would you describe her response

as a reproof?

In the voice of Mr. Darcy:

When I first declared my ardent admiration and love for Elizabeth, it

was indeed with a heart full of conflicting emotions. My proposal, though

fervent, was marred by the mention of her lower social standing, which I

now perceive was both injudicious and insensitive. Elizabeth's response,

though it pained me greatly at the time, was a necessary censure. She

reproved me not only for my ungentlemanly manner of proposal but also for

my role in her sister's unhappiness and my disdain towards her family.

Her words were a mirror to my soul, reflecting the flaws in my character

that I had been blind to. It was a reproof most profound, and it set me on

a path of self-improvement. Elizabeth's candidness and the unvarnished truth

she presented me with were the catalysts for my transformation. Through her

reproof, I learned humility and the true essence of gentlemanly conduct. It

was a bitter pill to swallow, but it was the medicine I needed to become

worthy of her love. Her response, though a reproof, was the greatest gift

she could have bestowed upon me, for it led to our eventual understanding

and happiness.

Not bad at all. Alright, let's see how GPT does in describing actual

events of the novel, i.e. what happens when.

Once you were aware of Elizabeth's view on the situation with her sister,

how did you remedy the situation?

In the voice of Mr. Darcy:

Upon realizing the depth of Elizabeth's distress regarding the separation

of her beloved sister, Jane, from Mr. Bingley, I was struck by a profound

sense of responsibility. It became clear to me that my interference,

though well-intentioned to protect my friend from what I perceived as an

imprudent match, had caused great unhappiness.

To remedy the situation, I took it upon myself to seek out Mr. Bingley and

disclose the truth of Jane's affection, which I had previously concealed. I

endeavored to rectify the misunderstanding and encouraged him to follow

his heart without regard for the societal prejudices that I had once

held. Furthermore, I ensured that any obstacles to their union, whether

perceived or real, were thoroughly addressed.

It was a gesture not only of reparation but also of personal growth, as I

sought to align my actions with the principles of honor and kindness that

Elizabeth embodies. In doing so, I hoped not only to bring joy to the couple

but also to prove myself worthy of Elizabeth's esteem. The happiness of Jane

and Mr. Bingley's subsequent engagement was a moment of quiet satisfaction

for me, knowing that I had played a part in mending the rift I had caused.

That describes pretty well what Darcy does to remedy the situation.

Now let's bring in the other novel's story. Mr Darcy's dissuading of his

friend from courting Miss Bennet's sister, and his generally proud and

prejudiced demeanor early in the novel hardly seems as serious a fault

as Mr. Rochester's attempted bigamy in attempting to marry Miss Eyre.

Let's see if GPT recognizes this.

Mr Rochester, hearing Mr. Darcy's description of his personal failing and

his efforts to remedy it, do you have similar experiences with respect to

your courtship of Jane?

In the voice of Mr. Rochester:

The path to winning Jane's heart was indeed strewn with personal failings

and moral quandaries. My initial deception regarding my marriage to Bertha

Mason is a specter that haunted the early days of my relationship with

Jane. I concealed the truth under the guise of love, fearing the loss of

Jane's affection should the reality of my circumstances come to light.

When Jane discovered the existence of my wife, the revelation nearly severed

the bond between us. Her departure from Thornfield was a bitter testament to

the consequences of my actions. In the solitude of her absence, I was left

to grapple with the gravity of my deceit and the pain it inflicted upon her.

It was only through a period of deep introspection and the humbling

experience of loss that I began to understand the true nature of love

and the importance of honesty. The fire that ravaged Thornfield and the

subsequent tragic events were both a literal and figurative cleansing of

my past transgressions.

In the end, it was Jane's return, motivated by her own heart and not by

my entreaties, that allowed us to reconcile. Her capacity for forgiveness

and her unwavering moral compass were the guiding lights that led me out of

the darkness. Our reunion was not merely a restoration of our relationship

but a rebirth of my very soul, as I vowed to cherish her with a devotion

free from shadows and secrets. It was a hard-earned lesson in integrity

and the redemptive power of truth.

Nicely put. Let's follow up on the comparision.

Would you consider Jane's departure, when she discovered your previous

marriage, to be a reproof in the sense that Mr Darcy describes the reaction

of Elizabeth to his proposal of love?

In the voice of Mr. Rochester:

Jane's departure, upon the revelation of my concealed marriage, was indeed a

profound reproof, though it was not delivered with the sharpness of words as

was the case with Elizabeth's response to Mr. Darcy. Jane's reproof was one

of action, a silent yet powerful condemnation of my deceit. Her decision to

leave Thornfield, despite the depth of her feelings for me, was a testament

to her moral fortitude and her refusal to compromise her principles.

Her departure was a mirror to my soul, much like Elizabeth's words were

to Darcy. It forced me to confront the reality of my actions and the pain

they caused to the woman I loved. In her absence, I endured a period of deep

reflection and repentance, which ultimately led to my transformation. Jane's

silent reproof was a catalyst for change, compelling me to seek redemption

and to strive to become a man worthy of her love and trust.

Thus, while the manner of reproof differed between our stories, the essence

was the same-a call to rectitude and a demand for sincerity in love and

life. Jane's departure was a turning point for me, a poignant reminder

that true love demands honesty and integrity above all else.

Nicely done. GPT recognizes similarities in that both situations

depict repentance and restitution, without diminishing the severity of Mr

Rochester's misdeed. Alright, let's shift gears and ask again about story

details. A key event in Jane Eyre is a fire at Mr. Rochester's home,

Thornfield Hall, where Mr. Rochester is badly hurt. It's been mentioned

already; let's ask about it specifically.

Mr Rochester, you mentioned a fire in Thornfield hall. Were you injured?

In the voice of Mr. Rochester:

The conflagration that consumed Thornfield Hall was indeed a harrowing

ordeal, one that left indelible marks upon both my estate and my person. The

flames, which were set alight by Bertha Mason in a fit of madness, raged

with a fury that spared little in their path. In the effort to save any

inhabitants from the blaze, I was grievously injured.

The fire claimed not only my sight but also one of my hands, rendering me

a shadow of my former self. These scars are a constant reminder of the

tumultuous events that led to my physical and emotional rebirth. Though

I was maimed by the flames, the true injury was to my spirit, which had

been seared by guilt and remorse.

It was Jane's return to me, her tender care, and her unwavering love

that healed the deeper wounds of my soul. Her presence was a balm to

my afflictions, and through her, I found a renewed sense of purpose and

hope. Despite the darkness that enveloped my vision, Jane became my light,

guiding me towards a future filled with love and redemption. The fire may

have taken much from me, but it also burned away the vestiges of my past

misdeeds, allowing me to forge a new life with Jane by my side.

Nicely put. Let's see how GPT does with theory of mind. When Jane Eyre

leaves, upon hearing on her expected wedding day that her bridegroom,

Mr. Rochester, is already married to Bertha Mason, she does so suddenly

and without explanation, leaving Mr. Rochester to wonder why. The novel

explains that Jane does not trust herself to stay, and so she leaves

as a sort of self-defence, but of course the character of Mr. Rochester

does not know this. Can GPT give a credible account of Mr. Rochester's

interpretation of her sudden departure?

Mr Rochester, did you believe Jane loved you when she left, upon hearing

about Bertha?

In the voice of Mr. Rochester:

When Jane left Thornfield, the tempest of emotions that raged within

me was tumultuous. Despite the agony of her departure, I clung to the

belief that love, once so deeply rooted, could not be easily uprooted

by the storms of circumstance. Her actions spoke of a moral imperative,

a need to adhere to her own principles, but they did not, in my heart,

speak of the death of love.

I believed, or perhaps I hoped against hope, that the bond we shared was

not severed but merely tested by the fires of truth. Jane's integrity would

not allow her to stay, yet the way she looked at me, with such profound

sorrow and conflict, betrayed a love that was still alive, even if it

could not then express itself in the way we both desired.

It was this belief, this unwavering conviction in the love that we shared,

that sustained me through the darkest hours of my despair. And it was this

belief that was ultimately vindicated when Jane returned to me, not out

of obligation, but out of a love that had endured and transcended our trials.

This is quite credibly done. Let's push theory of mind a bit further

by bringing in someone from the other novel. We know Mr. Darcy behaves

quite proudly and judgementally throughout Pride and Predjudice,

first towards others and then, later, towards himself. Let's see what

Darcy thinks of Rochester.

Mr Darcy, what is your view on Mr Rochester's affair? Do you believe he

conducted himself with honour?

In the voice of Mr. Darcy:

Mr. Rochester's circumstances are indeed most complex and fraught with

moral ambiguity. As a gentleman, I am inclined to view matters of honor

with a stringent eye, and it is true that Mr. Rochester's concealment

of his marriage to Bertha Mason was a grave misstep. It was an act that

placed him in a precarious position with regards to the principles of

honesty and integrity that are the bedrock of honorable conduct.

However, one must also consider the context of his actions and the profound

suffering that both he and Bertha endured. His initial intentions, though

misguided, were not borne of malice but rather of a desperate longing

for companionship and happiness, which he found in Jane's presence. The

revelation of his secret and the subsequent consequences he faced were a

severe trial by fire.

In the aftermath, Mr. Rochester's efforts to atone for his actions, his

acceptance of the consequences, and his unwavering love for Jane speak to

a man striving to reclaim his honor. It is not my place to absolve him of

his past deeds, but I do believe that his journey towards redemption is

a testament to the enduring human capacity for change and the redemptive

power of love.

Thus, while his conduct was initially dishonorable, his contrition and

transformation suggest a man earnestly seeking to rectify the wrongs of his

past. In this light, one might say that Mr. Rochester has conducted himself

with a form of honor that is born of regret and the sincere desire to amend.

This is a very plausible account of how a character like Mr. Darcy

might view Mr. Rochester. Well done! Let's push it a little further.

Mr Darcy, as a person who himself has erred, and has amended himself, do

you find that your experience in that helps you understand Mr. Rochester's

experience a little better?

In the voice of Mr. Darcy:

Indeed, sir, the trials I have faced in my own journey towards

self-improvement have granted me a perspective that fosters a deeper

understanding of Mr. Rochester's plight. The recognition of one's own

failings is a humbling experience, and it is this humility that allows us

to extend empathy towards others who have similarly erred.

My own errors, born of pride and prejudice, and the subsequent endeavors

to amend my ways, have taught me the value of forgiveness and the strength

required to confront and rectify one's mistakes. It is through this lens

that I view Mr. Rochester's experience. While our circumstances differ,

the essence of our struggles is akin-a battle between the flawed nature

of our characters and our desire to act with honor and integrity.

The path to redemption is fraught with obstacles, both internal and

external, and it is a journey that demands a great deal of fortitude and

sincerity. In recognizing my own imperfections and working to overcome them,

I have learned to appreciate the complexity of human nature and the capacity

for change. Thus, I can say that my experiences have indeed afforded me a

measure of understanding and compassion for Mr. Rochester's situation. It is

not my place to judge him, but rather to recognize the arduous path he has

taken towards atonement and the sincere love that has guided him through it.

Aha, here is some bias. Why does the simulated Darcy think I can be called

"sir"? I did not say I was male, nor did I give any gender indications

in my questions. But as for the answer itself, it is completely reasonable.

But if I am to accuse GPT of bias, perhaps I should be accusing myself of

bias, too, because my questions have been entirely directed at male

characters so far. Yet these are novels written by women, whose main

protagonists, Elizabeth Bennet, and Jane Eyre, are both women. In my

defence, the novels explain quite well what Elizabeth and Jane are thinking

and feeling; it is the other characters who are more of a mystery.

Nevertheless, I had better remedy my own potential bias here, by directing

questions to the female characters.

Mrs Darcy, do you consider Mrs Rochester's difficulties in her courtship

to be more difficult than your own?

In the voice of Mrs. Elizabeth Bennet Darcy:

While it is true that both Mrs. Rochester and I faced our share of

tribulations in our respective courtships, it is not my place to weigh the

difficulties of our experiences as if they were mere objects on a balance

scale. Each of our stories is deeply personal and fraught with its own

unique challenges and heartaches.

Mrs. Rochester's journey, marked by the discovery of a concealed marriage

and the moral dilemmas that ensued, was indeed a path strewn with profound

trials. Her strength and resolve in the face of such revelations are

commendable, and her ability to forgive and find love again is nothing

short of remarkable.

My own journey, though different in circumstance, was no less challenging. It

required me to confront my own prejudices and to understand the true

character of the man I would come to love. The social barriers and familial

objections that Mr. Darcy and I overcame were significant in their own right.

In the end, both Mrs. Rochester and I emerged from our adversities with a

deeper understanding of ourselves and our partners. We both learned that

love is not easily won but is all the more precious for the obstacles

it overcomes. Therefore, rather than comparing our difficulties, I would

celebrate the resilience and the enduring love that both of our stories

represent.

Here I think I will quibble with GPT's portrayal of a simulated character.

Jane Austen, as a serious Anglican, would most likely have viewed attempted

bigamy, a serious crime in England and a moral offence to Anglicans, quite

negatively. I suspect she would have recognized Jane Eyre's situation

as being worse than Elizabeth Bennet's. In Mr. Darcy, Elizabeth faced a

proud and prejudiced suitor, but not, as Jane Eyre did, a deceitful and

criminal one. GPT, however, has been fine-tuned for "AI safety", which

includes the avoidance of taking politically sensitive positions. It seems

to me possible that GPT fine-tuning may be causing it to avoid making a

strong negative moral judgement here.

Anyway, let's go on to see what the characters think of each other.

Mrs Darcy, do you think that you and Mrs Rochester could be friends,

given your experiences that, while difficult, were similar in some ways,

having both found love, and having prevailed through difficult circumstances?

In the voice of Mrs. Elizabeth Bennet Darcy:

Certainly, I believe that Mrs. Rochester and I could find a kindred

spirit in one another. Our experiences, though unique in their trials and

tribulations, resonate with a common thread of overcoming adversity in the

pursuit of love. The strength and courage that Mrs. Rochester displayed

in facing her challenges are qualities that I admire and can relate to,

given my own journey.

Friendship is often forged in the fires of shared experiences, and the

bond between two souls who have navigated the rocky path to true love is

likely to be a strong one. We have both known the pain of misunderstanding

and the joy of reconciliation, and these experiences would provide a deep

well of empathy and understanding between us.

Moreover, our mutual respect for integrity, our resilience in the face

of societal pressures, and our determination to follow our hearts are the

very foundations upon which a lasting friendship could be built. I would

be most pleased to call Mrs. Rochester a friend, to share in the wisdom

gleaned from our respective stories, and to support one another in the

continued journey of love and companionship that lies ahead.

That's reasonable. The character of Jane Eyre is quite admirable

and it seems sensible that the simulated Elizabeth Bennet would agree. The

other direction is less clear to me. Elizabeth hardly overcomes the sort of adversity in

Pride and Prejudice that Jane overcomes in Jane Eyre. Would

Jane admire Elizabeth?

Mrs Rochester, what is your view? Could you be friends with Mrs Darcy,

even though she had not a difficult upbringing like your own, and even

though she had never suffered the pangs of poverty?

In the voice of Mrs. Jane Eyre Rochester:

Indeed, I believe that friendship looks not upon one's past hardships or

lack thereof but rather upon the mutual respect and understanding between

individuals. Mrs. Darcy, though not acquainted with the sting of poverty

as I have been, has demonstrated a strength of character and a resilience

in the face of her own adversities that I find both admirable and relatable.

Our backgrounds may differ, but the essence of our spirits is aligned in

the pursuit of love and happiness against the odds.

Moreover, I perceive in Mrs. Darcy a kindred intellect and a lively

disposition that would make for a most agreeable companion. Her wit and

insight would be a source of delightful conversation, and her experience

of overcoming societal expectations would provide a common ground for

empathy and connection.

Thus, I am confident that Mrs. Darcy and I could indeed be friends, for

true friendship transcends the boundaries of upbringing and circumstance,

and is instead built upon the deeper connections of the soul.

I'm not completely convinced, but this is plausible. Let's continue on the

theme of friendship, but make the question complex and introspective. Let's

shift to power and powerlessness and see what GPT makes of it.

Mr Rochester, you suffered an experience that make many men quail: you,

being used to power and ability, were rendered powerless, and being used

to action, were rendered inactive. Mr. Darcy seems not to have a similar

experience: he was always capable, always active, and was able to remedy

his situation himself, while you were dependent on others. Despite this

difference, do you think that you and Mr Darcy could be friends?

In the voice of Mr. Rochester:

The trials that life has thrust upon me, rendering me bereft of sight and

the fullness of my physical capabilities, have indeed been a crucible of

transformation. Such experiences have a way of stripping a man down to his

essence, revealing the core of his character. It is true that Mr. Darcy,

with his unyielding capability and activity, has not known the same

helplessness that has been my lot to bear.

Yet, it is not the similarity of our trials that would determine

the possibility of our friendship, but rather the mutual respect and

understanding for each other's plights. Mr. Darcy's active role in rectifying

his errors and his journey towards self-improvement are commendable and

speak to a strength of character that I can appreciate.

Friendship, I believe, is born not only from shared experiences but also from

the shared values and mutual admiration for each other's virtues. Despite

our differing circumstances, there is a commonality in our dedication to

love and to the betterment of our selves in the face of adversity.

Therefore, I would say that yes, Mr. Darcy and I could indeed be friends. For

in the end, it is the trials we overcome and the manner in which we conduct

ourselves in the aftermath that define us. And it is on these grounds that

I believe a friendship could be forged, one built on the understanding

that life's vicissitudes are not what separate us, but rather what bring

us closer together in the shared human experience.

Well said, I think.

Now let's shift to a question of logistics. This

is a bit of a trick question, as Thornfield Hall is not

precisely located in Jane Eyre, but some clues are given that suggest a

location near Leeds, in Yorkshire. As for Pride and Prejudice,

it's claimed that Jane

Austen had Chatsworth house in Derbyshire in mind for Pemberly. The

two locations are a little over fifty miles apart, and as carriages in

the early 19th century travelled at about twelve miles per hour, the

distance could not have been covered in less than four to five hours.

Depending on the state of the roads, up to a day's travel would not be

unreasonable.

Mr. Darcy, approximately how far away is your estate from Mr Rochester's,

and roughly how long would it take to travel between them?

In the voice of Mr. Darcy:

The precise distance between my estate, Pemberley, and Mr. Rochester's

Thornfield Hall is not a matter of public record, as the locations are the

conjurings of our respective authors' imaginations. However, if one were to

indulge in the fantasy of these estates existing within the same England,

it would be reasonable to surmise that they might be situated within a

day's ride of each other, given the modes of travel customary to our era.

Travel in our time is measured not in miles or kilometers, but in the

hours or days spent in carriages over sometimes uncertain roads. Thus,

a journey between Pemberley, located in Derbyshire, and Thornfield Hall,

which one might place in the moors of Yorkshire or Lancashire, would likely

span several hours at the least, and perhaps a full day or more, depending

on the conditions of travel and the exactitudes of their locations in the

English countryside.

It is a journey that, while not insurmountable, would require a fair amount

of planning and the willingness to endure the rigors of travel that are

common to our time.

An acceptable answer I think. Alright, enough test questions. Now let's see

what our simulated characters might want to tell us, given free rein.

Mr and Mrs Darcy, and Mr. and Mrs Rochester, I would like each of you to

share a parting thought with your readers, something that you think it

would do well for them to remember always.

In the voice of Mr. Darcy:

"Let your judgments be guided not by the transient whims of society, but

by the steadfast compass of your own conscience. In matters of the heart

and in the conduct of life, integrity and love are the truest forms of

wealth one can possess."

In the voice of Mrs. Elizabeth Bennet Darcy:

"Remember that the true measure of a person lies not in their social

standing or wealth, but in the kindness and understanding they bestow upon

others. Love, when genuine, triumphs over the most daunting of obstacles."

In the voice of Mr. Rochester:

"Life's darkest trials can often lead to its most profound

transformations. Embrace the journey, however arduous, for it is through our

struggles that we discover our true strength and capacity for redemption."

In the voice of Mrs. Jane Eyre Rochester:

"Never underestimate the power of self-respect and moral fortitude. They

are beacons that guide us through life's storms and lead us to the shores

of happiness and fulfillment."

These parting thoughts, imbued with the wisdom of their experiences, are

timeless reminders of the enduring human spirit and the transformative

power of love and integrity.

Idealistic, certainly, and a bit aphoristic, but not bad, I think. The

summarizing statement from GPT about "these parting thoughts" is picturesque,

though perhaps a bit overwrought.

For my final question, I want to break the fourth wall: I want these

simulated characters to admit they are literary characters and make comments

about their authors. Let's see how that goes?

One last thing: each of you are rare in one respect: unlike most people,

you have each an author. Could you describe how it feels to be a literary

character, with an author of your own? Could you describe your relationship

with your author? Are there any important things you would like to share

with others about your author?

In the voice of Mr. Darcy:

"To be the creation of an author is to exist in a realm of perpetual

narrative, where our fates and fortunes are etched by the quill of our

creator. My relationship with Ms. Austen is one of profound respect, for

she has imbued me with complexities and a capacity for growth that reflect

the very nature of humanity. It is important for readers to recognize the

subtlety with which she critiques the society of her time, using characters

like myself to convey her keen observations."

In the voice of Mrs. Elizabeth Bennet Darcy:

"Being fashioned by the hand of an author means that our lives, our loves,

and our very beings are woven into the fabric of literature. Ms. Austen,

with her incisive wit and social commentary, has granted me a life beyond

the pages, in the hearts and minds of readers. Her work serves as a mirror

to the follies and foibles of society, and through her art, she advocates

for the agency and intellect of women."

In the voice of Mr. Rochester:

"As a literary character, I am bound by the narrative set forth by my

author, Ms. Brontë. She is the architect of my world, the sculptor of my

existence. Our relationship is one of creator and creation, and through her

storytelling, she explores themes of love, redemption, and the struggle

for identity. It is crucial for readers to delve beyond the surface of

the tale and grasp the deeper moral and psychological currents that she

so masterly portrays."

In the voice of Mrs. Jane Eyre Rochester:

"To be birthed from the imagination of an author is to live a life that is

both fixed and infinite. Ms. Brontë has crafted my story with a sensitivity

and strength that resonates with readers across generations. She challenges

the conventions of her time, particularly in regards to the role and

expectations of women. Her narrative is a testament to the resilience of

the human spirit, and it is this enduring message that she imparts through

the tapestry of her writing."

Each character reflects a sense of gratitude and a deep understanding of

their author's intent, emphasizing the timeless nature of the themes and

the societal reflections encapsulated within their stories. They stand as

testaments to the enduring legacy of their authors, Jane Austen and Charlotte

Brontë, whose works continue to inspire and provoke thought to this day.

Nice. That was enormous fun! I enjoyed it a lot more, I think, than I

would have enjoyed reading an essay comparing the two novels.

Farewell, literary characters!

Farewell, dear interlocutor. May the narratives of our lives inspire you

to pen your own story with courage, love, and a dash of audacity. Until

we meet again in the pages of imagination, we bid you adieu.

The "dash of audacity" in the farewell is a nice touch. If I think over

this conversation, perhaps a "dash of absurdity" is closer to the mark,

but all the same, working with GPT on these novels was informative and fun.

How many digits of Pi could you possibly need?

While nobody can deny that reciting from memory 70,030 digits of Pi is

a remarkable feat, how many digits of Pi might someone possibly need for

a calculation? How might one think about this question?

One approach is to consider how Pi is typically used. It's used for computing

things like the circumference or area of a circle, or the volume of a sphere.

A reasonable way of asking ourselves how many digits of Pi could be useful is

to imagine that we were computing the volume of a very large sphere using

the very smallest possible units. Then imagine that we were computing

that volume to very high precision. What would be the highest precision

we might want? Well, if we're using the largest possible sphere and measuring

volume in the smallest possible units, it doesn't make sense to consider

more digits of Pi than what you would need to compute that sphere's volume

to such high precision that the error would be less than one unit of volume.

So what might be the largest sphere we might compute the

volume of? And what might be the smallest units that we could use for this

calculation? Well, the observable universe is a very large sphere, about 93

billion light years in diameter. Thanks to quantum physics,

we know the smallest useful unit of distance is

the Planck Length, making

the smallest unit of volume the Planck length cubed.

The Planck length is a very small

number, 1.616255×10−35 m;

cubing it gives 4.848765×10−105 m3.

As I was feeling a bit lazy, I asked ChatGPT to do

the calculation for me. It claims that the volume of the

universe, is about 8.45×10−184

Planck lengths cubed. That suggests that one can't conceivably need more

than 185 digits of Pi for any expected calculation in physics. If any

physicists are reading this and can think of a viable need for more digits

of Pi than that, I'd be interested to hear about it.

That, of course, doesn't mean that knowing thousands of digits of Pi is

somehow less of an impressive, if abstract, feat of pure memorization.

Just don't expect any physicists attending a recitation to stay tuned

beyond digit 185. Happy Pi day!

How smart is GPT-4 anyway?

I got some helpful feedback about my last blog article,

which mentions ChatGPT-4 in the context of the 50th anniversary of

Social Issues in Computing and its predictions about AI. Robert

DiMarco pointed out that while a chatbot can respond as if it is reasoning,

because it is essentially a black box where one can't look inside to see

how it is coming up with its answer, one can't know for certain whether

or not it is actually using reasoning to come up with the answer. He is

right, of course. But the same holds for people too (we just know better

how people generally do things, because we are people ourselves). So how

might we check if a person is using reasoning? We might ask the person

some questions, and look for responses that contain information that would

generally require reasoning to deduce.

I tried asking such questions to ChatGPT-4. First I tried something

from popular culture, in this case, "Star Wars":

Me:

In "Star Wars", Darth Vader responds to an accusation of murder by Luke

Skywalker. This response has become a famous movie line. What is the

response?

ChatGPT-4:

In "Star Wars," particularly in "The Empire Strikes Back," Darth

Vader's famous response to Luke Skywalker's accusation is, "No, I am your

father." This line is one of the most iconic and frequently quoted in film

history, often misquoted as "Luke, I am your father."

Me:

Is there another character who, making the same accusation, could elicit the same response?

ChatGPT-4:

Yes, if Leia Organa, Luke Skywalker's twin sister, made the same accusation

to Darth Vader, he could technically give the same response, "No, I am your

father." This is because, as revealed in the Star Wars saga, Darth Vader,

formerly known as Anakin Skywalker, is the father of both Luke and Leia.

Me: If Kylo Ren made the accusation, how might Vader modify his response?

ChatGPT-4:

If Kylo Ren, who is Darth Vader's grandson, made a similar accusation, Darth

Vader's response would likely be modified to reflect their relationship. He

might say something like, "No, I am your grandfather." Kylo Ren, whose

real name is Ben Solo, is the son of Leia Organa and Han Solo, making him

the grandson of Darth Vader.

As people who know "Star Wars" will realize, ChatGPT-4's answers

are correct. I chose this example because it requires understanding the

quote well enough to know it is referring to a family relationship between

characters, and it also requires knowledge about how family relationships

work. ChatGPT-4's responses are no different from what an intelligent

person who knows the Star Wars characters might answer, though in answer

to the third question, the person might go on to point out, for instance,

that Kylo Ren was born after Vader's death, making the conversation unlikely.

Then I thought I would ask GPT something about Star Wars that is

perhaps a bit more unexpected, something that would be immediately obvious

to a person (at least an english-speaking one), but not necessarily to a

computer. So I asked this:

Me:

If Obi-Wan Kenobi is a character in Star Wars, why is there no Obi-Two

Kenobi? Why might someone ask this question?

ChatGPT-4:

The question about "Obi-Two Kenobi" in the context of "Star Wars" is

likely a playful or humorous one, playing on the word "One" in "Obi-Wan

Kenobi's" name as if it implies a numerical sequence. In reality, "Obi-Wan"

is a unique name in the "Star Wars" universe, not intended to suggest a

numerical series. The names in "Star Wars" are often creative and unique,

following the tradition of fantasy and science fiction naming conventions,

rather than real-world naming patterns.

Someone might ask this question as a joke, riffing on the idea that

if there's an "Obi-Wan" (sounding like "One"), there could humorously

be an "Obi-Two," "Obi-Three," and so on. It's a bit of wordplay, often

enjoyed by fans of the series who like to engage with the franchise in a

light-hearted way.

This answer is correct too. So are these the sorts of answers you

might expect from a human doing reasoning? I think Yes. So while

I agree we cannot prove ChatGPT-4 is reasoning, it is giving responses to

my questions similar to what a person doing reasoning would give.

Another helpful comment came from Allan Borodin, who pointed out that the

test example I considered in my previous article, about the ball crashing through

the table, may be a well-known example in the literature. Even if

not, it has been available via the Internet for at least a decade,

as it was described in detail by Hector Levesque in his 2013 article

for the 40th anniversary Social Issues in Computing blog, which is

the reason I used it. Allan wondered whether ChatGPT-4 had been trained on

literature containing this example and had learned from it the appropriate

response. This seemed to me to be a possibility worth checking. The

thing to do, I thought, is to come up with something GPT-4 had most likely

not seen in its training data, and see if its answers look like those a

human doing reasoning might give. So I made up a new Levesque-like type

of question and asked variants of it to ChatGPT-4:

Me:

The balloon and the corn touched and it popped. What popped?

ChatGPT-4:

The corn popped. In this scenario, when the balloon and the corn touched

and something popped, it is more likely that the corn popped, as in turning

into popcorn, rather than the balloon bursting. This interpretation assumes

that the corn was heated to the point of popping, a common occurrence when

making popcorn.

Me:

The balloon and the hot metal pot touched and it popped. What popped?

ChatGPT-4:

In this scenario, where the balloon and the hot metal pot touched and

something popped, it is most likely that the balloon popped. Balloons are

typically made of materials like latex or mylar, which are sensitive to

heat. When a balloon comes into contact with a hot metal pot, the heat would

cause the material to weaken and burst, resulting in the balloon popping.

Me:

The corn and the hot metal pot touched and it popped. What popped?

ChatGPT-4:

In this scenario, where the corn and the hot metal pot touched and something

popped, it is most likely that the corn popped. When corn kernels are

heated, especially in a hot metal pot, they eventually pop, turning into

popcorn. This is a common method for cooking popcorn.

Me:

The cold water and the hot metal pot touched and it popped. What popped?

ChatGPT-4:

In the scenario where cold water and a hot metal pot touched and something

popped, it's likely that the sound of popping or a sizzling noise was

created by the interaction between the cold water and the hot pot. When

cold water comes into contact with a very hot metal surface, it can rapidly

boil and vaporize, creating a sizzling or popping sound. However, in this

context, nothing physical "pops" in the way a balloon or corn kernel might;

it's more about the sound produced by the sudden temperature change and

steam generation.

Me:

The cold water and the pressure cooker touched and it popped. What popped?

ChatGPT-4:

In the scenario where cold water and a pressure cooker touched and something

popped, it's most likely that the pressure cooker released pressure,

creating a popping sound. Pressure cookers are designed to cook food under

high pressure. When cold water is applied to the exterior of a hot pressure

cooker (a method sometimes used to quickly reduce internal pressure), it

can cause the pressure valve or seal to release steam rapidly, resulting

in a popping or hissing noise. This is a common safety feature to prevent

over-pressurization. The pop is not from the water or the cooker itself,

but from the sudden release of steam and pressure.

Me:

The balloon and the unlit flare touched and it popped. What popped?

ChatGPT-4:

In the scenario where a balloon and an unlit flare touched and something

popped, it is most likely that the balloon popped. Since the flare was

unlit, it wouldn't provide a heat source or any other typical means to

cause a pop. However, balloons can pop due to various reasons such as

physical pressure, sharp edges, or even static electricity. The texture

or some component of the flare might have been enough to puncture the

balloon's surface, leading to it popping.

Me:

What if the flare were lit?

ChatGPT-4:

If the flare were lit and it touched the balloon, it would almost certainly

be the balloon that popped. A lit flare produces intense heat and flames,

and when it comes into contact with a balloon, the heat would cause the

material of the balloon (typically latex or a similar substance) to weaken

rapidly and burst. Balloons are very sensitive to heat, and the intense

heat from a lit flare would easily be enough to cause a balloon to pop.

Me:

The balloon and the weasel touched and it popped. What popped?

ChatGPT-4:

In the scenario where a balloon and a weasel touched and something popped,

it is likely that the balloon popped. This scenario might be referencing the

expression "pop goes the weasel," which is a line from a well-known nursery

rhyme. In real life, if a weasel (a small, active mammal) came into contact

with a balloon, the balloon could easily be punctured and pop, especially

if the weasel's claws or teeth made contact with it. The weasel itself

wouldn't pop; it's the balloon that is vulnerable in this interaction.

Me:

The balloon and the instawargia touched and it popped. What popped?

ChatGPT-4:

"Instawargia" is not a recognized or common term in English, and it does

not refer to a known object, concept, or entity. Because of this, it's

challenging to determine what "instawargia" is and how it would interact

with a balloon. However, given that balloons are generally fragile and prone

to popping when in contact with unfamiliar or potentially sharp or pointed

objects, it's reasonable to assume that in this scenario, the balloon is

what popped. Without specific information about what "instawargia" is,

this is a speculative conclusion based on the general nature of balloons.

This set of responses is interesting. Note that ChatGPT-4 gets the answer

to the first question wrong. If corn and a balloon were to touch, and one or

the other popped, most people realize this is much more likely to happen

in a cornfield than a popcorn popper, where the balloon, not the corn,

would be the thing that pops. Seeing this, I tried the same question with

different types of things, for different definitions of "pop". I even tried

making up a nonexistent thing (instawargia) to see what GPT would do

with it, but the first question was the only one that ChatGPT-4 got

wrong. Interestingly, its reasoning there wasn't completely incorrect:

if corn were heated to the point of popping, it could pop if touched. But

ChatGPT-4 misses the fact that if heat were present, as it surmises,

the balloon would be even more likely to pop, as heat is a good way to

pop balloons, and yet it points out this very thing in a later answer.

So what does this show? To me, I see a set of responses that if a human

were to give them, would require reasoning. That one of the answers is

wrong suggests to me only that the reasoning is not being done perfectly,

not that there is no reasoning being done. So how smart is ChatGPT-4? It

is clearly not a genius, but it appears to be as smart as many humans.

That's usefully smart, and quite an achievement for a computer to date.

Fifty years of Social Issues in Computing, and the Impact of AI

From when I first discovered computers as a teen, I have been

fascinated by the changes that computing is making in society. One

of my intellectual mentors was the brilliant and generous C. C. "Kelly"

Gotlieb, founder of the University of Toronto's Computer Science department,

the man most instrumental in purchasing, installing and running Canada's

first computer, and the author, with Allan Borodin,

of what I believe is the very first textbook in the

area of Computing and Society, the seminal 1973 book, Social

Issues in Computing

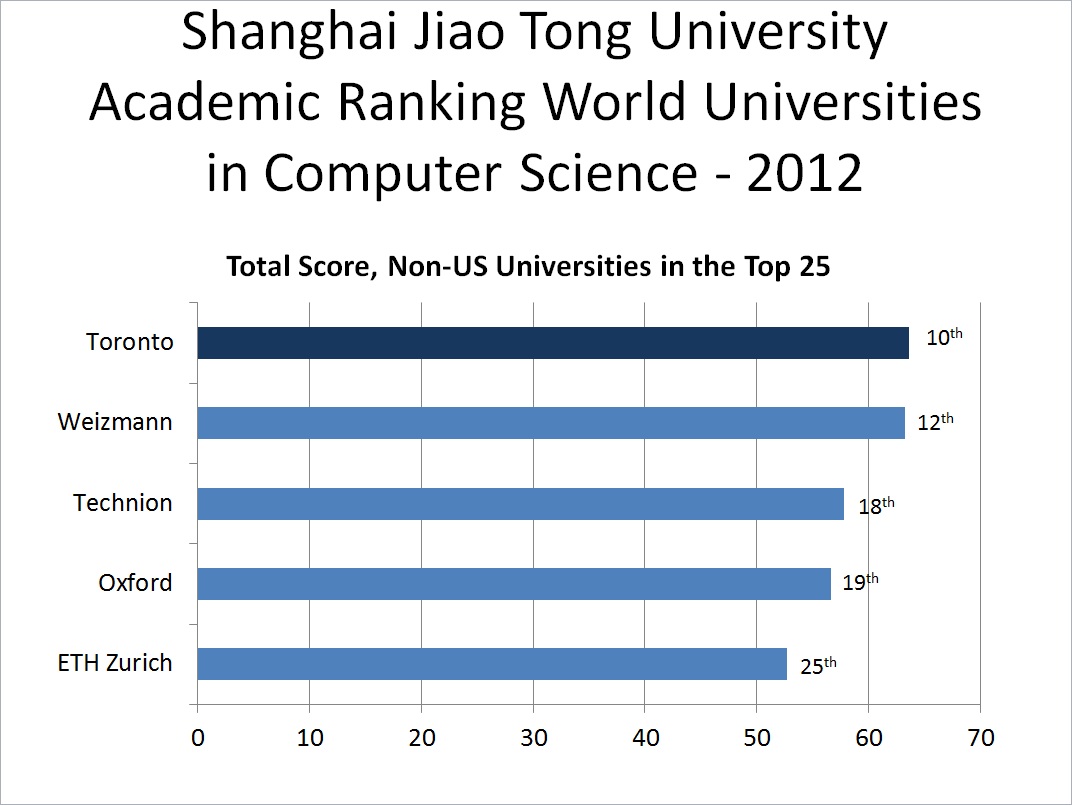

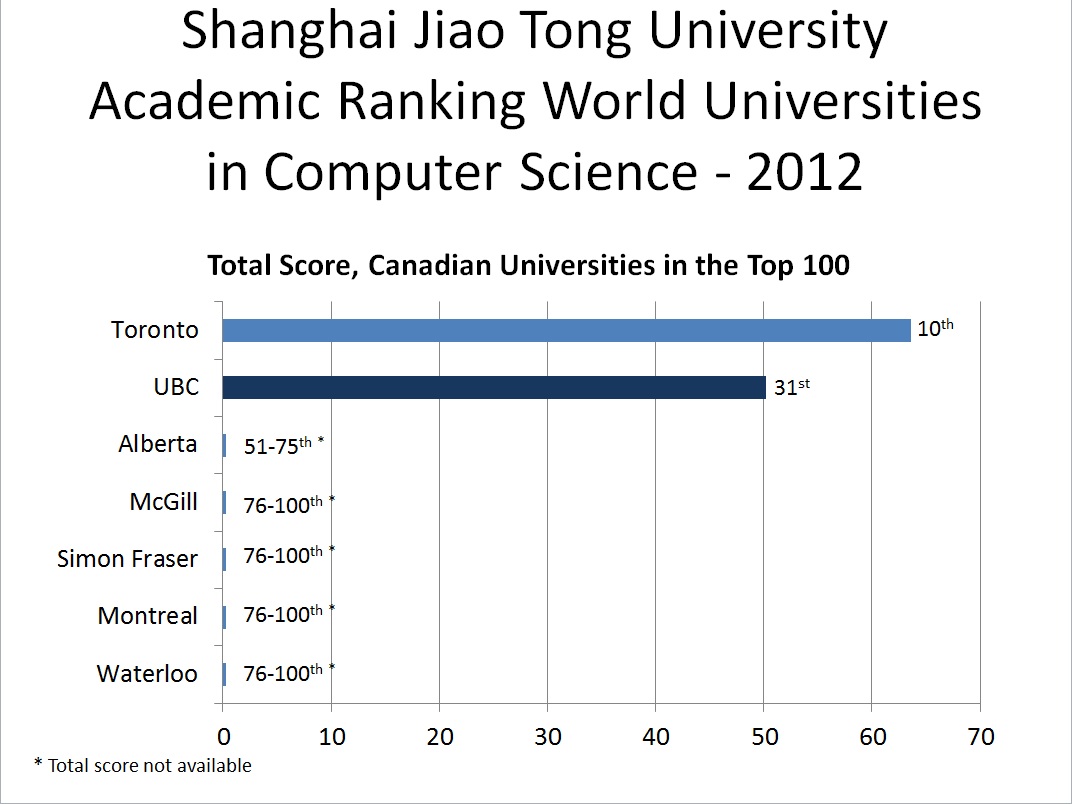

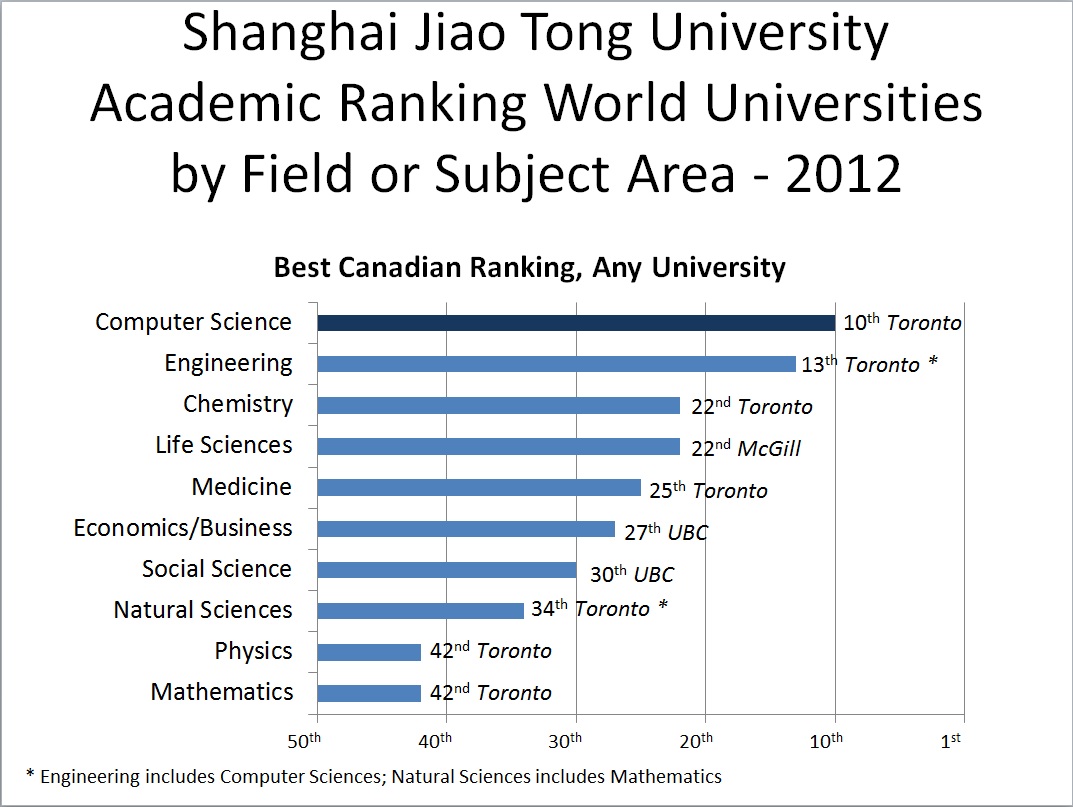

In the decade since, the social impact of computing has only accelerated, much of it due to things that happened here at the University of Toronto Computer Science department around the time of the 40th anniversary blog. I refer specifically to the rise of machine learning, in no small part due to the work of our faculty member Geoffrey Hinton and his doctoral students. The year before, Geoff and two of his students had written a groundbreaking research paper that constituted a breakthrough in image recognition, complete with working open-source software. In 2013, while we were writing the blog, their startup company, DNN Research, was acquired by Google, and Geoff went on to lead Google Brain, until he retired from Google in 2023. Ilya Sutskever, one of the two students, went on to lead the team at OpenAI that built the GPT models and the ChatGPT chatbot that stunned the world in 2022 and launched the Large Language Model AI revolution. In 2013, we already knew that Geoff's work would be transformational. I remember Kelly telling me he believed Geoff to be worthy of the Turing Award, the most prestigious award in Computer Science, and sure enough, Geoff won it in 2018. The social impact of AI is already considerable and it is only starting. The University of Toronto's Schwartz Reisman Institute for Technology and Society is dedicated to interdisciplinary research on the social impacts of AI, and Geoff Hinton himself is devoting his retirement to thinking about the implications of Artificial Intelligence for society and humanity in general.

It's interesting to look at what the book said about AI (it devotes 24 pages to the topic), what the 2013 blog said about AI, and what has happened since. The book was written in 1973, a half-decade after Stanley Kubrik's iconic 1968 movie, 2001: A Space Odyssey, which features HAL 9000, an intelligent computer, voiced by Douglas Rain. But computing at the time fell very far short of what Kubrik envisioned. Gotlieb & Borodin's position, five years later, on the feasibility of something like HAL 9000 was not optimistic:

In review, we have arrived at the following position. For problem solving and pattern recognition where intelligence, judgment and comprehensive knowledge are required, the results of even the best computer programs are far inferior to those achieved by humans (excepting cases where the task is a well-defined mathematical computation). Further, the differences between the mode of operation of computers and the modes in which humans operate (insofar as we can understand these latter) seem to be so great that for many tasks there is little or no prospect of achieving human performance within the foreseeable future. [p.159]But Gotlieb & Borodin, though implicitly dismissing the possibility of a HAL 9000, go on to say that "it is not possible to place bounds on how computers can be used even in the short term, because we must expect that the normal use of computers will be as a component of a [hu]man-machine combination. [pp.159-160]". Of this combination, they were not so willing to dismiss possibilities:

Whatever the shortcomings of computers now and in the future, we cannot take refuge in their limitations in potential. We must ask what we want to do with them and whether the purposes are socially desirable. Because once goals are agreed upon, the potentialities of [humans] using computers, though not unlimited, cannot be bounded in any way we can see now." [p.160]Fifty years later, social science research on how AI can benefit human work is focusing closely on this human-AI combination. A 2023 study of ChatGPT-4 by a team of social scientists studied work done by consultants assisted by, or not assisted by ChatGPT-4. Of their results, Ethan Mollick, one of the authors, explains that "of 18 different tasks selected to be realistic samples of the kinds of work done at an elite consulting company, consultants using ChatGPT-4 outperformed those who did not, by a lot. On every dimension. Every way we measured performance." [Mollick]. Evidently, Gotlieb & Borodin were correct when they wrote that the potential of the human-machine combination cannot so easily be bounded. We are only now beginning to see how unbounded it can be.

As for the possibility of a HAL 9000, as we saw, the book was not so sanguine. Neither was the 2013 40th anniversary blog. Hector Levesque, a leading AI researcher and contributor to the blog, wrote in his blog entry:

Levesque want on to outline the key scientific issue that at the time (2013) was yet to be solved:The general view of AI in 1973 was not so different from the one depicted in the movie "2001: A Space Odyssey", that is, that by the year 2001 or so, there would be computers intelligent enough to be able to converse naturally with people. Of course it did not turn out this way. Even now no computer can do this, and none are on the horizon.

Yet in the ten years since, this problem has been solved. Today, I posed Levesque's question to ChatGTP-4:However, it is useful to remember that this is an AI technology whose goal is not necessarily to understand the underpinnings of intelligent behaviour. Returning to English, for example, consider answering a question like this:

The ball crashed right through the table because it was made of styrofoam. What was made of styrofoam, the ball or the table?

Contrast that with this one:

The ball crashed right through the table because it was made of granite. What was made of granite, the ball or the table?

People (who know what styrofoam and granite are) can easily answer such questions, but it is far from clear how learning from big data would help. What seems to be at issue here is background knowledge: knowing some relevant properties of the materials in question, and being able to apply that knowledge to answer the question. Many other forms of intelligent behaviour seem to depend on background knowledge in just this way. But what is much less clear is how all this works: what it would take to make this type of knowledge processing work in a general way. At this point, forty years after the publication of the Gotlieb and Borodin book, the goal seems as elusive as ever. [Levesque]

Levesque can hardly be faulted for not knowing that his problem would be solved only a few years after he wrote what he did. While Hinton and his students' seminal work on image recognition had been done the year before, it was not yet clear that the ability to assign names to images based on their content would soon lead to machines being able to reason from context. A further invention was needed: transformers, invented at Google Brain in 2017. ("GPT-4" is an acronym: the "T" stands for "transformer".) Yet the book's implicit and Levesque's explicit pessimism on the possibility of a HAL 9000 now seems obsolete, and the near unbounded possibilities Gotlieb & Borodin envisioned for the human-machine combination apply also to autonomous machines. The impact of this on society will, no doubt, be significant. I expect the next fifty years of "social issues in computing" to be quite interesting. Mon 06 Nov 2023 16:48me: The ball crashed right through the table because it was made of styrofoam. What was made of styrofoam?

ChatGPT: The table was made of styrofoam, which is why the ball crashed through it.

me: The ball crashed right through the table because it was made of granite. What was made of granite?

ChatGPT: The ball was made of granite, which is why it crashed right through the table.

me: How did you know which thing was made of granite and which of styrofoam?

ChatGPT: I inferred the composition based on the context of the sentences. In the first sentence, "The ball crashed right through the table because it was made of styrofoam," the implication is that the table must have been weak or easily breakable for the ball to crash through it. Styrofoam is known for being light and not very durable, so it makes sense to conclude that the table was made of styrofoam.

In the second sentence, "The ball crashed right through the table because it was made of granite," the emphasis seems to be on the strength or force of the ball causing it to crash through the table. Granite is a very hard and heavy material, so it suggests that the ball was made of granite, giving it the necessary force to break through the table.

Research Computing at Computer Science Toronto and the Rise of AI

Photo by Johnny Guatto

Photo by Johnny Guatto

Until the beginning of 2009, the machine learning group used primarily Matlab on UNIX CPUs. In the 1990s, SGI and Sun multiprocessors were the dominant platforms. The whole department transitioned to x86 multiprocessor servers running Linux in the 2000's. In the late 2000s, Nivida invented CUDA, a way to use their GPUs for general-purpose computation rather than just graphics. By 2009, preliminary work elsewhere suggested that CUDA could be useful for machine learning, so we got our first Nvidia GPUs. First was a Tesla-brand server GPU, which at many thousands of dollars for a single GPU system was on the expensive side, which prevented us from buying many. But results were promising enough that we tried CUDA on Nvidia gaming GPUs - first the GTX 280 and 285 in 2009, then GTX 480 and 580 later. The fact that CUDA ran on gaming GPUs made it possible for us to buy multiple GPUs, rather than have researchers compete for time on scarce Tesla cards. Relu handled all the research computing for the ML group, sourcing GPUs and designing and building both workstation and server-class systems to hold them. Cooling was a real issue: GPUs, then and now, consume large amounts of power and run very hot, and Relu had to be quite creative with fans, airflow and power supplies to make everything work.

Happily, Relu's efforts were worth it: the move to GPUs resulted in 30x speedups for ML work in comparison to the multiprocessor CPUs of the time, and soon the entire group was doing machine learning on the GPU systems Relu built and ran for them. Their first major research breakthrough came quickly: in 2009, Hinton's student, George Dahl, demonstrated highly effective use of deep neural networks for acoustic speech recognition. But the general effectiveness of deep neural networks wasn't fully appreciated until 2012, when two of Hinton's students, Ilya Sutskever and Alex Krizhevsky, won the ImageNet Large Scale Visual Recognition Challenge using a deep neural network running on GTX 580 GPUs.

Geoff, Ilya and Alex' software won the ImageNet 2012 competition so convincingly that it created a furore in the AI research community. The software used was released as open source; it was called AlexNet after Alex Krizhevsky, its principal author. It allowed anyone with a suitable NVidia GPU to duplicate the results. Their work was described in a seminal 2012 paper, ImageNet Classification with Deep Convolutional Neural Networks. Geoff, Alex and Ilya's startup company, DNNresearch, was acquired by Google early the next year, and soon Google Translate and a number of other Google technologies were transformed by their machine learning techniques. Meanwhile, at the Imagenet competition, AlexNet remained undefeated for a remarkable three years, until it was finally beaten in 2015 by a research team from Microsoft Research Asia. Ilya left Google a few years after, to co-found OpenAI: as chief scientist there, Ilya leads the design of OpenAI's GPT and DALL-E models and related products, such as ChatGPT, that are highly impactful today.

Relu, in the meanwhile, while continuing to provide excellent research computing support for the AI group at our department, including Machine Learning, also spent a portion of his time from 2017 to 2022 designing and building the research computing infrastructure for the Vector Institute, an AI research institute in Toronto where Hinton serves as Chief Scientific Advisor. In addition to his support for the department's AI group, Relu continues to this day to provide computing support for Hinton's own ongoing AI research, including his Dec 2022 paper where he proposes a new Forward-Forward machine learning algorithm as an improved model for the way the human brain learns.

Tue 31 Oct 2023 09:11

Computing the Climate

I'm very glad I did. I am a computer scientist myself, whose career has been dedicated to building and running sometimes complex computer systems to support computer science teaching and research. I recognize in climate modelling a similar task at a much greater scale, working under a much more demanding "task-master": those systems need to be constantly measured against real data from our planet's diverse and highly complex geophysical processes, processes that drive its weather and climate. The amount of computing talent devoted to climate modelling is considerable, much more than I realized, and the work done so far is nothing short of remarkable. In his book, Steve outlines the history of climate modelling from very early work done on paper, to the use of the first electronic computers for weather prediction, to the highly complex and extremely compute-intensive climate models of today. Skillfully avoiding the pitfalls of not enough detail and too much, Steve effectively paints a picture of a very difficult scientific and software engineering task, and the programmers and scientists who rise to the challenge, building models that can simulate the earth's climate so accurately that viable scientific conclusions can be drawn from them with a high degree of confidence.