Generalizing from MIDI to Real-World Audio

To further explore the generalization capability of TimbreTron, we also tried one domain adaptation experiment where we took a CycleGAN trained on MIDI data, tested it on the real world test dataset, and synthesized audio with Wavenet trained on training real world data. To test generalization ability of TimbreTron, we conducted experiments where SpecGAN is trained on unpaired MIDI data, and then evaluated on real world data test set. The WaveNet syntheiszer here is trained on real world data.

Examples of Piano Samples from MIDI training Dataset

Examples of Harpsichord Samples from MIDI training Dataset

Samples Generated by TimbreTron trained on MIDI but tested on Real World test Dataset(Piano pieces played by Sageev)

1.Source Piano

2. Source Piano

3. Source Piano

1.Generated Harpsichord

2. Generated Harpsichord

3. Generated Harpsichord

4. Source Piano

5. Source Piano

4. Generated Harpsichord

5. Generated Harpsichord

As is shown from the corresponding audio examples in this section, the quality of generated audio is very good, with pitch preserved and timbre transferred. The ability to generalize from MIDI to real-world is interesting, in that it opens up the possibility of training on paired examples.

Comparing CQT and STFT

One of the key design choices in TimbreTron was whether to use an STFT or CQT representation. If the STFT

representation is used, there is an additional choice of whether to reconstruct using the Griffin-Lim

algorithm or the conditional WaveNet synthesizer. We found that the STFT-based pipeline had two problems:

1) it sometimes failed to correctly transfer low pitches, likely due to the STFT's poor frequency

resolution at low frequencies, as shown in the following samples:

Source Audio

Generated Sample from CQT TimbreTron

Generated Sample from STFT+GriffinLim TimbreTron

Generated Sample from STFT+WaveNet TimbreTron

2) it sometimes produced a random permutation of pitches. For example, we ran TimbreTron on a Bach piano sample played by a professional musician, as shown in the following samples:

Source Audio

Generated Sample from CQT TimbreTron

Generated Sample from STFT+GriffinLim TimbreTron

Generated Sample from STFT+WaveNet TimbreTron

The STFT TimbreTron transposed parts of the longer excerpt by different amounts, and for a few notes in particular, seemed to fail to transpose them by the same amount as it did the others. As was shown by the samples above, those problems were completely solved using CQT TimbreTron (likely due to the CQT's pitch equivariance and higher frequency resolution at low frequencies) Furthermore, both of these artifacts were presented in both STFT WaveNet and Griffin-Lim reconstruction methods, which suggests that the source of the artifacts are likely to be from the CycleGAN stage of the pipeline. This empirically demonstrates the effectiveness of the CQT representation compared with STFT.

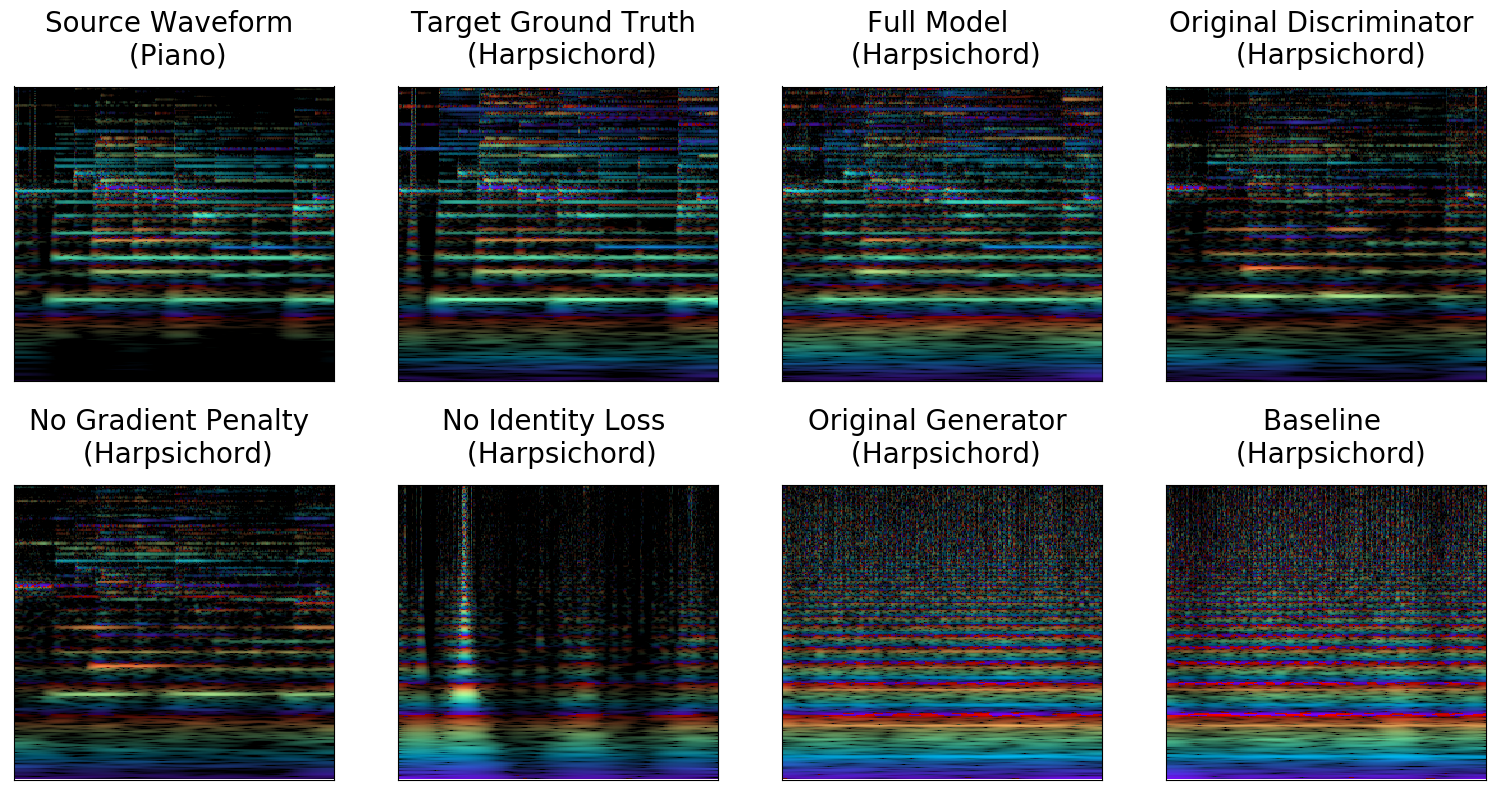

Ablation study for CycleGAN

To better understand and justify each modification we made to the original CycleGAN, we conducted an ablation study where we removed one modification at a time for MIDI CQT experiment. (We used MIDI data for ablation because the dataset has paired samples, which provides a convenient ground truth for transfer quality evaluation.)

Above are the rainbowgrams of the 4-second audio samples for the ablation study on MIDI test dataset.The source ground truth and the target ground truth come from a paired samples in the dataset."Full Model" corresponds to the output of our final TimbreTron, which is perceptually closest totarget ground truth. "Original discriminator" or "Original generator" corresponds to the TimbreTron pipeline with the discriminator or generator replaced by the original discriminator or generator in theoriginal CycleGAN. "No gradient penalty", "No identity loss", and "No data augmentation" refer to the full model without the corresponding modifications. "Baseline" is the original CycleGAN (Zhuet al., 2017).

Here are the corresponding audio samples

Source Waveform

Target Ground Truth

Full Model

No Gradient Penalty

No Identity Loss

Original Generator

No Data Augmentation

Original discriminator

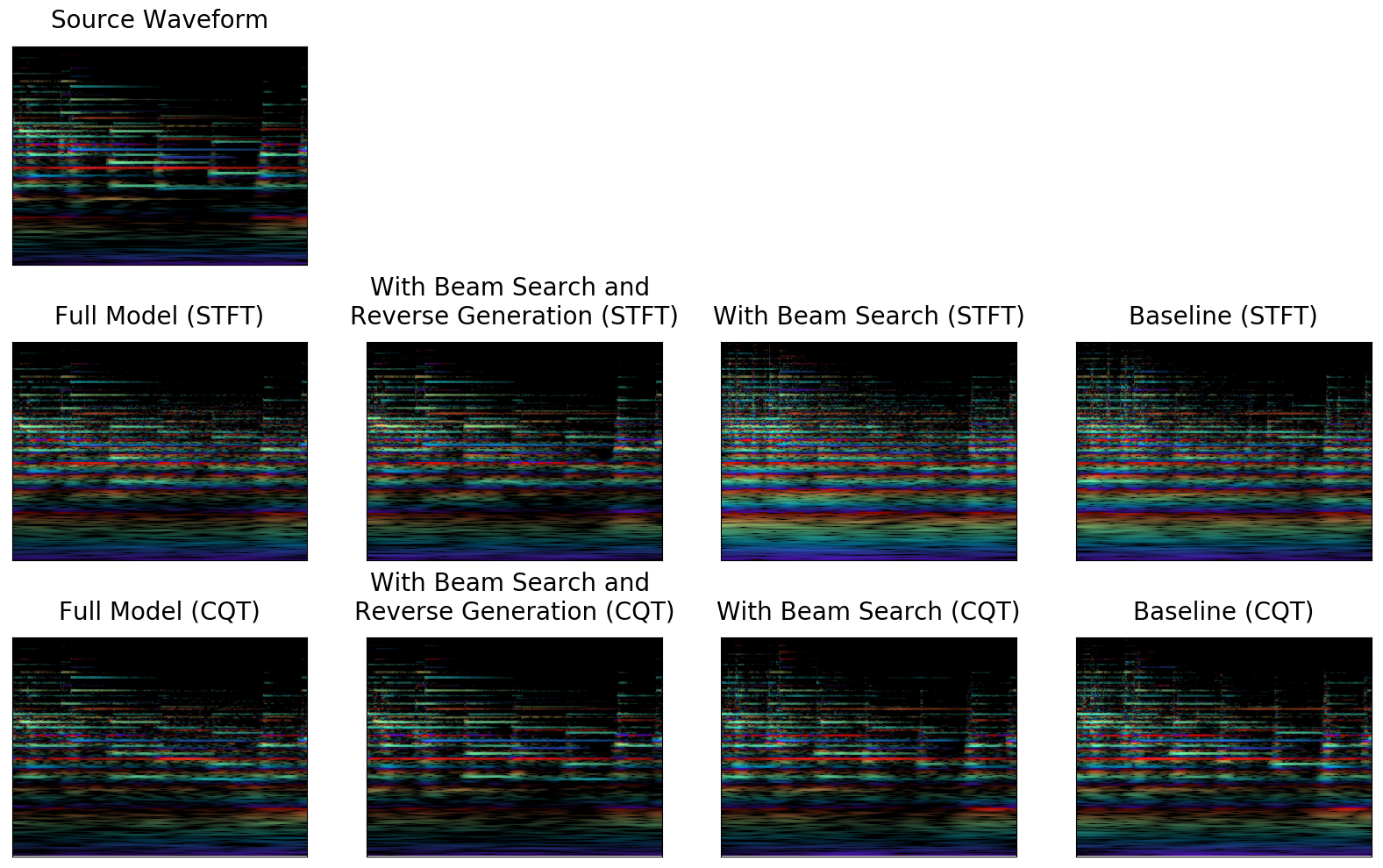

Ablation study for WaveNet

We also did ablation study for WaveNet.

The Source Waveform is from test set. All other audio samples are the WaveNet reconstruction(WaveNet(CQT(Waveform))) of the source ground truth with different versions (full and ablated) of our WaveNet. On the first row, "Full Model(STFT)" corresponds to the output of our final WaveNet architecture but trained with with STFT representation, Data augmentation was removed for the next one to the right, then next Reverse Generation was removed, then finally the Baseline is the original WaveNet (van den Oord et al., 2016a). On the second row, we did similar ablation of CQT trained models. The first one is our final model, which is perceptually closest to the source. Then a modification is removed in a similar fashion as the first row. As is shown by those ablated models, each time a modification is removed the audio quality gets worse.

Here are the corresponding audio samples

1.1 Source Waveform

2.1 Full Model(STFT)

2.2 W/o Data Aug.(STFT)

2.3 W/o Reverse Generation(STFT)

2.4 Baseline (STFT)

3.1 Full Model(CQT)

3.2 W/o Data Aug. (CQT)

3.3 W/o Reverse Generation (CQT)

3.4 Baseline (CQT)

WaveNet Reconstruction: WaveNet(CQT(source audio)))

To demonstrate the reconstruction quality of our WaveNet synthesizer without the influence of CycleGAN, we conducted experiments where the CQT is generated from source Audio and then reconstructed with WaveNet. We tired both with and without beam search. Here are some samples.

Source Audio | Reconstructed without Beam Search | Reconstructed with Beam Search

Website maintained by: Sheldon Huang / Last updated on: November 15, 2018: