Course description

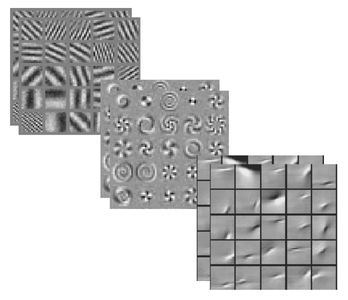

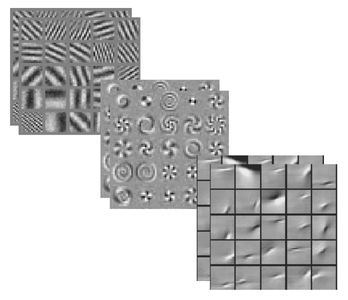

In many vision tasks, good performance is all about the right representation. Learning image features (also known as sparse coding, dictionary learning or deep learning) has therefore become a common approach in tasks like object recognition.

Although feature learning works well on static images, in a large number of computer vision tasks it is the relationship between images, not the content of any single image, that carries the relevant information. Examples include motion and action understanding, stereo, geometry, invariant recognition and optic flow.

Recently, a variety of higher-order sparse coding models have emerged that learn codes representing the relationships between images instead of just the content of single images.
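To make the difference between content and relationship concrete, here is a minimal 1-D sketch, not taken from the tutorial materials. It assumes the second "image" is a circular shift of the first and uses a single cosine/sine (quadrature) filter pair at the lowest frequency; the function names `quadrature_responses`, `relational_shift` and `circular_shift` are hypothetical. Each filter response on its own depends on what the signal contains, but products of responses taken across the two signals depend only on their phase difference, and therefore only on the shift.

```python
import math
import random


def quadrature_responses(signal, k=1):
    """Responses of a cosine/sine filter pair (a quadrature pair) at frequency k."""
    n = len(signal)
    a = sum(s * math.cos(2 * math.pi * k * t / n) for t, s in enumerate(signal))
    b = sum(s * math.sin(2 * math.pi * k * t / n) for t, s in enumerate(signal))
    return a, b


def relational_shift(x, y):
    """Estimate the circular shift d with y[t] == x[t - d], using only cross-products.

    For a pure shift, c and s below equal |X|^2 * cos(phase difference) and
    |X|^2 * sin(phase difference), so the content of x cancels out of the angle
    (assuming x has a non-zero component at frequency 1).
    """
    ax, bx = quadrature_responses(x)
    ay, by = quadrature_responses(y)
    c = ax * ay + bx * by  # "cosine" relational feature
    s = ax * by - bx * ay  # "sine" relational feature
    n = len(x)
    return round(math.atan2(s, c) * n / (2 * math.pi)) % n


def circular_shift(x, d):
    """Return y with y[t] == x[t - d] (indices taken modulo len(x))."""
    d %= len(x)
    return x[-d:] + x[:-d] if d else list(x)


# Two unrelated random signals, shifted by the same amount.
rng = random.Random(0)
x1 = [rng.gauss(0.0, 1.0) for _ in range(32)]
x2 = [rng.gauss(0.0, 1.0) for _ in range(32)]
print(relational_shift(x1, circular_shift(x1, 5)))  # 5
print(relational_shift(x2, circular_shift(x2, 5)))  # 5
```

Both calls recover the same shift even though `x1` and `x2` share no content, which is the kind of content-independent relational code the models above try to learn rather than hand-design.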

Interestingly, some of these approaches quickly became some of the best-performing methods on action and motion recognition tasks, and they are increasingly being used to model more difficult capabilities, such as learning invariances, tracking, or higher-level analogical reasoning.

Most of these models were introduced independently and from different perspectives, but they all build on the same core idea: sparse codes can act as "gates" that modulate the connections between the other variables in the model. This allows them to dynamically represent the changes inherent in an image sequence, turning model parameters into "stereo", "mapping" or "spatio-temporal" features. Higher-order feature learning models are closely related to the biological models of complex cells known as "energy models".
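The gating idea can be sketched in a few lines. The following is a minimal illustration, assuming the factored form used in factored gated Boltzmann machines and gated autoencoders: each factor f has an input filter `U[f]` and an output filter `V[f]`, and a matrix `W` pools the products of factor responses into mapping units. The function names and the random parameters are hypothetical, and no learning is shown. For fixed mapping units `m`, the prediction of y is linear in x, but the linear map itself changes with `m`: the codes modulate (gate) the connections between x and y.

```python
import math
import random


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def mapping_units(x, y, U, V, W):
    """Infer mapping units from an image pair (x, y).

    U[f], V[f]: input and output filters of factor f.
    W[k][f]:    weight from factor f to mapping unit k.
    Each factor multiplies its two filter responses, so the units
    respond to how x and y are related rather than to either image alone.
    """
    factors = [dot(u, x) * dot(v, y) for u, v in zip(U, V)]
    return [sigmoid(dot(w_k, factors)) for w_k in W]


def predict_output(x, m, U, V, W):
    """Predict y from x, with the mapping units m gating each factor."""
    n_factors, n_out = len(U), len(V[0])
    gates = [sum(W[k][f] * m[k] for k in range(len(m))) for f in range(n_factors)]
    fx = [dot(u, x) * g for u, g in zip(U, gates)]
    return [sum(V[f][j] * fx[f] for f in range(n_factors)) for j in range(n_out)]


# Hypothetical sizes and random parameters, for illustration only.
rng = random.Random(1)
n_in, n_out, n_factors, n_maps = 8, 8, 16, 4
U = [[rng.gauss(0, 0.3) for _ in range(n_in)] for _ in range(n_factors)]
V = [[rng.gauss(0, 0.3) for _ in range(n_out)] for _ in range(n_factors)]
W = [[rng.gauss(0, 0.3) for _ in range(n_factors)] for _ in range(n_maps)]
x = [rng.gauss(0, 1) for _ in range(n_in)]
y = [rng.gauss(0, 1) for _ in range(n_out)]
m = mapping_units(x, y, U, V, W)
y_hat = predict_output(x, m, U, V, W)
```

The link to energy models comes from square pooling: if a single filter [u_f; v_f] is applied to the concatenated pair, its squared response is (u_f·x + v_f·y)^2 = (u_f·x)^2 + (v_f·y)^2 + 2(u_f·x)(v_f·y), so it contains the same cross term that the factors above compute explicitly.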

The tutorial will show how higher-order features allow us to learn relations. It will discuss learning and inference methods and present recent applications from various domains. It will also discuss in some detail the connections to biological models of complex cells, as well as to multi-layer feature learning and deep learning methods.

Buzzwords

- Spatio-temporal features
- Deep learning for videos
- Energy models
- Complex cells
- Square pooling
- Quadrature features
- Bilinear sparse coding
- Conditional sparse coding
- Subspace models
- Gated Boltzmann machines
- mcRBM

Review paper

This tech report summarizes most of the topics discussed in the tutorial.

A shorter version of the paper analyzes the role of shared eigenspaces and energy models.

Pointers to code

Python code for Factored Gated Boltzmann machines is available here.

A Python GPU implementation of Factored Gated Boltzmann machines, using joint training, is available here.

Marc'Aurelio Ranzato has made code available for his implementation of the mean-covariance model.

A Python/Theano implementation of a gated autoencoder is available here.

Schedule and Slides

The tutorial will consist of four sections. Use the links below to download the slides.

Slides may change until the last minute.

Introduction [part 1]

Multiview feature learning [part 2]

Factorization, complex cells, shared eigenspaces [part 3]

Applications, examples, conclusions [part 4]

| Time and topic | Content | Slides |
|---|---|---|
| 13:30-14:00 Introduction | Feature learning; correspondence tasks in vision; from features to relational features | [part 1] |
| 14:00-15:00 Multiview feature learning | Brief sparse coding review; learning relations and the need for multiplicative interactions; bilinear models and gated sparse coding; inference and learning with multiple views | [part 2] |
| 15:00-15:30 Coffee break | | |
| 15:30-16:30 Factorization, eigenspaces and complex cells | Parameter factorization and square pooling; orthogonal warps and quadrature filters; subspace and energy models; learning more than two views | [part 3] |
| 16:30-17:00 Applications, tricks, outlook | Learning for action recognition; learning for stereo inference; learning invariant representations; extensions, outlook | [part 4] |

Organizer

Roland Memisevic

Roland Memisevic received his PhD in Computer Science from the University of Toronto, Canada, in 2008. He has held positions as a research intern at Microsoft Research, Redmond, as a research scientist at PNYLab LLC in Princeton, and as a postdoctoral fellow at the University of Toronto and at ETH Zurich, Switzerland. In 2011, he joined the Department of Computer Science at the University of Frankfurt, Germany, as an assistant professor. His research interests are in machine learning and computer vision, in particular unsupervised learning and feature learning.

Relevant Literature

[1] Christoph von der Malsburg. The correlation theory of brain function. Internal report 81-2, Max-Planck-Institut für Biophysikalische Chemie, Postfach 2841, 3400 Göttingen, FRG, 1981. Reprinted in E. Domany, J. L. van Hemmen, and K. Schulten, editors, Models of Neural Networks II, chapter 2, pages 95-119. Springer-Verlag, Berlin, 1994.

[2] Geoffrey F. Hinton. A parallel computation that assigns canonical object-based frames of reference. In Proceedings of the 7th International Joint Conference on Artificial Intelligence, Volume 2, pages 683-685, San Francisco, CA, USA, 1981. Morgan Kaufmann Publishers Inc.

[3] E. H. Adelson and J. R. Bergen. Spatiotemporal energy models for the perception of motion. J. Opt. Soc. Am. A, 2(2):284-299, 1985.

[4] C. Lee Giles and Tom Maxwell. Learning, invariance, and generalization in high-order neural networks. Appl. Opt., 26(23):4972-4978, December 1987.

[5] T. Sanger. Stereo disparity computation using Gabor filters. Biological Cybernetics, 59:405-418, 1988. doi:10.1007/BF00336114.

[6] A. D. Jepson and M. R. M. Jenkin. The fast computation of disparity from phase differences. In Proceedings CVPR '89, IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pages 398-403. IEEE, 1989.

[7] Izumi Ohzawa, Gregory C. DeAngelis, and Ralph D. Freeman. Stereoscopic Depth Discrimination in the Visual Cortex: Neurons Ideally Suited as Disparity Detectors. Science, 249(4972):1037-1041, August 1990.

[8] Yoan Shin and Joydeep Ghosh. The pi-sigma network: An efficient higher-order neural network for pattern classification and function approximation. In Proceedings of the International Joint Conference on Neural Networks, pages 13-18, 1991.

[9] Peter Földiák. Learning invariance from transformation sequences. Neural Computation, 3(2):194-200, 1991.

[10] Ning Qian. Computing stereo disparity and motion with known binocular cell properties. Neural Computation, 6:390-404, May 1994.

[11] P. A. Arndt, H. A. Mallot, and H. H. Bülthoff. Human stereovision without localized image features. Biological Cybernetics, 72(4):279-293, 1995.

[12] D. Fleet, H. Wagner, and D. Heeger. Neural encoding of binocular disparity: Energy models, position shifts and phase shifts. Vision Research, 36(12):1839-1857, June 1996.

[13] Rajesh Rao and Daniel Ruderman. Learning Lie groups for invariant visual perception. In Advances in Neural Information Processing Systems 11. MIT Press, 1999.

[14] Joshua Tenenbaum and William Freeman. Separating style and content with bilinear models. Neural Computation, 12(6):1247-1283, 2000.

[15] Aapo Hyvärinen and Patrik Hoyer. Emergence of phase- and shift-invariant features by decomposition of natural images into independent feature subspaces. Neural Computation, 12:1705-1720, July 2000.

[16] C. Schuldt, I. Laptev, and B. Caputo. Recognizing human actions: A local SVM approach. In International Conference on Pattern Recognition, 2004.

[17] David Grimes and Rajesh Rao. Bilinear sparse coding for invariant vision. Neural Computation, 17(1):47-73, 2005.

[18] Bruno Olshausen, Charles Cadieu, Jack Culpepper, and David Warland. Bilinear models of natural images. In SPIE Proceedings: Human Vision and Electronic Imaging XII, San Jose, 2007.

[19] Xu Miao and Rajesh Rao. Learning the Lie groups of visual invariance. Neural Computation, 19(10):2665-2693, 2007.

[20] Roland Memisevic and Geoffrey Hinton. Unsupervised learning of image transformations. In Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2007.

[21] M. Bethge, S. Gerwinn, and J. H. Macke. Unsupervised learning of a steerable basis for invariant image representations. In B. E. Rogowitz, editor, Human Vision and Electronic Imaging XII, pages 1-12, Bellingham, WA, USA, February 2007. Max-Planck-Gesellschaft, SPIE.

[22] Marcin Marszalek, Ivan Laptev, and Cordelia Schmid. Actions in context. In IEEE Conference on Computer Vision and Pattern Recognition, 2009.

[23] A. Hyvärinen, J. Hurri, and P. O. Hoyer. Natural Image Statistics: A Probabilistic Approach to Early Computational Vision. Springer-Verlag, 2009.

[24] Graham Taylor and Geoffrey Hinton. Factored conditional restricted Boltzmann machines for modeling motion style. In Léon Bottou and Michael Littman, editors, Proceedings of the 26th International Conference on Machine Learning, pages 1025-1032, Montreal, June 2009. Omnipress.

[25] Graham W. Taylor, Rob Fergus, Yann LeCun, and Christoph Bregler. Convolutional learning of spatio-temporal features. In Proc. European Conference on Computer Vision (ECCV'10), 2010.

[26] Hugo Larochelle and Geoffrey Hinton. Learning to combine foveal glimpses with a third-order Boltzmann machine. In Advances in Neural Information Processing Systems 23, pages 1243-1251, 2010.

[27] George E. Dahl, Marc'Aurelio Ranzato, Abdel-rahman Mohamed, and Geoffrey E. Hinton. Phone recognition with the mean-covariance restricted Boltzmann machine. In Advances in Neural Information Processing Systems 23, pages 469-477, 2010.

[28] Gary B. Huang and Erik Learned-Miller. Learning class-specific image transformations with higher-order Boltzmann machines. In Workshop on Structured Models in Computer Vision at IEEE CVPR, 2010.

[29] Roland Memisevic and Geoffrey E. Hinton. Learning to represent spatial transformations with factored higher-order Boltzmann machines. Neural Computation, 22(6):1473-1492, 2010.

[30] Marc'Aurelio Ranzato and Geoffrey E. Hinton. Modeling Pixel Means and Covariances Using Factorized Third-Order Boltzmann Machines. In Computer Vision and Pattern Recognition, pages 2551-2558, 2010.

[31] Roland Memisevic. Gradient-based learning of higher-order image features. In Proceedings of the International Conference on Computer Vision (ICCV), 2011.

[32] Q. V. Le, W. Y. Zou, S. Y. Yeung, and A. Y. Ng. Learning hierarchical spatio-temporal features for action recognition with independent subspace analysis. In Proc. CVPR. IEEE, 2011.

[33] Ching Ming Wang, Jascha Sohl-Dickstein, Ivana Tosic, and Bruno A. Olshausen. Lie group transformation models for predictive video coding. In DCC, pages 83-92, 2011.

[34] J. Susskind, R. Memisevic, G. Hinton, and M. Pollefeys. Modeling the joint density of two images under a variety of transformations. In Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2011.

[35] I. Tosic and P. Frossard. Dictionary learning in stereo imaging. IEEE Transactions on Image Processing, 20(4), 2011.