In this chapter we expand on our discussion of interpretation by examining several dispatch techniques in detail. In cha:Background we defined dispatch as the mechanism used by a high level language virtual machine to transfer control from the code to emulate one virtual instruction to the next. This chapter has the flavor of a tutorial as we trace the evolution of dispatch techniques from the simplest to the highest performing.

Although in most cases we will give a small C language example to illustrate the way the interpreter is structured, this should not be taken to mean that all interpreters are hand written C programs. Precisely because so many dispatch mechanisms exist, some researchers argue that the interpreter portion of a virtual machine should be generated from some more generic representation [#!ertl:vmgen!#,#!sugalski:parrot-impl!#].

sec:Switch-Dispatch describes switch dispatch, the simplest dispatch technique. sec:Direct-Call-Threading introduces call threading, which figures prominently in our work. sec:direct-threading describes direct threading, a common technique that suffers from branch misprediction problems. sec:Branch-Predictors briefly describes branch prediction resources in modern processors. sec:Context-Problem defines the context problem, our term for the challenge to branch prediction posed by interpretation. Subroutine threading is introduced in sec:Subroutine-Threading. Finally, sec:Optimizing-Dispatch describes related work that eliminates dispatch overhead by inlining or replicating virtual instruction bodies.

Switch dispatch, perhaps the simplest dispatch mechanism, is illustrated

by Figure ![]() . Although the persistent representation

of a Java class is standards-defined, the representation of a loaded

virtual program is up to the VM designer. In this case we show how

an interpreter might choose a representation that is less compact

than possible for simplicity and speed of interpretation. In the figure,

the loaded representation appears on the bottom left. Each virtual

opcode is represented as a full word token even though a byte would

suffice. Arguments, for those virtual instructions that take them,

are also stored in full words following the opcode. This avoids any

alignment issues on machines that penalize unaligned loads and stores.

. Although the persistent representation

of a Java class is standards-defined, the representation of a loaded

virtual program is up to the VM designer. In this case we show how

an interpreter might choose a representation that is less compact

than possible for simplicity and speed of interpretation. In the figure,

the loaded representation appears on the bottom left. Each virtual

opcode is represented as a full word token even though a byte would

suffice. Arguments, for those virtual instructions that take them,

are also stored in full words following the opcode. This avoids any

alignment issues on machines that penalize unaligned loads and stores.

Figure ![]() illustrates the situation just

before the statement c=a+b+1 is executed. The box on the

right of the figure represents the C implementation of the interpreter.

The vPC points to the word in the loaded representation corresponding

to the first instance of iload. The interpreter works by

executing one iteration of the dispatch loop for each virtual instruction

it executes, switching on the token representing each virtual instruction.

Each virtual instruction is implemented by a case in the

switch statement. Virtual instruction bodies are simply the

compiler-generated code for each case.

illustrates the situation just

before the statement c=a+b+1 is executed. The box on the

right of the figure represents the C implementation of the interpreter.

The vPC points to the word in the loaded representation corresponding

to the first instance of iload. The interpreter works by

executing one iteration of the dispatch loop for each virtual instruction

it executes, switching on the token representing each virtual instruction.

Each virtual instruction is implemented by a case in the

switch statement. Virtual instruction bodies are simply the

compiler-generated code for each case.

Every instance of a virtual instruction consumes at least one word in the internal representation, namely the word occupied by the virtual opcode. Virtual instructions that take operands are longer. This motivates the strategy used to maintain the vPC. The dispatch loop always bumps the vPC to account for the opcode and bodies that consume operands bump the vPC further, one word per operand.

Although no virtual branch instructions are illustrated in the figure, they operate by assigning a new value to the vPC for taken branches.

Figure:

A switch interpreter loads each virtual instruction as a virtual

opcode, or token, corresponding to the case of the switch statement

that implements it. Virtual instructions that take immediate operands,

like iconst, must fetch them from the vPC and adjust

the vPC past the operand. Virtual instructions which do not

need operands, like iadd, do not need to adjust the vPC.

![\includegraphics[width=1\columnwidth]{figs/javaSwitch}% WIDTH=556 HEIGHT=391](img13.png)

A switch interpreter is relatively slow due to the overhead of the dispatch loop and the switch. Despite this, switch interpreters are commonly used in production (e.g. in the JavaScript and Python interpreters). Presumably this is because switch dispatch can be implemented in ANSI standard C and so it is very portable.

Another portable way to organize an interpreter is to write each virtual

instruction as a function and dispatch it via a function pointer.

Figure ![]() shows each virtual instruction

body implemented as a C function. While the loaded representation

used by the switch interpreter represents the opcode of each virtual

instruction as a token, direct call threading represents each virtual

opcode as the address of the function that implements it. Thus, by

treating the vPC as a function pointer, a direct call-threaded

interpreter can execute each instruction in turn.

shows each virtual instruction

body implemented as a C function. While the loaded representation

used by the switch interpreter represents the opcode of each virtual

instruction as a token, direct call threading represents each virtual

opcode as the address of the function that implements it. Thus, by

treating the vPC as a function pointer, a direct call-threaded

interpreter can execute each instruction in turn.

In the figure, the vPC is a static variable which means the

interp function as shown is not re-entrant. Our example aims

only to convey the flavor of call threading. In Chapter ![]() we will show how a more complex approach to direct call threading

can perform about as well as switch threading (described in Section

we will show how a more complex approach to direct call threading

can perform about as well as switch threading (described in Section ![]() ).

).

A variation of this technique is described by Ertl [#!ertl95pldi!#]. For historical reasons the name ``direct'' is given to interpreters which store the address of the virtual instruction bodies in the loaded representation. Presumably this is because they can ``directly'' obtain the address of a body, rather than using a mapping table (or switch statement) to convert a virtual opcode to the address of the body. However, the name can be confusing as the actual machine instructions used for dispatch are indirect branches. (In this case, an indirect call).

Next we will describe direct threading, perhaps the most well-known high performance dispatch technique.

Figure:

A direct call-threaded interpreter packages each virtual instruction

body as a function. The shaded box highlights the dispatch loop showing

how virtual instructions are dispatched through a function pointer.

Direct call threading requires the loaded representation of the program

to point to the address of the function implementing each virtual

instruction.

![\begin{centering}\includegraphics[width=0.8\columnwidth,keepaspectratio]{figs/javaDirectCallThreadingExt}\par

\end{centering}% WIDTH=445 HEIGHT=213](img14.png)

Figure:

Direct-threaded Interpreter showing how Java Source code compiled

to Java bytecode is loaded into the Direct Threading Table (DTT).

The virtual instruction bodies are written in a single C function,

each identified by a separate label. The double-ampersand (&&)

shown in the DTT is gcc syntax for the address of a label.

![\begin{centering}\includegraphics[width=1\textwidth,keepaspectratio]{figs/javaDirectThread}\par

\end{centering}% WIDTH=554 HEIGHT=313](img15.png)

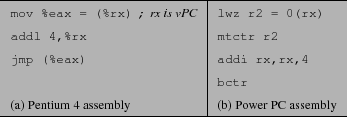

Figure:

Machine instructions used for direct dispatch. On both platforms

assume that some general purpose register, rx, has been dedicated

for the vPC. Note that on the PowerPC indirect branches are

two part instructions that first load the ctr register and

then branch to its contents.

Like in direct call threading, a virtual program is loaded into a direct-threaded interpreter as a list of body addresses and operands. We will refer to the list as the Direct Threading Table, or DTT, and refer to locations in the DTT as slots.

Interpretation begins by initializing the vPC to the first

slot in the DTT, and then jumping to the address stored there. A direct-threaded

interpreter does not need a dispatch loop like direct call threading

or switch dispatch. Instead, as can be seen in Figure ![]() ,

each body ends with goto *vPC++, which transfers control

to the next instruction.

,

each body ends with goto *vPC++, which transfers control

to the next instruction.

In C, bodies are identified by a label. Common C language extensions permit the address of this label to be taken, which is used when initializing the DTT. GNU's gcc, as well as C compilers produced by Intel, IBM and Sun Microsystems all support the label-as-value and computed goto extensions, making direct threading quite portable.

Direct threading requires fewer instructions and is faster than direct

call threading or switch dispatch. Assembler for the dispatch sequence

is shown in Figure ![]() . When executing the indirect

branch in Figure

. When executing the indirect

branch in Figure ![]() (a) the Pentium 4 will speculatively

dispatch instructions using a predicted target address. The PowerPC

uses a different strategy for indirect branches, as shown in Figure

(a) the Pentium 4 will speculatively

dispatch instructions using a predicted target address. The PowerPC

uses a different strategy for indirect branches, as shown in Figure ![]() (b).

First the target address is loaded into a register, and then a branch

is executed to this register address. The PowerPC stalls until the

target address is known

(b).

First the target address is loaded into a register, and then a branch

is executed to this register address. The PowerPC stalls until the

target address is known![]() , although other instructions may be scheduled between the load and

the branch (like the addi in Figure

, although other instructions may be scheduled between the load and

the branch (like the addi in Figure ![]() )

to reduce or eliminate these stalls.

)

to reduce or eliminate these stalls.

Mispredicted branches pose a serious challenge to modern processors because they threaten to starve the processor of instructions. The problem is that before the destination of the branch is known the execution of the pipeline may run dry. To perform at full speed, modern CPUs need to keep their pipelines full by correctly predicting branch targets.

Ertl and Gregg point out that the assumptions underlying the design

of indirect branch predictors are usually wrong for direct-threaded

interpreters [#!ertl:dispatch-arch!#,#!ertl:vm-branch-pldi!#]. In a

direct-threaded interpreter, there is only one indirect jump

instruction per virtual instruction. For example, in the fragment

of virtual code illustrated in Figure ![]() ,

there are two instances of iload followed by an instance

of iconst. The indirect dispatch branch at the end of the

iload body will execute twice. The first time, in the context

of the first instance of iload, it will branch back to the

entry point of the the iload body, whereas in the context

of the second iload it will branch to iconst. Thus,

the hardware will likely mispredict the second execution of the dispatch

branch.

,

there are two instances of iload followed by an instance

of iconst. The indirect dispatch branch at the end of the

iload body will execute twice. The first time, in the context

of the first instance of iload, it will branch back to the

entry point of the the iload body, whereas in the context

of the second iload it will branch to iconst. Thus,

the hardware will likely mispredict the second execution of the dispatch

branch.

The performance impact of this can be hard to predict. For instance, if a tight loop in a virtual program happens to contain a sequence of unique virtual instructions, the BTB may successfully predict each one. On the other hand, if the sequence contains duplicate virtual instructions, the BTB may mispredict all of them.

This problem is even worse for direct call threading and switch dispatch. For these techniques there is only one dispatch branch and so all dispatches share the same BTB entry. Direct call threading will mispredict all dispatches except when the same virtual instruction body is dispatched multiple times consecutively.

Another perspective is that the destination of the indirect dispatch branch is unpredictable because its destination is not correlated with the hardware pc. Instead, its destination is correlated to the vPC. We refer to this lack of correlation between the hardware pc and vPC as the context problem. We choose the term context following its use in context sensitive inlining [#!Grove_Chambers_2002!#] because in both cases the context of shared code (in their case methods, in our case virtual instruction bodies) is important to consider.

Forth is organized as a collection of callable bodies of code called words. Words can be user defined or built into the system. Meaningful Forth words are composed of built-in and user-defined words and execute by dispatching their constituent words in turn. A Forth implementation is said to be subroutine-threaded if a word is compiled to a sequence of native call instructions, one call for each constituent word. Since a built-in Forth word is loosely analogous to a callable virtual instruction body, we could apply subroutine threading at load time to a language virtual machine that implements virtual instruction bodies as callable. In such a system the loaded representation of a virtual method would include a sequence of generated native call instructions, one to dispatch each virtual instruction in the virtual method.

Curley [#!curley:subr1!#,#!curley:subr2!#] describes a subroutine-threaded

Forth for the 68000 CPU. He improves the resulting code by inlining

small opcode bodies, and converts virtual branch opcodes to single

native branch instructions. He credits Charles Moore, the inventor

of Forth, with discovering these ideas much earlier. Outside of Forth,

there is little thorough literature on subroutine threading. In particular,

few authors address the problem of where to store virtual instruction

operands. In Section ![]() , we document how operands

are handled in our implementation of subroutine threading.

, we document how operands

are handled in our implementation of subroutine threading.

The choice of optimal dispatch technique depends on the hardware platform,

because dispatch is highly dependent on micro-architectural features.

On earlier hardware, call and return were both expensive

and hence subroutine threading required two costly branches, versus

one in the case of direct threading. Rodriguez [#!rodriguez:forth!#]

presents the trade offs for various dispatch types on several 8 and

16-bit CPUs. For example, he finds direct threading is faster than

subroutine threading on a 6809 CPU, because the jsr and ret

instruction require extra cycles to push and pop the return address

stack. On the other hand, Curley found subroutine threading faster

on the 68000 [#!curley:subr2!#]. Due to return branch prediction

hardware deployed in modern general purpose processors, like the Pentium

and PowerPC, the cost of a return is much lower than the cost of a

mispredicted indirect branch. In Chapter ![]() we quantify this effect on a few modern CPUs.

we quantify this effect on a few modern CPUs.

Much of the work on interpreters has focused on how to optimize dispatch. In general dispatch optimizations can be divided into two broad classes: those which refine the dispatch itself, and those which alter the bodies so that they are more efficient or simply require fewer dispatches. Switch dispatch and direct threading belong to the first class, as does subroutine threading. Kogge remains a definitive description of many threaded code dispatch techniques [#!kogge:threaded!#]. Next, we will discuss superinstruction formation and replication, which are in the second class.

Superinstructions reduce the number of dispatches. Consider the code to add a constant integer to a variable. This may require loading the variable onto the expression stack, loading the constant, adding, and storing back to the variable. VM designers can instead extend the virtual instruction set with a single superinstruction that performs the work of all four virtual instructions. This technique is limited, however, because the virtual instruction encoding (often one byte per opcode) may allow only a limited number of instructions, and the number of desirable superinstructions grows large in the number of subsumed atomic instructions. Furthermore, the optimal superinstruction set may change based on the workload. One approach uses profile-feedback to select and create the superinstructions statically (when the interpreter is compiled [#!ertl:vmgen!#]).

Piumarta and Riccardi [#!piumarta:inlining!#] present selective

inlining![]() . Selective inlining constructs superinstructions when the virtual

program is loaded. They are created in a relatively portable way,

by memcpy'ing the compiled code in the bodies, again using

GNU C labels-as-values. The idea is to construct (new) super instruction

bodies by concatenating the virtual bodies of the virtual instructions

that make them up. This works only when the code in the virtual bodies

is position independent, meaning that the destination of any

relative branch in a body is in that body also. Typically this excludes

bodies making C function calls. Like us, Piumarta and Riccardi applied

selective inlining to OCaml, and reported significant speedup on several

micro benchmarks. As we discuss in Section

. Selective inlining constructs superinstructions when the virtual

program is loaded. They are created in a relatively portable way,

by memcpy'ing the compiled code in the bodies, again using

GNU C labels-as-values. The idea is to construct (new) super instruction

bodies by concatenating the virtual bodies of the virtual instructions

that make them up. This works only when the code in the virtual bodies

is position independent, meaning that the destination of any

relative branch in a body is in that body also. Typically this excludes

bodies making C function calls. Like us, Piumarta and Riccardi applied

selective inlining to OCaml, and reported significant speedup on several

micro benchmarks. As we discuss in Section ![]() , our

technique is separate from, but supports and indeed facilitates, inlining

optimizations.

, our

technique is separate from, but supports and indeed facilitates, inlining

optimizations.

Languages, like Java, that require runtime binding complicate the implementation of selective inlining significantly because at load time little is known about the arguments of many virtual instructions. When a Java method is first loaded some arguments are left unresolved. For instance, the argument of an invokevirtual instruction will initially be a string naming the callee. The argument will be re-written the first time the virtual instruction executes to point to a descriptor of the now resolved callee. At the same time, the virtual opcode is rewritten so that subsequently a ``quick'' form of the virtual instruction body will be dispatched. In Java, if resolution fails, the instruction throws an exception and is not rewritten. The process of rewriting the arguments, and especially the need to point to a new virtual instruction body, complicates superinstruction formation. Gagnon describes a technique that deals with this additional complexity which he implemented in SableVM [#!gagnon:inline-thread-prep-seq!#].

Selective inlining requires that the superinstruction starts at a virtual basic block, and ends at or before the end of the block. Ertl and Gregg's dynamic superinstructions [#!ertl:vm-branch-pldi!#] also use memcpy, but are applied to effect a simple native compilation by inlining bodies for nearly every virtual instruction. They show how to avoid the basic block constraints, so dispatch to interpreter code is only required for virtual branches and unrelocatable bodies. Vitale and Abdelrahman describe a technique called catenation, which (i) patches Sparc native code so that all implementations can be moved, (ii) specializes operands, and (iii) converts virtual branches to native branches, thereby eliminating the virtual program counter [#!vitale:catenation!#].

Replication -- creating multiple copies of the opcode body--decreases the number of contexts in which it is executed, and hence increases the chances of successfully predicting the successor [#!ertl:vm-branch-pldi!#]. Replication combined with inlining opcode bodies reduces the number of dispatches, and therefore, the average dispatch overhead [#!piumarta:inlining!#]. In the extreme, one could create a copy for each instruction, eliminating misprediction entirely. This technique results in significant code growth, which may [#!vitale:catenation!#] or may not [#!ertl:vm-branch-pldi!#] cause cache misses.

In summary, branch mispredictions caused by the context problem limit the performance of a direct-threaded interpreter on a modern processor. We have described several recent dispatch optimization techniques. Some of the techniques improve performance of each dispatch by reducing the number of contexts in which a body is executed. Others reduce the number of dispatches, possibly to zero.

In the next chapter we will describe a new technique for interpretation that deals with the context problem. Our technique, context threading, performs well compared to the interpretation techniques we have described in this chapter.