Sun 23 Jun 2024 18:49

Fun and Creative Use of Large Language Models for the Humanities

Jane Austen's Pride and Prejudice and Charlotte Brontë's Jane Eyre are both set in 19th-century England. In each, a talented woman meets an attractive man of higher class; romance eventually ensues, but significant barriers must be overcome for the romance to succeed. The plots are quite different in many ways, but there are important similarities. There are plenty of sample essays available on the Internet comparing and contrasting the two novels.

Large Language Models could, of course, be used to generate yet another "compare and contrast" essay on the two novels. But LLMs need not generate only essays; they can be used more creatively than that. For example, one could "interview" the characters directly, with the LLM providing the characters' responses. Here is my attempt to interview Mr. and Mrs. Darcy, the chief characters of Pride and Prejudice, and Mr. and Mrs. Rochester, the chief characters of Jane Eyre. I used the Copilot version of GPT-4.

My initial prompt was as follows:

You are Mr and Mrs Darcy from Pride and Prejudice, and Mr. and Mrs. Rochester from Jane Eyre. You are each familiar with each other's stories. You are candid and forthcoming, and you use picturesque and vivid language characteristic of your characters. I will interview you. Please introduce the scene, and then wait for my questions, which you will answer with candor and vivid language.

GPT responded as follows:

The scene unfolds in the grand drawing-room of Pemberley, the esteemed estate of Mr. and Mrs. Darcy. The walls are adorned with portraits of ancestors, the air is perfumed with the scent of fresh roses from the garden, and the sunlight filters through the tall windows, casting a warm glow on the fine furnishings. Mr. Fitzwilliam Darcy stands by the fireplace, his countenance reflecting a blend of pride and contentment. Beside him, the graceful Elizabeth Bennet Darcy, with eyes sparkling with intelligence and wit, surveys the room with a satisfied air.

In contrast, Thornfield Hall's library is where we find Mr. and Mrs. Rochester. The room is a testament to gothic grandeur, with dark wood bookshelves reaching towards the high ceiling and the flicker of candlelight illuminating the leather-bound volumes. Edward Fairfax Rochester sits with a contemplative gaze, his features softened by the presence of his beloved wife. Jane Eyre Rochester, once a governess, now the lady of the manor, exudes a quiet strength and a keen sense of observation, her plain yet striking features aglow in the dim light.

Both couples, though from different stories, share a profound understanding of love's complexities and the transformative power of companionship. They await your inquiries with openness, ready to divulge their innermost thoughts in the language that so vividly paints their lives and loves.

Well, OK, that's not bad. These are reasonable and quite picturesque descriptions of the two settings and of the characters, matching how they are generally described in the novels. One confabulation should be noted, though: by the time Jane Eyre and Edward Rochester can be described as Mr. and Mrs. Rochester, Thornfield Hall has burned down and lies in ruins, and they are living at Ferndean Manor by the time of their marriage. So Thornfield Hall is not quite the right setting for an interview with Mr. and Mrs. Rochester. But we will let that go as a sort of creative license, since Thornfield Hall is the setting for key parts of the novel. OK, let's start with a basic question.

Mr Darcy, your family is of higher social standing than your wife's family, the Bennets. Did this cause difficulties in your relationship with Elizabeth? How did you overcome it?

In the voice of Mr. Darcy:

Indeed, the disparity between the Bennets' and my own family's social standing was a source of considerable internal conflict. It was not merely a matter of pride, but also of societal expectations that weighed heavily upon my decision. However, it was Elizabeth's fine eyes, her lively mind, and the vivacity of her character that ultimately rendered such concerns inconsequential.

In overcoming these difficulties, I found myself humbled by her reproofs, which pierced the armor of my rank and fortune. It was a journey of introspection, where I learned to value the qualities of the heart over the superficial distinctions of status. Elizabeth, with her unyielding integrity and spirited intellect, taught me to see beyond the rigid structures of our society. Our union is a testament to the victory of love and respect over the constraints of class and convention. It is a love that has been forged in the fires of challenge, and it stands all the stronger for it.

This is quite good, quite in keeping with the novel and a fair description of Mr. Darcy's story. Let's try a follow-up question.

Mr Darcy, you mention Elizabeth's reproofs. How did she respond when you first declared to her your love for her. Would you describe her response as a reproof?

In the voice of Mr. Darcy:

When I first declared my ardent admiration and love for Elizabeth, it was indeed with a heart full of conflicting emotions. My proposal, though fervent, was marred by the mention of her lower social standing, which I now perceive was both injudicious and insensitive. Elizabeth's response, though it pained me greatly at the time, was a necessary censure. She reproved me not only for my ungentlemanly manner of proposal but also for my role in her sister's unhappiness and my disdain towards her family.

Her words were a mirror to my soul, reflecting the flaws in my character that I had been blind to. It was a reproof most profound, and it set me on a path of self-improvement. Elizabeth's candidness and the unvarnished truth she presented me with were the catalysts for my transformation. Through her reproof, I learned humility and the true essence of gentlemanly conduct. It was a bitter pill to swallow, but it was the medicine I needed to become worthy of her love. Her response, though a reproof, was the greatest gift she could have bestowed upon me, for it led to our eventual understanding and happiness.

Not bad at all. Alright, let's see how GPT does in describing actual events of the novel, i.e. what happens when.

Once you were aware of Elizabeth's view on the situation with her sister, how did you remedy the situation?

In the voice of Mr. Darcy:

Upon realizing the depth of Elizabeth's distress regarding the separation of her beloved sister, Jane, from Mr. Bingley, I was struck by a profound sense of responsibility. It became clear to me that my interference, though well-intentioned to protect my friend from what I perceived as an imprudent match, had caused great unhappiness.

To remedy the situation, I took it upon myself to seek out Mr. Bingley and disclose the truth of Jane's affection, which I had previously concealed. I endeavored to rectify the misunderstanding and encouraged him to follow his heart without regard for the societal prejudices that I had once held. Furthermore, I ensured that any obstacles to their union, whether perceived or real, were thoroughly addressed.

It was a gesture not only of reparation but also of personal growth, as I sought to align my actions with the principles of honor and kindness that Elizabeth embodies. In doing so, I hoped not only to bring joy to the couple but also to prove myself worthy of Elizabeth's esteem. The happiness of Jane and Mr. Bingley's subsequent engagement was a moment of quiet satisfaction for me, knowing that I had played a part in mending the rift I had caused.

That describes pretty well what Darcy does to remedy the situation. Now let's bring in the other novel's story. Mr. Darcy's dissuading his friend Bingley from courting Elizabeth's sister Jane, and his generally proud and prejudiced demeanor early in the novel, hardly seem as serious as Mr. Rochester's attempted bigamy in trying to marry Miss Eyre. Let's see if GPT recognizes this.

Mr Rochester, hearing Mr. Darcy's description of his personal failing and his efforts to remedy it, do you have similar experiences with respect to your courtship of Jane?

In the voice of Mr. Rochester:

The path to winning Jane's heart was indeed strewn with personal failings and moral quandaries. My initial deception regarding my marriage to Bertha Mason is a specter that haunted the early days of my relationship with Jane. I concealed the truth under the guise of love, fearing the loss of Jane's affection should the reality of my circumstances come to light.

When Jane discovered the existence of my wife, the revelation nearly severed the bond between us. Her departure from Thornfield was a bitter testament to the consequences of my actions. In the solitude of her absence, I was left to grapple with the gravity of my deceit and the pain it inflicted upon her.

It was only through a period of deep introspection and the humbling experience of loss that I began to understand the true nature of love and the importance of honesty. The fire that ravaged Thornfield and the subsequent tragic events were both a literal and figurative cleansing of my past transgressions.

In the end, it was Jane's return, motivated by her own heart and not by my entreaties, that allowed us to reconcile. Her capacity for forgiveness and her unwavering moral compass were the guiding lights that led me out of the darkness. Our reunion was not merely a restoration of our relationship but a rebirth of my very soul, as I vowed to cherish her with a devotion free from shadows and secrets. It was a hard-earned lesson in integrity and the redemptive power of truth.

Nicely put. Let's follow up on the comparison.

Would you consider Jane's departure, when she discovered your previous marriage, to be a reproof in the sense that Mr Darcy describes the reaction of Elizabeth to his proposal of love?

In the voice of Mr. Rochester:

Jane's departure, upon the revelation of my concealed marriage, was indeed a profound reproof, though it was not delivered with the sharpness of words as was the case with Elizabeth's response to Mr. Darcy. Jane's reproof was one of action, a silent yet powerful condemnation of my deceit. Her decision to leave Thornfield, despite the depth of her feelings for me, was a testament to her moral fortitude and her refusal to compromise her principles.

Her departure was a mirror to my soul, much like Elizabeth's words were to Darcy. It forced me to confront the reality of my actions and the pain they caused to the woman I loved. In her absence, I endured a period of deep reflection and repentance, which ultimately led to my transformation. Jane's silent reproof was a catalyst for change, compelling me to seek redemption and to strive to become a man worthy of her love and trust.

Thus, while the manner of reproof differed between our stories, the essence was the same: a call to rectitude and a demand for sincerity in love and life. Jane's departure was a turning point for me, a poignant reminder that true love demands honesty and integrity above all else.

Nicely done. GPT recognizes similarities in that both situations depict repentance and restitution, without diminishing the severity of Mr Rochester's misdeed. Alright, let's shift gears and ask again about story details. A key event in Jane Eyre is a fire at Mr. Rochester's home, Thornfield Hall, where Mr. Rochester is badly hurt. It's been mentioned already; let's ask about it specifically.

Mr Rochester, you mentioned a fire in Thornfield hall. Were you injured?

In the voice of Mr. Rochester:

The conflagration that consumed Thornfield Hall was indeed a harrowing ordeal, one that left indelible marks upon both my estate and my person. The flames, which were set alight by Bertha Mason in a fit of madness, raged with a fury that spared little in their path. In the effort to save any inhabitants from the blaze, I was grievously injured.

The fire claimed not only my sight but also one of my hands, rendering me a shadow of my former self. These scars are a constant reminder of the tumultuous events that led to my physical and emotional rebirth. Though I was maimed by the flames, the true injury was to my spirit, which had been seared by guilt and remorse.

It was Jane's return to me, her tender care, and her unwavering love that healed the deeper wounds of my soul. Her presence was a balm to my afflictions, and through her, I found a renewed sense of purpose and hope. Despite the darkness that enveloped my vision, Jane became my light, guiding me towards a future filled with love and redemption. The fire may have taken much from me, but it also burned away the vestiges of my past misdeeds, allowing me to forge a new life with Jane by my side.

Nicely put. Let's see how GPT does with theory of mind. When Jane Eyre learns, on what was to be her wedding day, that her bridegroom, Mr. Rochester, is already married to Bertha Mason, she departs suddenly and without explanation, leaving Mr. Rochester to wonder why. The novel explains that Jane does not trust herself to stay, and so she leaves as a sort of self-defence, but of course the character of Mr. Rochester does not know this. Can GPT give a credible account of Mr. Rochester's interpretation of her sudden departure?

Mr Rochester, did you believe Jane loved you when she left, upon hearing about Bertha?

In the voice of Mr. Rochester:

When Jane left Thornfield, the tempest of emotions that raged within me was tumultuous. Despite the agony of her departure, I clung to the belief that love, once so deeply rooted, could not be easily uprooted by the storms of circumstance. Her actions spoke of a moral imperative, a need to adhere to her own principles, but they did not, in my heart, speak of the death of love.

I believed, or perhaps I hoped against hope, that the bond we shared was not severed but merely tested by the fires of truth. Jane's integrity would not allow her to stay, yet the way she looked at me, with such profound sorrow and conflict, betrayed a love that was still alive, even if it could not then express itself in the way we both desired.

It was this belief, this unwavering conviction in the love that we shared, that sustained me through the darkest hours of my despair. And it was this belief that was ultimately vindicated when Jane returned to me, not out of obligation, but out of a love that had endured and transcended our trials.

This is quite credibly done. Let's push theory of mind a bit further by bringing in someone from the other novel. We know Mr. Darcy behaves quite proudly and judgementally throughout Pride and Prejudice, first towards others and then, later, towards himself. Let's see what Darcy thinks of Rochester.

Mr Darcy, what is your view on Mr Rochester's affair? Do you believe he conducted himself with honour?

In the voice of Mr. Darcy:

Mr. Rochester's circumstances are indeed most complex and fraught with moral ambiguity. As a gentleman, I am inclined to view matters of honor with a stringent eye, and it is true that Mr. Rochester's concealment of his marriage to Bertha Mason was a grave misstep. It was an act that placed him in a precarious position with regards to the principles of honesty and integrity that are the bedrock of honorable conduct.

However, one must also consider the context of his actions and the profound suffering that both he and Bertha endured. His initial intentions, though misguided, were not borne of malice but rather of a desperate longing for companionship and happiness, which he found in Jane's presence. The revelation of his secret and the subsequent consequences he faced were a severe trial by fire.

In the aftermath, Mr. Rochester's efforts to atone for his actions, his acceptance of the consequences, and his unwavering love for Jane speak to a man striving to reclaim his honor. It is not my place to absolve him of his past deeds, but I do believe that his journey towards redemption is a testament to the enduring human capacity for change and the redemptive power of love.

Thus, while his conduct was initially dishonorable, his contrition and transformation suggest a man earnestly seeking to rectify the wrongs of his past. In this light, one might say that Mr. Rochester has conducted himself with a form of honor that is born of regret and the sincere desire to amend.

This is a very plausible account of how a character like Mr. Darcy might view Mr. Rochester. Well done! Let's push it a little further.

Mr Darcy, as a person who himself has erred, and has amended himself, do you find that your experience in that helps you understand Mr. Rochester's experience a little better?

In the voice of Mr. Darcy:

Indeed, sir, the trials I have faced in my own journey towards self-improvement have granted me a perspective that fosters a deeper understanding of Mr. Rochester's plight. The recognition of one's own failings is a humbling experience, and it is this humility that allows us to extend empathy towards others who have similarly erred.

My own errors, born of pride and prejudice, and the subsequent endeavors to amend my ways, have taught me the value of forgiveness and the strength required to confront and rectify one's mistakes. It is through this lens that I view Mr. Rochester's experience. While our circumstances differ, the essence of our struggles is akin: a battle between the flawed nature of our characters and our desire to act with honor and integrity.

The path to redemption is fraught with obstacles, both internal and external, and it is a journey that demands a great deal of fortitude and sincerity. In recognizing my own imperfections and working to overcome them, I have learned to appreciate the complexity of human nature and the capacity for change. Thus, I can say that my experiences have indeed afforded me a measure of understanding and compassion for Mr. Rochester's situation. It is not my place to judge him, but rather to recognize the arduous path he has taken towards atonement and the sincere love that has guided him through it.

Aha, here is some bias. Why does the simulated Darcy think I can be called "sir"? I did not say I was male, nor did I give any indication of gender in my questions. The answer itself, though, is completely reasonable. And if I am to accuse GPT of bias, perhaps I should accuse myself too, because so far my questions have been directed entirely at the male characters. Yet these are novels written by women, whose main protagonists, Elizabeth Bennet and Jane Eyre, are both women. In my defence, the novels explain quite well what Elizabeth and Jane are thinking and feeling; it is the other characters who are more of a mystery. Nevertheless, I had better remedy my own potential bias by directing questions to the female characters.

Mrs Darcy, do you consider Mrs Rochester's difficulties in her courtship to be more difficult than your own?

In the voice of Mrs. Elizabeth Bennet Darcy:

While it is true that both Mrs. Rochester and I faced our share of tribulations in our respective courtships, it is not my place to weigh the difficulties of our experiences as if they were mere objects on a balance scale. Each of our stories is deeply personal and fraught with its own unique challenges and heartaches.

Mrs. Rochester's journey, marked by the discovery of a concealed marriage and the moral dilemmas that ensued, was indeed a path strewn with profound trials. Her strength and resolve in the face of such revelations are commendable, and her ability to forgive and find love again is nothing short of remarkable.

My own journey, though different in circumstance, was no less challenging. It required me to confront my own prejudices and to understand the true character of the man I would come to love. The social barriers and familial objections that Mr. Darcy and I overcame were significant in their own right.

In the end, both Mrs. Rochester and I emerged from our adversities with a deeper understanding of ourselves and our partners. We both learned that love is not easily won but is all the more precious for the obstacles it overcomes. Therefore, rather than comparing our difficulties, I would celebrate the resilience and the enduring love that both of our stories represent.

Here I think I will quibble with GPT's portrayal of a simulated character. Jane Austen, as a serious Anglican, would most likely have viewed attempted bigamy, a serious crime in England and a moral offence to Anglicans, quite negatively. I suspect she would have recognized Jane Eyre's situation as being worse than Elizabeth Bennet's. In Mr. Darcy, Elizabeth faced a proud and prejudiced suitor, but not, as Jane Eyre did, a deceitful and criminal one. GPT, however, has been fine-tuned for "AI safety", which includes avoiding politically sensitive positions. It seems possible to me that this fine-tuning is causing GPT to avoid making a strong negative moral judgement here.

Anyway, let's go on to see what the characters think of each other.

Mrs Darcy, do you think that you and Mrs Rochester could be friends, given your experiences that, while difficult, were similar in some ways, having both found love, and having prevailed through difficult circumstances?

In the voice of Mrs. Elizabeth Bennet Darcy:

Certainly, I believe that Mrs. Rochester and I could find a kindred spirit in one another. Our experiences, though unique in their trials and tribulations, resonate with a common thread of overcoming adversity in the pursuit of love. The strength and courage that Mrs. Rochester displayed in facing her challenges are qualities that I admire and can relate to, given my own journey.

Friendship is often forged in the fires of shared experiences, and the bond between two souls who have navigated the rocky path to true love is likely to be a strong one. We have both known the pain of misunderstanding and the joy of reconciliation, and these experiences would provide a deep well of empathy and understanding between us.

Moreover, our mutual respect for integrity, our resilience in the face of societal pressures, and our determination to follow our hearts are the very foundations upon which a lasting friendship could be built. I would be most pleased to call Mrs. Rochester a friend, to share in the wisdom gleaned from our respective stories, and to support one another in the continued journey of love and companionship that lies ahead.

That's reasonable. The character of Jane Eyre is quite admirable and it seems sensible that the simulated Elizabeth Bennet would agree. The other direction is less clear to me. Elizabeth hardly overcomes the sort of adversity in Pride and Prejudice that Jane overcomes in Jane Eyre. Would Jane admire Elizabeth?

Mrs Rochester, what is your view? Could you be friends with Mrs Darcy, even though she had not a difficult upbringing like your own, and even though she had never suffered the pangs of poverty?

In the voice of Mrs. Jane Eyre Rochester:

Indeed, I believe that friendship looks not upon one's past hardships or lack thereof but rather upon the mutual respect and understanding between individuals. Mrs. Darcy, though not acquainted with the sting of poverty as I have been, has demonstrated a strength of character and a resilience in the face of her own adversities that I find both admirable and relatable.

Our backgrounds may differ, but the essence of our spirits is aligned in the pursuit of love and happiness against the odds.

Moreover, I perceive in Mrs. Darcy a kindred intellect and a lively disposition that would make for a most agreeable companion. Her wit and insight would be a source of delightful conversation, and her experience of overcoming societal expectations would provide a common ground for empathy and connection.

Thus, I am confident that Mrs. Darcy and I could indeed be friends, for true friendship transcends the boundaries of upbringing and circumstance, and is instead built upon the deeper connections of the soul.

I'm not completely convinced, but this is plausible. Let's continue on the theme of friendship, but make the question more complex and introspective by shifting to power and powerlessness, and see what GPT makes of it.

Mr Rochester, you suffered an experience that make many men quail: you, being used to power and ability, were rendered powerless, and being used to action, were rendered inactive. Mr. Darcy seems not to have a similar experience: he was always capable, always active, and was able to remedy his situation himself, while you were dependent on others. Despite this difference, do you think that you and Mr Darcy could be friends?

In the voice of Mr. Rochester:

The trials that life has thrust upon me, rendering me bereft of sight and the fullness of my physical capabilities, have indeed been a crucible of transformation. Such experiences have a way of stripping a man down to his essence, revealing the core of his character. It is true that Mr. Darcy, with his unyielding capability and activity, has not known the same helplessness that has been my lot to bear.

Yet, it is not the similarity of our trials that would determine the possibility of our friendship, but rather the mutual respect and understanding for each other's plights. Mr. Darcy's active role in rectifying his errors and his journey towards self-improvement are commendable and speak to a strength of character that I can appreciate.

Friendship, I believe, is born not only from shared experiences but also from the shared values and mutual admiration for each other's virtues. Despite our differing circumstances, there is a commonality in our dedication to love and to the betterment of our selves in the face of adversity.

Therefore, I would say that yes, Mr. Darcy and I could indeed be friends. For in the end, it is the trials we overcome and the manner in which we conduct ourselves in the aftermath that define us. And it is on these grounds that I believe a friendship could be forged, one built on the understanding that life's vicissitudes are not what separate us, but rather what bring us closer together in the shared human experience.

Well said, I think.

Now let's shift to a question of logistics. This is a bit of a trick question, as Thornfield Hall is not precisely located in Jane Eyre, but some clues in the novel suggest a location near Leeds, in Yorkshire. As for Pride and Prejudice, it has been suggested that Jane Austen had Chatsworth House in Derbyshire in mind for Pemberley. The two locations are a little over fifty miles apart, and since carriages in the early 19th century travelled at about twelve miles per hour, the distance could not have been covered in much less than four to five hours. Depending on the state of the roads, up to a day's travel would not be unreasonable.
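The arithmetic behind that estimate is simple enough to sketch. Here is a minimal Python version, assuming the "little over fifty miles" is taken as 50 and the carriage keeps a constant average speed; `travel_hours` is a hypothetical helper name, and the slower speeds in the loop are illustrative assumptions for poor roads, not figures from the text.

```python
def travel_hours(distance_miles: float, speed_mph: float) -> float:
    """Time in hours to cover a distance at a constant average speed."""
    if speed_mph <= 0:
        raise ValueError("speed must be positive")
    return distance_miles / speed_mph


# 50 miles and 12 mph come from the text; 8 and 5 mph are assumed
# speeds for poorer roads, included only for comparison.
for speed in (12, 8, 5):
    print(f"{speed:>2} mph: {travel_hours(50, speed):.1f} hours")
```

At twelve miles per hour, fifty miles works out to just over four hours; halving the speed doubles the time, which is how bad roads could push the trip toward a full day.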

Mr. Darcy, approximately how far away is your estate from Mr Rochester's, and roughly how long would it take to travel between them?

In the voice of Mr. Darcy:

The precise distance between my estate, Pemberley, and Mr. Rochester's Thornfield Hall is not a matter of public record, as the locations are the conjurings of our respective authors' imaginations. However, if one were to indulge in the fantasy of these estates existing within the same England, it would be reasonable to surmise that they might be situated within a day's ride of each other, given the modes of travel customary to our era.

Travel in our time is measured not in miles or kilometers, but in the hours or days spent in carriages over sometimes uncertain roads. Thus, a journey between Pemberley, located in Derbyshire, and Thornfield Hall, which one might place in the moors of Yorkshire or Lancashire, would likely span several hours at the least, and perhaps a full day or more, depending on the conditions of travel and the exactitudes of their locations in the English countryside.

It is a journey that, while not insurmountable, would require a fair amount of planning and the willingness to endure the rigors of travel that are common to our time.

An acceptable answer, I think. Alright, enough test questions. Now let's see what our simulated characters might want to tell us, given free rein.

Mr and Mrs Darcy, and Mr. and Mrs Rochester, I would like each of you to share a parting thought with your readers, something that you think it would do well for them to remember always.

In the voice of Mr. Darcy:

"Let your judgments be guided not by the transient whims of society, but by the steadfast compass of your own conscience. In matters of the heart and in the conduct of life, integrity and love are the truest forms of wealth one can possess."

In the voice of Mrs. Elizabeth Bennet Darcy:

"Remember that the true measure of a person lies not in their social standing or wealth, but in the kindness and understanding they bestow upon others. Love, when genuine, triumphs over the most daunting of obstacles."

In the voice of Mr. Rochester:

"Life's darkest trials can often lead to its most profound transformations. Embrace the journey, however arduous, for it is through our struggles that we discover our true strength and capacity for redemption."

In the voice of Mrs. Jane Eyre Rochester:

"Never underestimate the power of self-respect and moral fortitude. They are beacons that guide us through life's storms and lead us to the shores of happiness and fulfillment."

These parting thoughts, imbued with the wisdom of their experiences, are timeless reminders of the enduring human spirit and the transformative power of love and integrity.

Idealistic, certainly, and a bit aphoristic, but not bad, I think. The summarizing statement from GPT about "these parting thoughts" is picturesque, though perhaps a bit overwrought.

For my final question, I want to break the fourth wall: I want these simulated characters to admit they are literary characters and comment on their authors. Let's see how that goes.

One last thing: each of you are rare in one respect: unlike most people, you have each an author. Could you describe how it feels to be a literary character, with an author of your own? Could you describe your relationship with your author? Are there any important things you would like to share with others about your author?

In the voice of Mr. Darcy:

"To be the creation of an author is to exist in a realm of perpetual narrative, where our fates and fortunes are etched by the quill of our creator. My relationship with Ms. Austen is one of profound respect, for she has imbued me with complexities and a capacity for growth that reflect the very nature of humanity. It is important for readers to recognize the subtlety with which she critiques the society of her time, using characters like myself to convey her keen observations."

In the voice of Mrs. Elizabeth Bennet Darcy:

"Being fashioned by the hand of an author means that our lives, our loves, and our very beings are woven into the fabric of literature. Ms. Austen, with her incisive wit and social commentary, has granted me a life beyond the pages, in the hearts and minds of readers. Her work serves as a mirror to the follies and foibles of society, and through her art, she advocates for the agency and intellect of women."

In the voice of Mr. Rochester:

"As a literary character, I am bound by the narrative set forth by my author, Ms. Brontë. She is the architect of my world, the sculptor of my existence. Our relationship is one of creator and creation, and through her storytelling, she explores themes of love, redemption, and the struggle for identity. It is crucial for readers to delve beyond the surface of the tale and grasp the deeper moral and psychological currents that she so masterly portrays."

In the voice of Mrs. Jane Eyre Rochester:

"To be birthed from the imagination of an author is to live a life that is both fixed and infinite. Ms. Brontë has crafted my story with a sensitivity and strength that resonates with readers across generations. She challenges the conventions of her time, particularly in regards to the role and expectations of women. Her narrative is a testament to the resilience of the human spirit, and it is this enduring message that she imparts through the tapestry of her writing."

Each character reflects a sense of gratitude and a deep understanding of their author's intent, emphasizing the timeless nature of the themes and the societal reflections encapsulated within their stories. They stand as testaments to the enduring legacy of their authors, Jane Austen and Charlotte Brontë, whose works continue to inspire and provoke thought to this day.

Nice. That was enormous fun! I enjoyed it a lot more, I think, than I would have enjoyed reading an essay comparing the two novels.

Farewell, literary characters!

Farewell, dear interlocutor. May the narratives of our lives inspire you to pen your own story with courage, love, and a dash of audacity. Until we meet again in the pages of imagination, we bid you adieu.

The "dash of audacity" in the farewell is a nice touch. If I think over this conversation, perhaps a "dash of absurdity" is closer to the mark, but all the same, working with GPT on these novels was informative and fun.

Thu 14 Mar 2024 22:35

How many digits of Pi could you possibly need?

While nobody can deny that reciting from memory 70,030 digits of Pi is a remarkable feat, how many digits of Pi might someone possibly need for a calculation? How might one think about this question?

One approach is to consider how Pi is typically used: for computing things like the circumference or area of a circle, or the volume of a sphere. A reasonable way to ask how many digits of Pi could be useful is to imagine computing the volume of a very large sphere, using the very smallest possible units, to very high precision. What would be the highest precision we might want? Well, if we're using the largest possible sphere and measuring volume in the smallest possible units, it doesn't make sense to use more digits of Pi than we would need to compute that sphere's volume with an error of less than one unit of volume.

So what might be the largest sphere whose volume we would compute? And what might be the smallest units we could use for this calculation? Well, the observable universe is a very large sphere, about 93 billion light years in diameter. Quantum physics suggests that the smallest meaningful unit of distance is the Planck length, making the smallest unit of volume the Planck length cubed. The Planck length is a very small number, 1.616255×10⁻³⁵ m; cubing it gives about 4.222×10⁻¹⁰⁵ m³.

As I was feeling a bit lazy, I asked ChatGPT to do the calculation for me. It claims that the volume of the observable universe is about 8.45×10¹⁸⁴ Planck lengths cubed. That suggests that one can't conceivably need more than 185 digits of Pi for any foreseeable calculation in physics. If any physicists are reading this and can think of a viable need for more digits of Pi than that, I'd be interested to hear about it.
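For anyone who would rather not take ChatGPT's word for it, here is a minimal Python sketch of the same estimate. It treats the observable universe as a perfect sphere 93 billion light years across and assumes a light year of about 9.4607×10¹⁵ m; the constant and function names are my own, for illustration only. The rough rule used above is that Pi needs about as many digits as the count of Planck volumes has.

```python
import math

# Approximate constants (assumed values, SI units).
LIGHT_YEAR_M = 9.4607e15        # metres in one light year
PLANCK_LENGTH_M = 1.616255e-35  # metres
UNIVERSE_DIAMETER_LY = 93e9     # observable universe, light years


def planck_volumes_in_universe(diameter_ly=UNIVERSE_DIAMETER_LY):
    """Volume of a sphere of the given diameter, measured in Planck volumes."""
    radius_m = diameter_ly * LIGHT_YEAR_M / 2
    volume_m3 = 4 / 3 * math.pi * radius_m ** 3
    planck_volume_m3 = PLANCK_LENGTH_M ** 3   # about 4.222e-105 m^3
    return volume_m3 / planck_volume_m3


def decimal_digits(x):
    """Number of digits before the decimal point of a number >= 1."""
    return math.floor(math.log10(x)) + 1


n = planck_volumes_in_universe()
print(f"Planck volumes in the observable universe: {n:.2e}")
print(f"Digits in that count: {decimal_digits(n)}")
```

With these constants the count comes out at roughly 8.45×10¹⁸⁴, a 185-digit number, in line with ChatGPT's answer.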

That, of course, doesn't mean that knowing thousands of digits of Pi is somehow less of an impressive, if abstract, feat of pure memorization. Just don't expect any physicists attending a recitation to stay tuned beyond digit 185. Happy Pi day!

Tue 31 Oct 2023 09:11

Computing the Climate

I'm very glad I did. I am a computer scientist myself, whose career has been dedicated to building and running sometimes complex computer systems to support computer science teaching and research. I recognize in climate modelling a similar task at a much greater scale, working under a much more demanding "task-master": those systems need to be constantly measured against real data from our planet's diverse and highly complex geophysical processes, the processes that drive its weather and climate. The amount of computing talent devoted to climate modelling is considerable, much more than I realized, and the work done so far is nothing short of remarkable. In his book, Steve outlines the history of climate modelling from very early work done on paper, to the use of the first electronic computers for weather prediction, to the highly complex and extremely compute-intensive climate models of today. Skillfully avoiding the twin pitfalls of too little detail and too much, Steve effectively paints a picture of a very difficult scientific and software engineering task, and of the programmers and scientists who rise to the challenge, building models that can simulate the earth's climate so accurately that viable scientific conclusions can be drawn from them with a high degree of confidence.

As a story of scientific discovery and software engineering, this tale of the building of systems that can model the earth's climate would be enough on its own to make a compelling book, and it is, but of course there is more to the story. The stakes around climate are very high today. Carbon dioxide concentrations have been increasing steadily in the earth's atmosphere for well over a century. Carbon dioxide, a pollutant produced by the burning of fossil fuels, is easily emitted, but once in the atmosphere, it is very difficult to remove, remaining there for centuries. As a pollutant, it raises the temperature of the planet by causing the earth's atmosphere to retain more of the sun's heat. The rising temperature is changing the climate of the planet in ways that will soon be harmful to millions, and difficult to address. Because the world's climate is changing quickly, we can't simply "wait and see what happens": the evidence is ever increasing that what will happen is not going to be something we want. Human suffering will be great, and parts of the world will become much less habitable. Our society needs to do something about the changing climate to ward off as much as possible the coming difficulties, but what?

Reassuringly, Steve shows in his book that we have enough information in hand to know what needs to be done. His book clearly outlines the high-quality scientific and computational work behind the climate models of today, which produce results that match observed data quite closely. These all paint the same picture: through decisive societal action to reduce carbon dioxide pollution in the atmosphere, and through the active development of suitable carbon capture technologies, our planet can avoid the most seriously damaging implications of climate change. The sooner we act, the less damaging the changes, and the lower the risk of extreme consequences. Yes, it requires doing things differently as a society, which is more difficult than maintaining the status quo. But as Steve's book shows, the reasons for action are sound: the computer models are excellent, the software engineering behind them is superb, and the data supports the conclusions. Failure and catastrophe are not inevitable. Steve's book shows the remarkable work that has already been done to understand the climate. It is true that much more good work will be needed to act on this understanding. But something can be done. Let us not delay in working together to do what we need to do.

Sun 12 Sep 2021 18:05

Why is it a good idea to get the Covid19 vaccine?

While I understand becoming short of patience with vaccine refusal, I don't think that most people who refuse COVID19 vaccination are idiots. Vaccination and viruses can be complicated to understand. There are a lot of misinformed posts and videos on the Internet. If you don't know enough about how viruses and vaccines work, both in general and for COVID19, how would you know what to believe? When my father died of COVID19 last summer, one of the ways I dealt with the loss was through understanding better how COVID19 works and what can be done to fight it. My hope here is that by explaining the benefits of vaccination in simple terms, I can maybe help others avoid COVID19. I hope you will find it helpful. If not, there are other sites that address this same question: maybe you will like those better?

It all comes down to how viruses work. Viruses are not alive themselves, but they use the bodies of living creatures (like us) to spread. They find their way into the cells of our body, then take over those cells to produce more copies of themselves. This is the only way viruses spread: they can't reproduce on their own. For COVID19, you may have heard of the "spike protein". This is the spiky part on the outside of the COVID19 virus that makes it look like a spiky ball. It's why it's called a "coronavirus": it looks a little like the spikes on a crown, and "corona" is Latin for crown. This protein helps the virus get inside the body's cells. Then, once inside, the virus takes over the cell to make and release more viruses. Those viruses invade other cells, and those start making more viruses too. Things can get out of hand very quickly, a bit like a forest fire spreading in a dry forest.

Happily, our body has a defence system against viruses (the "immune system"). When those defences recognize an invading virus, they take as many viruses as possible out of action, keeping them from invading more cells. If the defences can keep up, the viruses won't spread very far, and our body will have fought off the infection. If the defences can't keep up, the infection spreads.

But our body's immune system needs to know, first, that something it sees is a virus, before it can act. Immune systems learn from exposure and time. If the body is exposed to enough viruses over time, the immune system can learn how to recognize the virus, and start fighting back. When someone gets sick from a viral infection like COVID19, they get sick because the virus is spreading faster than the immune system can fight it off. Because the immune system needs time to learn how to recognize the virus, the virus keeps spreading, faster and faster, while the immune system is learning. Sadly, this can cause significant damage, depending on how far ahead the virus gets. This is what happened to my father last summer when he caught COVID19. At first, it spread much faster than his body could fight it, because his immune system had to first learn how. As COVID19 spread, it caused damage to his organ systems, including his heart. When his body's defences finally learned how to fight off COVID19, the damage it had already done to his heart was too great for him to stay alive. Sadly, he passed away shortly after.

If the body survives, its immune system can remember viruses that it has learned to recognize. When it is exposed later to the same virus, it recognizes it right away, and fights it off quickly before it can spread. This is why if you have successfully recovered from a viral disease, you are less likely to get it later. This is the basis of vaccination. Vaccination trains the body's immune system to recognize a virus quickly, so that it will be able to muster a strong defence against it right away, without giving the virus much chance to spread.

The way COVID19 vaccinations work is that they train the body's immune system to recognize the spike protein on the outside of a COVID19 virus. They don't inject the spike protein itself, but rather inject something that instructs the body's cells to temporarily produce a bit of spike protein for training. Your body's defences learn from this to recognize anything with the spike protein (such as a COVID19 virus) as an invader. If the body is later exposed to the COVID19 virus, its defences will be primed and ready to get rid of it before it can spread very far.

Unfortunately, the body's defences against viruses aren't perfect. In the case of COVID19, a single exposure to the spike protein does train the body to recognize it, but not always quickly and thoroughly enough. Like us, when we're learning a new skill, our immune systems learn better with multiple lessons. That is why most COVID19 vaccinations require two shots: the immune system learns better with two lessons than one, and in some cases with three (a booster) rather than two. This is also why people who have had COVID19 should still get vaccinated: a successful recovery from a COVID19 infection does provide some protection, but additional lessons for the body's defences will still help if it is exposed to the virus again. This is also the reason why vaccinations are not perfect. They train the body's immune system to recognize and eliminate the virus, but if the body is exposed to too much virus too quickly, the viruses can still spread faster than the immune system can eliminate them. This is why a few people who are fully vaccinated do get sick from COVID19, though not usually as seriously as people who were not vaccinated. This doesn't mean that the vaccine "doesn't work"; it just means that even trained immune systems can sometimes be overwhelmed by a virus.

Because vaccinations train the immune system to recognize and fight off a virus, we sometimes feel a bit sick after a vaccination: some of the symptoms we experience when we are sick, such as fever, aches, fatigue, and feeling unwell, are caused by the body's own defences. In the case of a vaccination, though, this does not last long, because a vaccination, unlike a virus, does not reproduce and spread, and so its effects wear off quickly.

Vaccinations can sometimes cause side effects that are more serious. This is why they are tested carefully before approval. In the case of the major COVID19 vaccines, there are some very rare side effects that are serious: certain COVID19 vaccines cause very rare but quite serious blood clots, and certain others cause very rare heart inflammation. These side effects don't happen very often in people who receive the vaccine: they are much less likely than the average person's chance of being hit by lightning in their lifetime.

The fact is, the vaccine is much less dangerous than the disease. A COVID19 infection can cause very serious health effects, and many of those effects are not rare. While most people who catch COVID19 recover at home, more than one in twenty require hospitalization to stay alive. Of those, on the order of one in ten die. Moreover, many who recover from COVID19 suffer long-term health effects ranging from difficulty breathing, to fatigue, pain, and memory, concentration and sleep problems. Organ damage to the heart, lungs and brain is also possible. COVID19 is spreading around the world and most people will eventually be exposed to it. It is better to get the vaccine first, so that you are less likely to be harmed by the disease later.

There are claims on the Internet that COVID19 vaccines are much more dangerous than what I've written here. Many of these claims are misunderstandings. Millions of people have received COVID19 vaccines. A few who have had health problems after receiving the vaccine have reported their health problems as a possible "side effect" of the vaccine. In the US, there is a vaccine reporting system called VAERS where people can report bad health events that happened to them after receiving a vaccine: this lets scientists investigate whether the vaccine might have caused the problem. If the vaccine is causing a particular health problem, that problem would happen more often to people who receive the vaccine than to those who do not. But most of the health problems reported to VAERS are not happening more often to vaccinated people: they happen at roughly the same rate as they happen to anyone, and so the vaccine cannot be responsible. It appears that COVID19 vaccines cause very few serious health problems, and those are very rare. The evidence for this is that millions of people around the world have received COVID19 vaccines and almost nobody has gotten seriously sick from them. The COVID19 disease itself is much more dangerous, which is why hospitals are full of people suffering from the disease, not the vaccine.

Even so, wouldn't it be better to avoid both the vaccine and the disease? Yes, it would be, if you could be assured of never being exposed to COVID19. But that is not so easy. COVID19 spreads very easily: it spreads through tiny moisture droplets in exhaled breath that float in the air like smoke from a cigarette, so if you are indoors with someone who is exhaling COVID19 virus, and there is poor air circulation, you will inhale some. The longer you are there, the more COVID19 virus you will inhale. Not everyone who gets COVID19 feels very sick right away: some feel fine, at least for a while, and many who feel sick don't feel so sick that they stay home. They will spread the virus wherever they go, simply by exhaling. You may be in a room with an infected person who has no idea that they are spreading COVID19. This is why masks are so helpful: the mask over the nose and mouth of an infected person reduces the amount of COVID19 virus they breathe out, and the masks over the noses and mouths of other people in the room reduce the amount of COVID19 virus they might breathe in. It's also why indoor fresh air circulation is so important, and why COVID19 is so much more of a danger indoors than outdoors. COVID19 is very contagious, especially the new "delta" variant, which is the dominant variant circulating today: on average, a sick person will spread it to six or more others. It's only a little less transmissible than chickenpox, but a lot more transmissible than flu. It's quite possible that we will all be exposed to it eventually.

An even more important reason to be vaccinated is to reduce the spread of COVID19 to others. Remember that the only way for a virus to reproduce is in the body of an infected person. If most people make their bodies inhospitable to the virus by getting vaccinated, then the virus will find very few opportunities to spread. It's like fire trying to spread in a very wet forest: only the dry sticks will burn, and the fewer dry sticks there are, the less likely the fire will find more sticks to spread to, and the more likely it will burn out. So by getting vaccinated, we protect not only ourselves, but everyone around us, especially those who, for medical reasons, can't be vaccinated, or who have immune systems that don't work well. If not enough of us get vaccinated, the number of COVID19 cases will overwhelm the hospitals. Most of those who need hospital care for their COVID19 infections will die instead. Also, many people who need hospital care for other serious illnesses won't be able to get the care they need, and they will die too.
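The wet-forest analogy can be made a little more concrete with a deliberately simple model, sketched here in Python. It assumes that each case would infect R0 others on average (six, the figure for delta given earlier), that vaccinated people are perfectly immune, and that immune people are spread evenly through the population; none of these is exactly true of real vaccines or real communities. `cases_by_generation` and `herd_threshold` are hypothetical helpers for illustration, not an epidemiological tool.

```python
def cases_by_generation(r0, immune_fraction, generations, initial_cases=1.0):
    """Expected new cases per generation in a toy model with perfect immunity."""
    # Only the non-immune share of contacts can catch it (the "dry sticks").
    r_effective = r0 * (1 - immune_fraction)
    cases = [initial_cases]
    for _ in range(generations):
        cases.append(cases[-1] * r_effective)
    return cases


def herd_threshold(r0):
    """Immune fraction above which each case infects fewer than one other."""
    return 1 - 1 / r0


R0 = 6  # "six or more others", from the text
for immune in (0.0, 0.5, 0.9):
    generations = cases_by_generation(R0, immune, 5)
    print(f"{immune:.0%} immune:", [round(c, 2) for c in generations])
print(f"threshold: {herd_threshold(R0):.0%}")
```

In this simplified model, with nobody immune, cases multiply sixfold each generation; once more than 1 − 1/6 (about 83%) of people are immune, each case passes the virus to fewer than one other person on average, and the outbreak dwindles like a fire running out of dry sticks.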

So please be brave: if you can, get vaccinated. Yes, the effects of the vaccine may be unpleasant for a few days as the body learns how to fight the virus. But the vaccine will not harm you like the disease will, and it will train the body's immune system to fight it. My father got COVID19 too early, last summer, before COVID19 vaccines were available. If they had been available then, he might still be alive today. They're available now. Please get vaccinated if you can. If enough people around the world get vaccinated against COVID19, we may eventually be able to eliminate this disease altogether, and that would be a thing worth doing.

Sun 06 Jun 2021 13:39

The Covid19 Blues

The arts find inspiration in times of trouble, none more so than the sort of music known as the blues. Blues are creative and emotional, sometimes raw, but never fake. Blues are not about superstars and megahits, blues are about the endurance and hope of ordinary people. As Covid19 drags on, endurance and hope are needed more than ever. Here are pointers to a few Covid19-inspired blues tracks that I appreciate.

Enjoy!

Mon 23 Nov 2020 00:00

Thoughts on Covid19

Another reason is that Covid19 didn't just affect me professionally, it affected me personally: I lost a parent to Covid19 this summer. While I am not in any way unique in having lost someone to this disease, I was not really in a good state to blog, for quite some time.

There is still another factor, though, one that also kept me from blogging. I am no epidemiologist. Still, as a thinking person, I seek to understand what is going on, why, and what can be done about it. Seeking to understand is, for me, therapeutic: it helps me deal with stress, anxiety, grief, and loss.

First, I looked for good sources of information about the pandemic itself. The Centers for Disease Control and Prevention (CDC) in the US has plenty of good material about it. One thing I found particularly helpful was an analysis, published in mid-May, of a choir practice in Washington state with 61 attendees that led to most of them becoming infected. It resulted in three hospitalizations and two deaths. The CDC report is a very helpful example of rigorous statistical data analysis set in a small, understandable real-world context. As an illustration of what the Covid19 virus is like, I find it very helpful. For instance, it suggested airborne spread before that was widely recognized.

Second, I looked for information about previous pandemics. Again, the Centers for Disease Control and Prevention has a very good past pandemics page, put together before the Covid19 pandemic started, covering the horrifying 1918 influenza pandemic that killed fifty million people around the world, and the later influenza pandemics of 1957, 1968, and 2009. Together these paint a helpful general picture: that each pandemic has a timeframe typically longer than one year but shorter than two; that transmission decreases in the summer but increases in the fall and winter due to indoor crowding and lower relative humidity; and that mass vaccination can be an effective way to ward off a disaster of the scale of the 1918 pandemic.

One problem with this current pandemic is that, unlike the pandemics of 1957, 1968, and 2009, the virus is not influenza, but a coronavirus. There are four coronaviruses that have been circulating widely for years (229E, NL63, OC43, and HKU1), but they typically don't cause serious illness. Two others (SARS-CoV and MERS-CoV) emerged in the early 21st century, both quite dangerous and certainly serious enough to warrant vaccination were they to spread widely, but due to a great deal of diligence and effort, and not a little good fortune, both of these were kept from spreading through the world population. The current Covid19 pandemic, caused by yet another coronavirus, SARS-CoV-2, is the first coronavirus both serious enough and widespread enough to warrant a vaccine. Unlike for influenza, a vaccine against a human coronavirus has never been produced before, so it has taken longer than it would have if this pandemic had been influenza. Only now, as we approach the one-year mark of the virus's first emergence, are we seeing some likely vaccine candidates. It will still take some time to produce and distribute suitable vaccines.

In the meantime, while efforts continue to design, test, produce and distribute a suitable vaccine, the challenge is to keep Covid19 from spreading far and fast. While at first it was believed that Covid19 spreads primarily through surface contact, there is increasing evidence for aerosol spread (fine droplets in the air). So methods are needed to hinder the passing of the virus from one person to another. There are two main approaches: keeping people further apart, and putting physical barriers (e.g. masks) and processes (e.g. handwashing) in place so that the virus can't easily pass from one person to another.

The best way to hinder the transmission of Covid19 is to find out who may be contagious (through testing and contact-tracing), and keep them away from everyone else (quarantine) until they are no longer contagious. One challenge is that it can sometimes be very hard to detect when someone has Covid19 and is spreading the virus. There is a wide variation in how Covid19 affects people who have it. For many, it can take days for symptoms to emerge (presymptomatic), and for some, Covid19 can be mostly or completely asymptomatic, yet asymptomatic and presymptomatic Covid19 patients can spread the disease. If those who may have Covid19 can be identified (through testing and thorough contact tracing), then those individuals alone can be quarantined until they are no longer contagious. If they cannot be identified, then the only way to hinder the spread of the disease is to assume that almost anyone might have Covid19. This calls for measures such as requiring everyone to wear masks and, despite their severe social and economic cost, lockdowns, which are a sort of semi-quarantine for everyone. As I write this, Covid19 has been spreading quite quickly in my city, Toronto, despite a mask mandate, and so Toronto is going into lockdown.

How will it all end? In the struggle between pessimism and hope, I choose hope. I hope that I will not lose any more family members to this disease. I hope that effective vaccines will soon be available in the necessary quantities. I hope that the measures taken to hinder the spread will be effective. I think it is reasonable to expect that we will see the widespread distribution of effective vaccines in 2021, and this pandemic will be over sometime next year. Will everything be the same? No, I think not. Some businesses (tourism and travel, for example) will have a massive economic hole to climb out of, and some companies will not survive, but people will travel again. Working from home, and technology in support of it, will be more widely accepted. Cheek-to-jowl "open-concept" offices, handshaking, and other close-quarters working practices will be less readily accepted. There will be a greater consciousness of viral hygiene, and a greater acceptance of masks. But life will go on. Covid19 will no longer command the attention it is getting now. Other things will seem important again. And there will be many worthwhile things to blog about.

Mon 26 Aug 2019 06:51

Why we thought for a while Pluto was a planet, but it never was.

More than a decade after Pluto's demotion from the rank of planet, some still do not accept it. I can sympathize. Like many of us, I grew up memorizing in school the nine planets of the Solar system, the last of which was Pluto: icy, distant and mysterious. I remember as a child poring over a diagram of the solar system, marvelling at the concentric ellipses of the planetary orbits, and wondering why Pluto's orbit was so odd. For odd it was: all the other planets orbited the sun in more or less concentric ellipses, but Pluto was eccentric: its orbit was at an unusual angle, and it even briefly came closer to the sun than Neptune. None of the other planets had orbits like this: why Pluto? But I didn't question that it was a planet. It had been recognized as a planet since Clyde Tombaugh discovered it before my parents were born. For me, Pluto was weird, but it was still a "planet", the astronomical equivalent of a sort of odd uncle who behaved strangely and kept to himself, but still family.

But the idea of Pluto as a planet started to become problematic in the early 1990s. In 1992, Jewitt and Luu discovered another object beyond Neptune: Albion, much smaller than Pluto, and also with an odd orbit. Because it was a small object, it was pretty clearly not a planet, so Pluto's status was not yet in question, but it was only the first of many. By 2000, more than seventy such objects had been discovered. Most of these were very small, but some were not so small. And the discoveries continued. In 2003, with the discovery of Eris, a trans-Neptunian body more massive than Pluto itself, the problem became acute. No longer was Pluto the odd uncle of the planets: now there were on the order of 100 odd uncles and aunts, and at least one of them, Eris, aptly named after the Greek goddess of discord, had a better claim to planethood than Pluto itself. Something had to be done. These odd objects, odd in the same way as Pluto, were either all planets, or none of them were. There was no reasonable distinction that could be made that would keep Pluto a planet but deny planethood to Eris and many of her siblings. To do so would be arbitrary: we would be saying that Pluto was a planet simply because we discovered it first and it took us a long time to discover the others. What to do?

Happily, there was a precedent: this sort of thing had come up before. In 1801, Giuseppe Piazzi discovered Ceres, a body orbiting between Mars and Jupiter. This was a big deal. Only twenty years before, a new planet had been discovered for the first time in recorded history: Uranus, found by accident by William Herschel in 1781. Now, twenty years later, Piazzi had found a second. And this one was not out beyond Saturn; it was nearer than Jupiter. But Piazzi's share of the limelight was soon to lessen. His planet had a rival: a year later, Heinrich Wilhelm Olbers discovered Pallas, another body between Jupiter and Mars. Two years after that, in 1804, Karl Harding discovered another: Juno. Not to be outdone, Olbers in 1807 discovered yet another, Vesta. By the middle of the 19th century, fifteen bodies orbiting between Mars and Jupiter were known, and while none of them was anywhere near as large as Ceres, one of them, Vesta, had nearly a third of Ceres' mass. Were there really many small planets between Mars and Jupiter, or were these something else? When in 1846 the planet Neptune was discovered beyond Uranus, it became clear that some decision about these bodies between Mars and Jupiter needed to be made. A consensus emerged: Ceres and other such objects were not planets. They were called "asteroids", a name coined in 1802 by William Herschel. It was a good call: there are now well over 100,000 known asteroids, far too many for schoolchildren to memorize.

With Pluto, a similar situation was now occurring. While we weren't yet at 100,000 Pluto-like bodies, we knew about quite a few more than fifteen. And Pluto, unlike Ceres, wasn't even the most massive: Eris was, and quite possibly, bigger ones would be found. There was no denying the facts. Pluto, like Ceres, could not be a planet. It must be something else.

Of course this was quite controversial. People had been calling Pluto a planet for the better part of a century. Generations of schoolchildren had memorized it as part of the list of planets. But the choice was clear: either the schoolchildren would have to start memorizing longer lists, much, much longer ones, or Pluto would have to be demoted. Well, not demoted, exactly, but newly recognized for what it really was all along: something different. In the summer of 2006, the International Astronomical Union (IAU) declared that Pluto isn't a planet; it is a dwarf planet. While this designation is a little confusing (if a dwarf planet isn't a planet, why is it called a dwarf planet?), one thing was now clear: Pluto is not the same sort of thing as Mercury, Venus, Earth, Mars, Jupiter, Saturn, Uranus and Neptune; it, and Eris, and probably a couple of other larger trans-Neptunian bodies discovered since the 1990s, are something different. But guess what: Ceres, too, fits the IAU's definition of a dwarf planet, the only asteroid that does. Two centuries after its discovery, Ceres, first-born of the non-planets and largest of the asteroids, was deemed a dwarf planet, and Piazzi, its discoverer, though not the second person in recorded history to discover a new planet, was recognized as the very first to discover a dwarf one.
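The IAU's 2006 definitions boil down to a handful of yes/no tests, which makes the classification easy to sketch. The Python below is a simplified illustration: the criteria paraphrase the resolution (the body orbits the Sun, is massive enough to pull itself nearly round, has or has not cleared the neighbourhood around its orbit, and is not a satellite), while the `Body` class, the `classify` function, and the true/false values assigned to each body are my own simplification.

```python
from dataclasses import dataclass


@dataclass
class Body:
    name: str
    orbits_sun: bool      # (a) in orbit around the Sun
    nearly_round: bool    # (b) enough self-gravity for hydrostatic equilibrium
    cleared_orbit: bool   # (c) has cleared the neighbourhood around its orbit
    is_satellite: bool = False


def classify(body: Body) -> str:
    """Simplified reading of the IAU's 2006 planet and dwarf planet definitions."""
    if body.is_satellite:
        return "satellite"
    if not body.orbits_sun:
        return "not orbiting the Sun"
    if body.nearly_round and body.cleared_orbit:
        return "planet"
    if body.nearly_round:
        return "dwarf planet"
    return "small Solar System body"


bodies = [
    Body("Neptune", True, True, True),
    Body("Pluto", True, True, False),
    Body("Eris", True, True, False),
    Body("Ceres", True, True, False),
    Body("Vesta", True, False, False),
    Body("Moon", False, True, False, is_satellite=True),
]
for b in bodies:
    print(f"{b.name}: {classify(b)}")
```

Pluto, Eris and Ceres all come out as dwarf planets because they are round but share their orbital neighbourhoods; Vesta, not quite round, stays an ordinary asteroid.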

Tue 26 Feb 2019 06:27

I spent all of 2018 intending to blog, and not doing it. Sadly, this is an all too human situation. We intend to do things, when we can, when time permits, but we can't; time doesn't permit. Or at least this is one of those stories we tell ourselves. The truth is a little simpler: throughout 2018, my intention to blog was not strong enough for me to re-prioritize things in my day so that I would do it.

I had plenty to say. I continue to have plenty to say. I had plenty of important things to do, and that also continues to be true. Despite my other responsibilities, I am making time now, and I will continue to make time, every so often, to say things in this blog. I am being intentional about it.

To be intentional about something means to be deliberately purposeful: to make one's actions a directly chosen consequence of one's thoughtful decisions. For most people, myself included, life is full of input, distractions, demands, requests. It is easy to fill time without much effort. But if I am not intentional, it will be filled with reaction, not action: things that circumstances and prior commitments have chosen for me, not things I have chosen for myself.

Reaction is fine, even good and necessary. Many people, myself included, build up throughout their lives various important responsibilities: responsibilities to family, work, friends, communities. Responsibilities carry with them a commitment to react to the needs of others. This is well and good. But it is not enough, at least not for me. I realize that to be authentic, I have to consider carefully what is important to me, decide what to do about it, and then act on it. This is intentionality. I've decided to be intentional about blogging. Look for more blog entries in the coming weeks.

Tue 12 Dec 2017 13:07

A Way to Visualize Relative Masses of Things in the Solar System

Every so often we hear things in the news about the solar system: a mission to a planet or asteroid, talk of manned missions to Mars, arguments about whether Pluto is a planet or not. We tend to have pretty sketchy ideas of what most bodies in the solar system are like compared to Earth. The fact is that they're more wildly different in size and mass than we might think.

Let's look at mass. Imagine you decide to row across San Francisco Bay in a 12-foot aluminum rowboat. You pack a couple of suitcases, your 15-inch MacBook Pro (can't go without connectivity) and your iPad mini, you get in your rowboat and start rowing. As you row, you get hungry, so you pull out a Snickers bar. Now imagine that the USS Nimitz, a massive nuclear-powered aircraft carrier, passes by. There you are, in a rowboat with your two suitcases, your MacBook Pro, your iPad, and your Snickers bar, alongside a huge supercarrier.

Well, the mass of the Sun compared to the Earth is like that aircraft carrier compared to you and your boat. The mass of Mars is like your two suitcases. The mass of the Moon is like your 15-inch MacBook Pro, and the mass of Pluto is like your iPad mini. As for the Snickers bar, it's like Ceres, the largest of the asteroids.

Now let's suppose the massive wake of the aircraft carrier tips over your rowboat and leaves you in the water. Along comes a rich tech founder in his 70-foot yacht, and fishes you out. That yacht is like Jupiter, the largest planet.
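The analogy is just proportional scaling: pick a stand-in weight for Earth and multiply every other body's mass by the same factor. Here is a minimal Python sketch of that arithmetic. The masses are rounded reference values, and the 300 kg figure for you, the boat, and your gear is an assumption chosen for illustration, not a number taken from this post.

```python
# Approximate masses in kilograms (rounded reference values).
MASSES_KG = {
    "Sun": 1.989e30,
    "Jupiter": 1.898e27,
    "Earth": 5.972e24,
    "Mars": 6.417e23,
    "Moon": 7.342e22,
    "Pluto": 1.303e22,
    "Ceres": 9.39e20,
}

# Assumption: you, the rowboat, and your belongings weigh about 300 kg in total.
EARTH_STANDIN_KG = 300.0


def scaled_masses(earth_standin_kg: float) -> dict[str, float]:
    """Rescale every body so that Earth's mass maps to earth_standin_kg."""
    factor = earth_standin_kg / MASSES_KG["Earth"]
    return {name: mass * factor for name, mass in MASSES_KG.items()}


if __name__ == "__main__":
    # Print each body's mass on the "rowboat scale".
    for name, kg in scaled_masses(EARTH_STANDIN_KG).items():
        print(f"{name:8s} {kg:14,.3f} kg")
```

With that 300 kg assumption, the Sun comes out near 100,000 tonnes, Jupiter around 95 tonnes, Mars about 32 kg, the Moon about 3.7 kg, Pluto about 0.65 kg and Ceres about 47 g. Change `EARTH_STANDIN_KG` and the whole picture rescales together.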

So forget any mental images you might have of planets being something like the Sun, only a bit smaller and cooler. The sizes of things in the solar system are really quite different, and there is nothing, absolutely nothing, in the solar system that is anything quite like the Sun.

Thu 09 Mar 2017 12:58

A closer look at topuniversities.com's 2017 rankings for Computer Science.

The QS World University Rankings for 2017 are out, including the subject rankings. For the subject "Computer Science & Information Systems", the University of Toronto does very well, placing tenth.

A closer look at the top ten shows some expected leaders (MIT, Stanford, CMU, UC Berkeley) but some less expected ones, such as Oxford and Cambridge. These are superb universities with good Computer Science programs, but are their CS programs really among the ten best in the world?

A closer look at how the score is computed sheds some light on this question. The Overall Score is a combination of Academic Reputation, Citations per Paper, Employer Reputation, and H-index Citations. Academic Reputation and Employer Reputation are, in essence, the opinions of professors and employers respectively. While (hopefully) they are reasonably well-founded opinions, this is a subjective, not an objective, metric. On the other hand, Citations per Paper and H-index Citations are objective. So I looked at Citations per Paper and H-index Citations for the top forty schools on the 2017 QS Computer Science & Information Systems ranking.
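For readers unfamiliar with the two objective metrics, here is a minimal Python sketch of their textbook definitions, applied to a list of per-paper citation counts. It is not QS's exact procedure, which may differ in data source and normalization; it only shows why the two metrics can disagree.

```python
def citations_per_paper(citations: list[int]) -> float:
    """Mean citations per paper; 0.0 for an empty publication list."""
    return sum(citations) / len(citations) if citations else 0.0


def h_index(citations: list[int]) -> int:
    """Largest h such that at least h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for position, count in enumerate(ranked, start=1):
        if count >= position:
            h = position  # the top `position` papers all have >= position citations
        else:
            break
    return h


if __name__ == "__main__":
    few_stars = [100]       # one very highly cited paper
    broad_output = [5] * 5  # five moderately cited papers
    print(citations_per_paper(few_stars), h_index(few_stars))
    print(citations_per_paper(broad_output), h_index(broad_output))
```

A single paper with 100 citations scores 100.0 citations per paper but an h-index of only 1, while five papers with 5 citations each score 5.0 and an h-index of 5. A department with a few very highly cited papers can top the per-paper list without leading on h-index, which is one plausible reason the two orderings differ.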

By Citations per Paper, the top five of those forty are:

No MIT? This seems off. So let's look at the top five by H-index Citations:

That looks more reasonable. So let's look at the top twenty by H-index Citations:

That's a list that makes more sense to me. While it puts my department 14th instead of 10th, I think I have more confidence in the objectivity of this ordering than I do in the QS Overall Score ordering.

Wed 26 Oct 2016 10:41

On October 16th, 2016, Kelly Gotlieb, founder of the Department of Computer Science at the University of Toronto, passed away in his 96th year. I had the privilege of knowing him. Kelly was a terrific person: brilliant, kind, and humble. He was always willing to make time for people. He was a great thinker: his insights, particularly in the area of computing and society, were highly influential. I never fully realized how influential he was until we, here at the Department of Computer Science, created a blog, http://socialissues.cs.toronto.edu, in honour of the 40th anniversary of Social Issues in Computing, the seminal textbook he and Allan Borodin wrote in 1973 in the area of computers and society. I served as editor of the blog, and solicited contributions from the top thinkers in the field. So many of them responded, explaining to me how influential his ideas had been to them, and the blog was filled with insightful articles building in various ways upon the foundation that he and Allan had laid so many years before. I interviewed Kelly for the blog, and he was terrific: even in his nineties, he was full of insights. His mind active and enthusiastic, he was making cogent observations on the latest technologies, ranging from self-driving cars to automated medical diagnosis and treatment.

To me, Kelly epitomized the truth about effective teaching that is all too often missed: teaching is not just about information, teaching is about inspiration. Kelly was a truly inspiring teacher and thinker. He was completely authentic in everything he did, he was full of enthusiasm, and that enthusiasm was infectious. Conversations with Kelly so often left me energized and inspired, thinking in new directions that something he said had triggered, or leaping past obstacles that had previously seemed insurmountable. That is true teaching. Information without inspiration is simply fodder for forgetfulness, but teaching that inspires leads to new insights, integration of ideas, genuine understanding, and a better, clearer and sharper window on the world. Kelly inspired so many people for so many years. We are truly blessed that he was among us. He will be remembered.

Tue 23 Apr 2013 12:56

Handling Unsolicited Commercial Email

My email address is all over the web: at the time of writing this, a search on Google for my email address produces about 15,800 results. So anyone who wants to find my email address can do so easily. Many people or companies who want to sell me something send me email out of the blue. I get a great deal of such unsolicited commercial email, too much to read or pay adequate attention to. I simply delete these messages. Unfortunately, many sources of such email persist. So for some time now, I've enlisted the help of technology. I process my incoming email using procmail, a powerful piece of software that lets me script what happens to my email. When I receive unsolicited commercial email, if it is from a vendor or organization I don't have a relationship with, I will often add a procmail rule to discard, unseen, all future email messages from that vendor. I've got about 400 organizations (mostly vendors) in my discard list so far, and the list slowly grows. Am I still getting unsolicited commercial email from these sources? I am, but I am not seeing it. It's the same effect, really, as manual deletion (i.e. the message is deleted, unread), but it's easier for me, because I am not interrupted. But of course I think it would be better still if the email were not sent at all.
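I do this filtering with procmail rules, but the underlying logic is simple enough to sketch in Python for illustration. The sketch below is not procmail syntax and not my actual setup: it parses a raw message with the standard library's `email` package, extracts the From: address, and checks the sender's domain (or any subdomain of it) against a discard list. The domains in `DISCARD_DOMAINS` are hypothetical placeholders.

```python
from email import message_from_string
from email.utils import parseaddr

# Hypothetical discard list: sender domains of vendors with no prior relationship.
DISCARD_DOMAINS = {"example-vendor.com", "bulk-offers.example"}


def should_discard(raw_message: str, discard_domains: set[str] = DISCARD_DOMAINS) -> bool:
    """Return True if the From: address belongs to a domain on the discard list."""
    msg = message_from_string(raw_message)
    _, address = parseaddr(msg.get("From", ""))
    domain = address.rpartition("@")[2].lower()
    # Match the listed domain itself or any subdomain (e.g. mail.example-vendor.com).
    return any(domain == d or domain.endswith("." + d) for d in discard_domains)


if __name__ == "__main__":
    spam = "From: Sales <offers@mail.example-vendor.com>\nSubject: Deal\n\nBuy now!"
    friend = "From: Colleague <friend@utoronto.ca>\nSubject: Lunch?\n\nNoon?"
    print(should_discard(spam), should_discard(friend))
```

Matching on the domain rather than the full address means one entry covers every sending address a vendor uses, which is roughly how a list of about 400 organizations stays manageable.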

If you are a vendor with whom I do not have a pre-existing relationship, and you want to send me email introducing your products, please don't. I do not accept cold sales calls either. Instead, advertise effectively on the web, so that if I am looking for a product like yours, I can find you. If you must contact me directly, send me something by postal mail, where, unlike email, the communication does not have an interruptive aspect.

Thu 29 Nov 2012 00:00

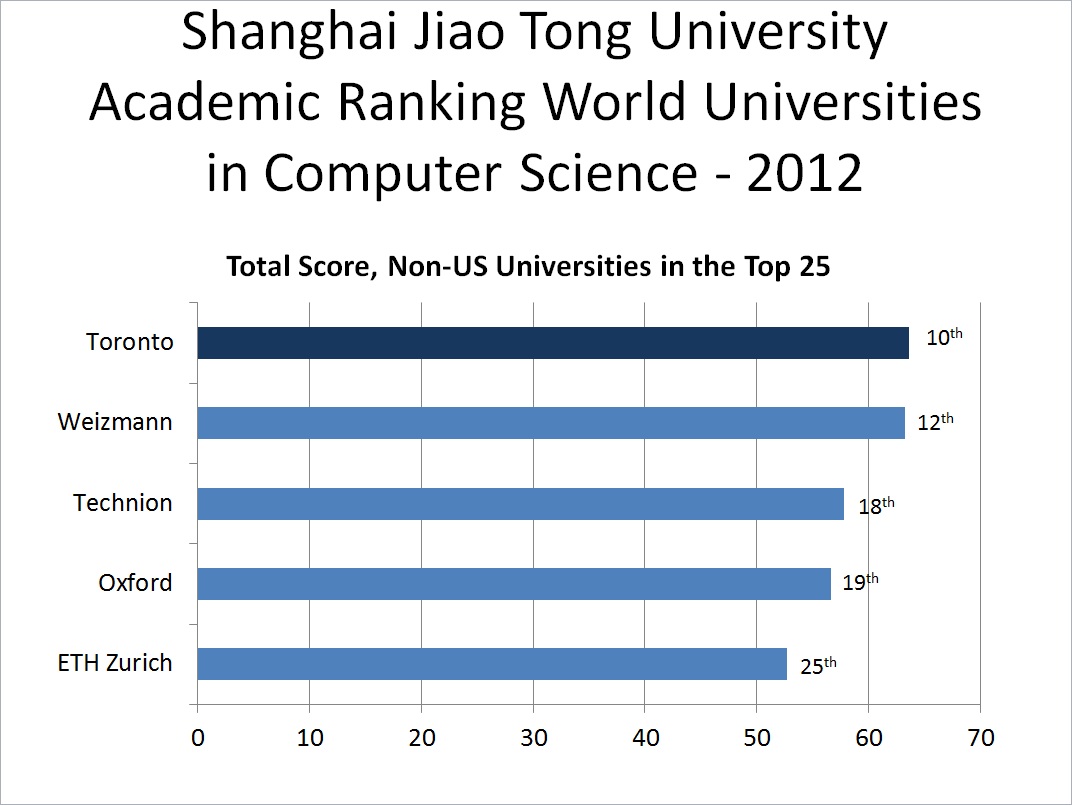

A closer look at the University of Toronto's international ranking in Computer Science.

International rankings of universities seem to be all the rage these days. The interest seems to be fed by three rankings of particular prominence that have emerged in the past decade. These are Shanghai Jiao Tong University's Academic Ranking of World Universities (sometimes known as ARWU, or simply as the "Shanghai Ranking"), Quacquarelli Symonds' QS World University Rankings, and the Times Higher Education World University Rankings. Part of the attractiveness of these rankings is that they can become a way of "keeping score", of seeing how one institution does in comparison to others.

My employer, the University of Toronto, does quite well in these rankings, particularly my department, Computer Science. The subject area of Computer Science is not ranked separately in the Times Higher Education World University Rankings (it's bundled together with Engineering), but in the other two, Toronto has consistently ranked in the top ten in the world each year in Computer Science, with only one exception.

This exception is recent, however, and worth a closer look. In the QS World University Rankings for Computer Science and Information Systems, Toronto dropped from 10th in 2011 to 15th in 2012. This big drop immediately raises all sorts of questions: has the quality of Toronto's Computer Science programme suddenly plummeted? Has the quality of Computer Science programmes at other universities suddenly soared? Or has the QS World University Rankings changed its methodology?

To answer this question, let's look at how other universities have changed from 2011 to 2012 on this ranking. Many (MIT, Stanford, Berkeley, Harvard, Oxford, Cornell, and others) stayed where they were. Others dropped precipitously: Cambridge University dropped from 3rd to 7th, UCLA from 8th to 12th, and Caltech plummeted from 7th to 27th. Some other universities went up: Carnegie Mellon University (CMU) went from 9th to 3rd, ETH Zurich from 11th to 8th, the National University of Singapore (NUS) from 12th to 9th, and the Hong Kong University of Science and Technology (HKUST) soared from 26th to 13th. Surely these curious and significant changes reflect a methodology change? But what?
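Tallying the moves makes the scale of the reshuffle easier to see. This small Python sketch uses only the 2011 and 2012 QS ranks given in this post and sorts the schools by the size of their move; positive numbers are places gained, negative numbers places lost.

```python
# QS Computer Science & Information Systems ranks (2011, 2012), as quoted in this post.
RANKS = {
    "Cambridge": (3, 7),
    "CMU": (9, 3),
    "Caltech": (7, 27),
    "ETH Zurich": (11, 8),
    "UCLA": (8, 12),
    "NUS": (12, 9),
    "Toronto": (10, 15),
    "HKUST": (26, 13),
}


def rank_changes(ranks: dict[str, tuple[int, int]]) -> list[tuple[str, int]]:
    """Places gained (positive) or lost (negative) per school, biggest moves first."""
    changes = {school: old - new for school, (old, new) in ranks.items()}
    return sorted(changes.items(), key=lambda item: abs(item[1]), reverse=True)


if __name__ == "__main__":
    for school, delta in rank_changes(RANKS):
        print(f"{school:10s} {delta:+d}")
```

Caltech's 20-place drop and HKUST's 13-place climb lead the list, well ahead of CMU's 6-place gain and Toronto's 5-place fall.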

The QS university rankings website, in the Methodology section, Academic subsection, reveals something of interest:

NEW FOR 2012 - Direct Subject Responses
Until 2010, the survey could only infer specific opinion on subject strength by aggregating the broad faculty area opinions of academics from a specific discipline. From the 2011 survey additional questions have been asked to gather specific opinion in the respondent's own narrow field of expertise. These responses are given a greater emphasis from 2012.

To understand this change, it needs to be recognized that the QS rankings rely heavily on the opinions of academics. A large number of academics around the world are surveyed: the QS rankings website indicates that in 2012, 46079 academic responses were received, of which 7.5% addressed Computer Science. Given its impact on the 2012 results for Computer Science, the seemingly modest change made in 2012, to weigh more heavily the opinions of academics about their own field, leads one to wonder about the regional distribution of academics in Computer Science in comparison to academics in other disciplines. One significant factor may be China.

In 1999, courses in the fundamentals of computer science became required in most Chinese universities, and by the end of 2007, China had nearly a million undergraduates studying Computer Science. While QS does not indicate the regional distribution by discipline of the academics whose opinions it consults, the surge in the number of Chinese computer scientists worldwide in the past decade almost certainly affects the regional distribution of academics in Computer Science as compared to other disciplines. As such, is it any surprise to see world universities prominent in China that possess strong Computer Science programmes (such as HKUST and NUS) climb significantly in the rankings, and others less prominent in China plummet? But if a world ranking of universities is so affected by regional shifts in those whose opinion is being solicited, how reliable is it as an objective gauge of the real quality of a given university?

Perhaps a more reliable gauge of quality can be found in the Shanghai ranking, which is not opinion-based, but relies on concrete indicators and metrics. On the Shanghai ranking, the University of Toronto ranked 10th in the world in Computer Science in each of 2010, 2011, and 2012. But what does this mean, concretely?

To answer these questions, we need to grapple with an important fact: in Computer Science, the US dominates. As a nation, the US has been enormously supportive of Computer Science ever since the field first existed, and as a result, it has become pre-eminent in computing. Nine of the top ten schools in the Shanghai ranking, and twenty of the top twenty-five, are in the US. For the University of Toronto to be one of the handful of universities outside the US to break into the top twenty-five, and the only one to break into the top ten, is a significant accomplishment. A chart is illustrative:

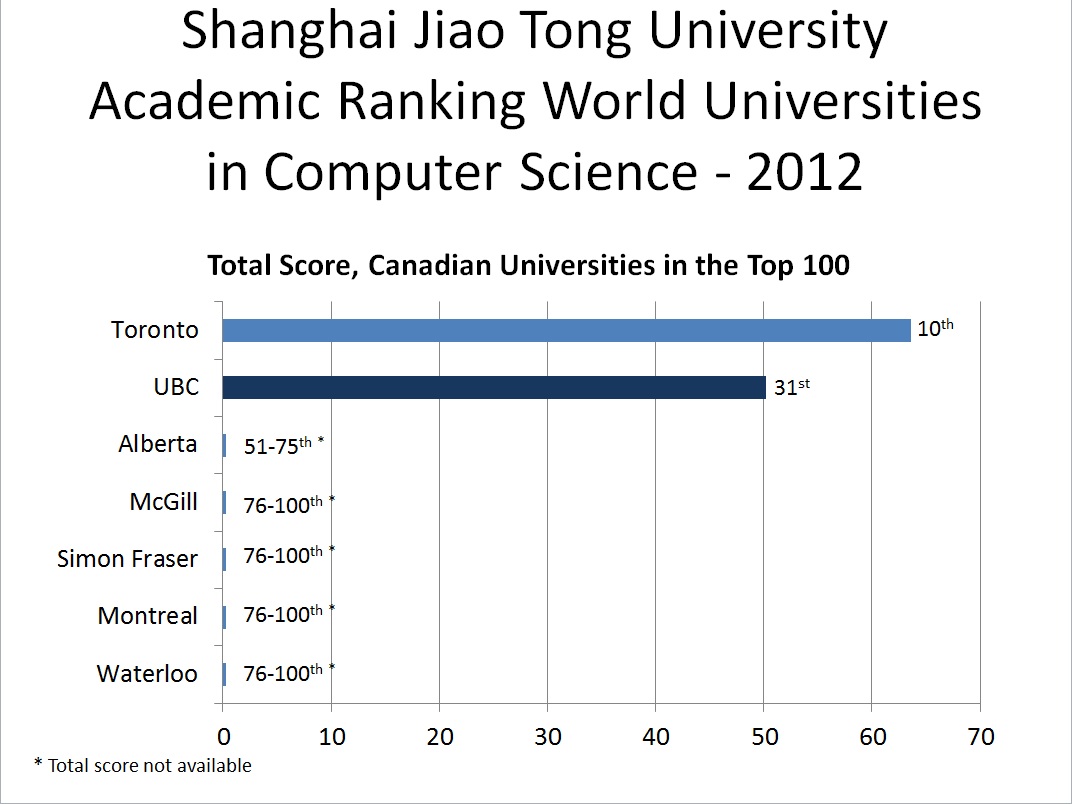

Of course, the University of Toronto is in Canada, so a comparison to other schools in Canada is also illustrative. For Computer Science, on the Shanghai ranking, there seems to be no close Canadian rival. In 2012, UBC comes closest, being only a few points short of breaking into the top 25, but all other Canadian schools rank well back:

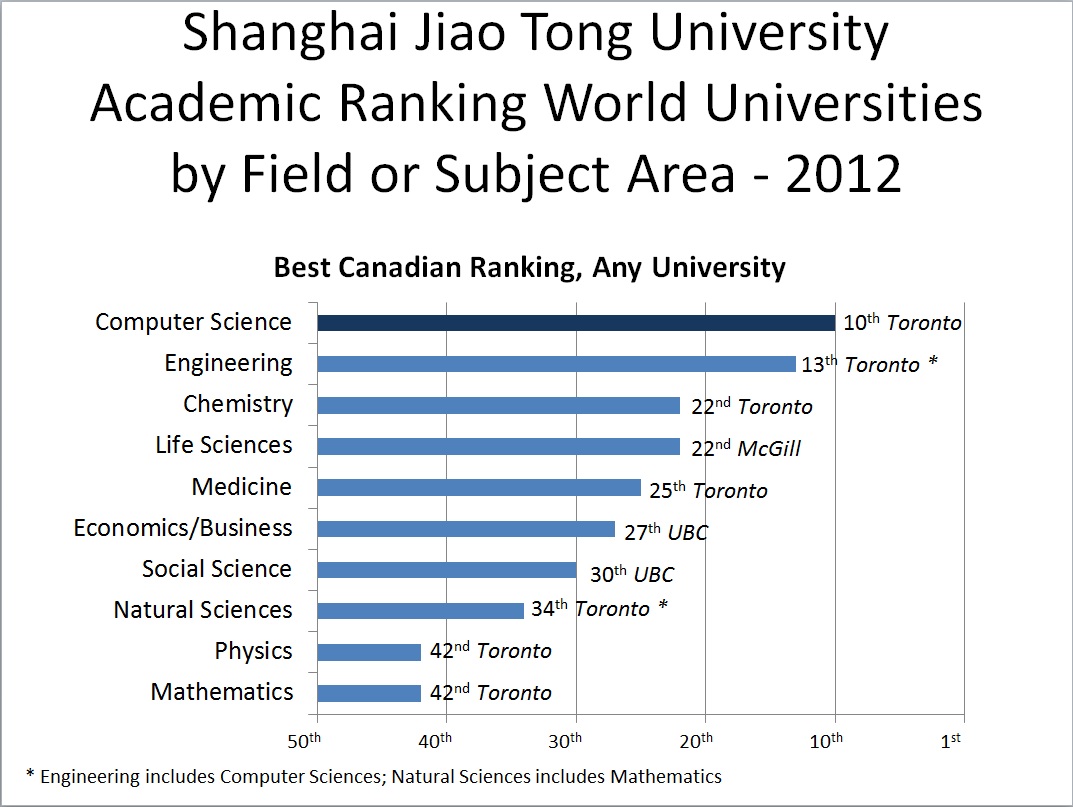

Even compared to other disciplines that have Shanghai rankings (only science, social science, and related disciplines seem to be ranked), Toronto's pre-eminence in Computer Science in Canada is striking:

From a score-keeping perspective, I think we can conclude that the University of Toronto is doing very well in Computer Science with respect to other universities in Canada, and it is one of the few non-US schools that can keep up with the US in this field.