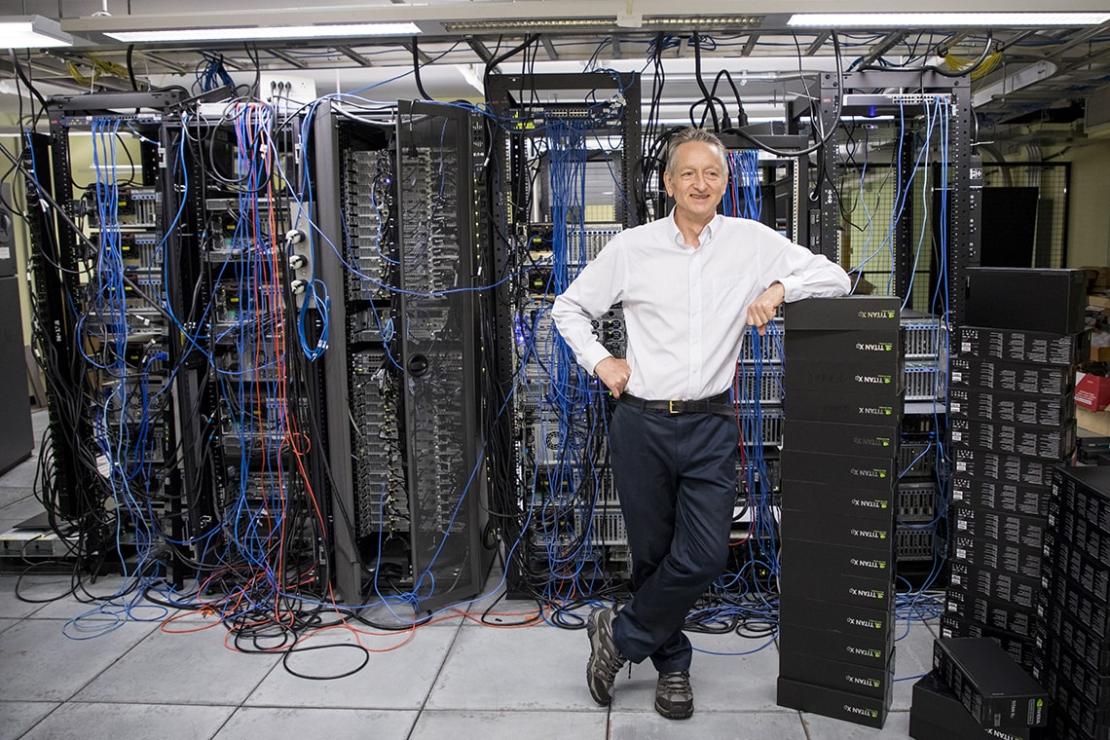

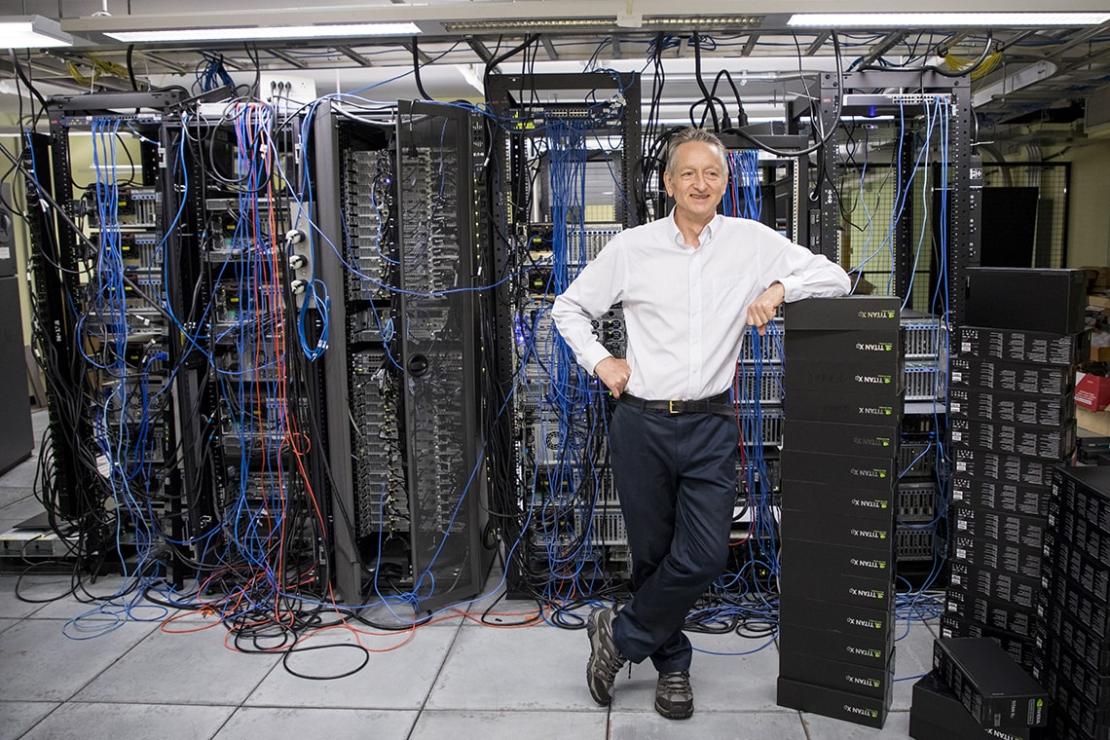

Photo by Johnny Guatto

Mon 06 Nov 2023 16:48

Research Computing at Computer Science Toronto and the Rise of AI

Until the beginning of 2009, the machine learning group worked primarily in Matlab on UNIX CPUs. In the 1990s, SGI and Sun multiprocessors were the dominant platforms; in the 2000s, the whole department transitioned to x86 multiprocessor servers running Linux. In the late 2000s, Nvidia invented CUDA, a way to use its GPUs for general-purpose computation rather than just graphics. By 2009, preliminary work elsewhere suggested that CUDA could be useful for machine learning, so we got our first Nvidia GPUs. The first was a Tesla-brand server GPU; at many thousands of dollars for a single-GPU system, it was too expensive for us to buy many. But the results were promising enough that we tried CUDA on Nvidia gaming GPUs: first the GTX 280 and 285 in 2009, then the GTX 480 and 580 later. The fact that CUDA ran on gaming GPUs made it possible for us to buy multiple GPUs, rather than have researchers compete for time on scarce Tesla cards.

Relu handled all the research computing for the ML group, sourcing GPUs and designing and building both workstation and server-class systems to hold them. Cooling was a real issue: GPUs, then and now, consume large amounts of power and run very hot, and Relu had to be quite creative with fans, airflow and power supplies to make everything work.

Happily, Relu's efforts were worth it: the move to GPUs resulted in 30x speedups for ML work in comparison to the multiprocessor CPUs of the time, and soon the entire group was doing machine learning on the GPU systems Relu built and ran for them. Their first major research breakthrough came quickly: in 2009, Hinton's student, George Dahl, demonstrated highly effective use of deep neural networks for acoustic speech recognition. But the general effectiveness of deep neural networks wasn't fully appreciated until 2012, when two of Hinton's students, Ilya Sutskever and Alex Krizhevsky, won the ImageNet Large Scale Visual Recognition Challenge using a deep neural network running on GTX 580 GPUs.

Geoff, Ilya and Alex's software won the ImageNet 2012 competition so convincingly that it created a furore in the AI research community. The software was released as open source; it was called AlexNet after Alex Krizhevsky, its principal author, and it allowed anyone with a suitable Nvidia GPU to duplicate the results. Their work was described in a seminal 2012 paper, ImageNet Classification with Deep Convolutional Neural Networks. Geoff, Alex and Ilya's startup company, DNNresearch, was acquired by Google early the next year, and soon Google Translate and a number of other Google technologies were transformed by their machine learning techniques. Meanwhile, at the ImageNet competition, AlexNet remained undefeated for a remarkable three years, until it was finally beaten in 2015 by a research team from Microsoft Research Asia. Ilya left Google a few years later to co-found OpenAI; as chief scientist there, he leads the design of OpenAI's GPT and DALL-E models and related products, such as ChatGPT, that are highly impactful today.

Meanwhile, Relu continued to provide excellent research computing support for the AI group at our department, including Machine Learning, and from 2017 to 2022 he also spent a portion of his time designing and building the research computing infrastructure for the Vector Institute, an AI research institute in Toronto where Hinton serves as Chief Scientific Advisor. Relu continues to this day to support both the department's AI group and Hinton's own ongoing AI research, including Hinton's December 2022 paper proposing a new Forward-Forward machine learning algorithm as an improved model for the way the human brain learns.