Hi, I'm a research scientist at Databricks/MosaicML, where I work on pre-training Large Language Models (LLMs). I graduated from the University of Toronto with an MSc, where I worked in Sanja Fidler's group on machine learning and computer vision research. Previously, I was an EE undergrad at the University of Waterloo and held internships at Cohere, Cerebras, Uber ATG and Intel. When I'm not ssh'd into a GPU cluster, you can find me skiing at the local (or not-so-local) slopes or at the hockey rink.

doubovs at cs dot toronto dot edu / CV / Google Scholar / Twitter / GitHub

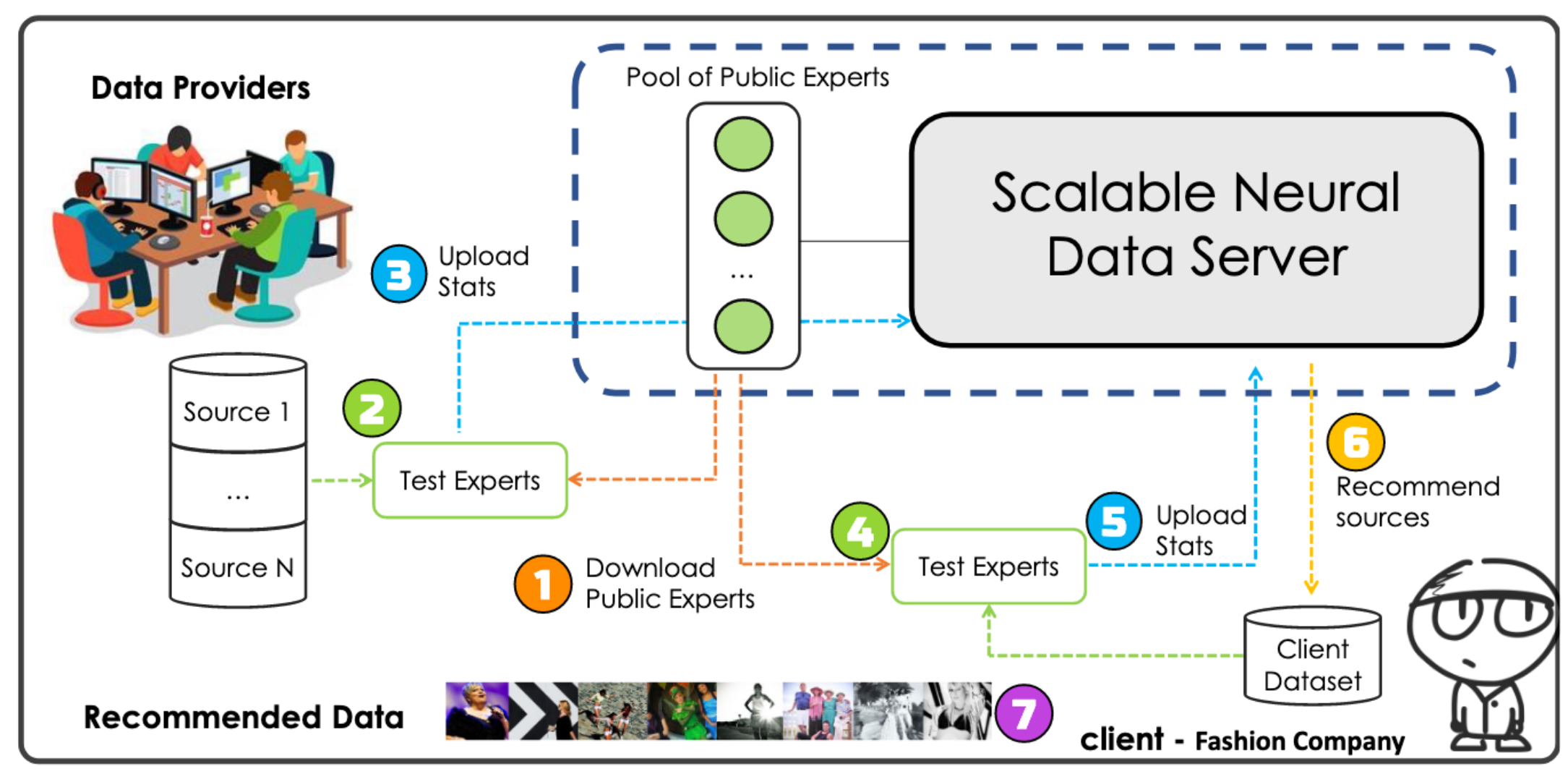

Tianshi Cao*, Sasha Doubov*, David Acuna, Sanja Fidler

NeurIPS, 2021

We present a scalable system for recommending pre-training data for transfer learning in computer vision models, which works with out-of-domain distributions such as medical and satellite data.

Links: arxiv / openreview / NeurIPS 2021 talk (15 min)

Julieta Martinez, Sasha Doubov, Jack Fan, Ioan Andrei Bârsan, Shenlong Wang, Gellért Máttyus, Raquel Urtasun

IROS, 2020 (Best Application Paper Finalist)

We introduce a large-scale dataset for global localization in the context of self-driving cars, and show that a simple voxelization + CNN method is competitive for LiDAR-based localization.

Links: arxiv / teaser video (90 s) / IROS talk (15 min)

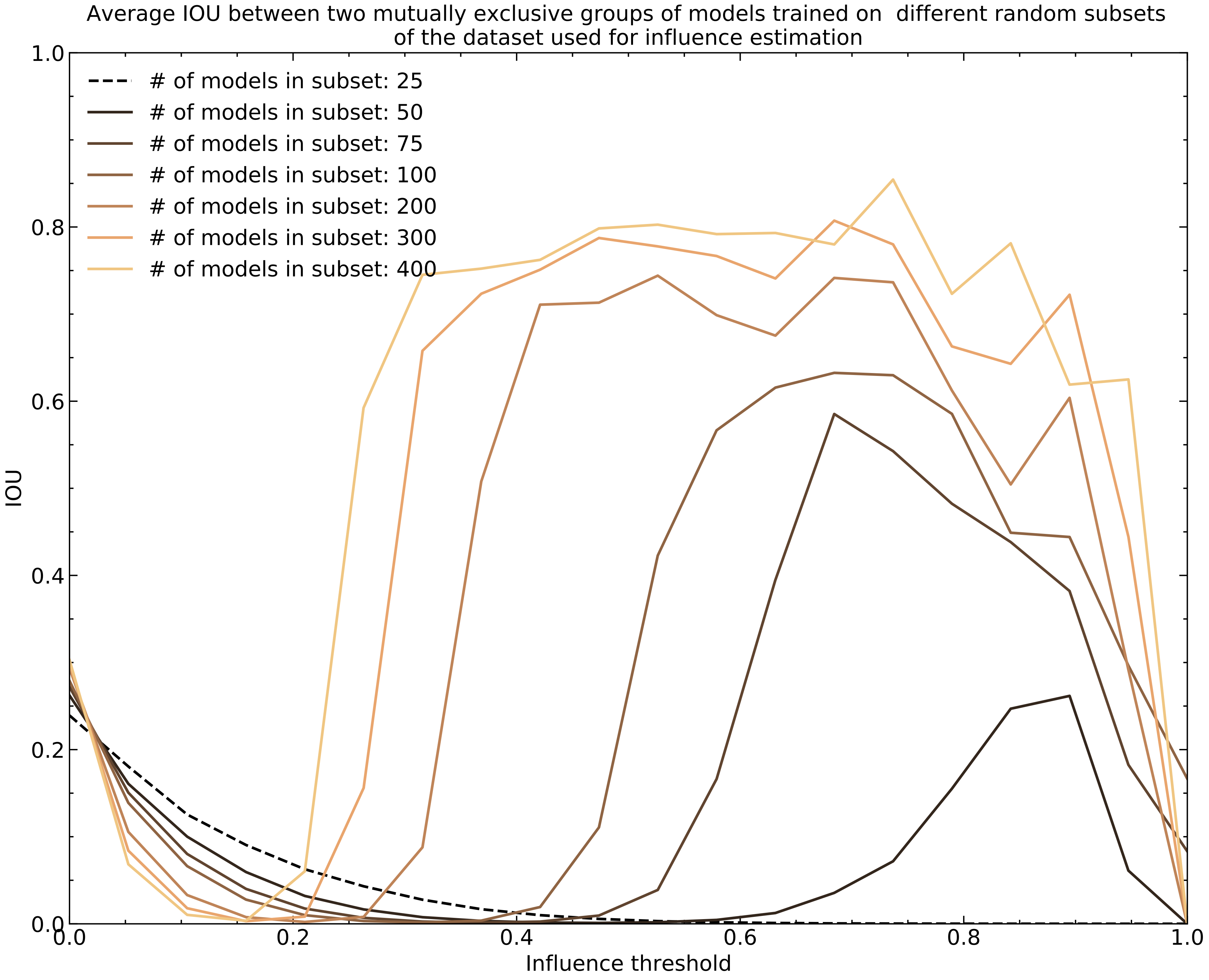

Sasha Doubov, Tianshi Cao, David Acuna, Sanja Fidler

I Can't Believe It's Not Better Workshop at NeurIPS 2022

In this work, we explore the scalability and limitations of modern influence estimators for neural networks. We find that a large number of models are needed for accurate influence estimation, but the exact number is difficult to quantify and depends on factors such as training nondeterminism and test example difficulty.

Links: openreview / pdf

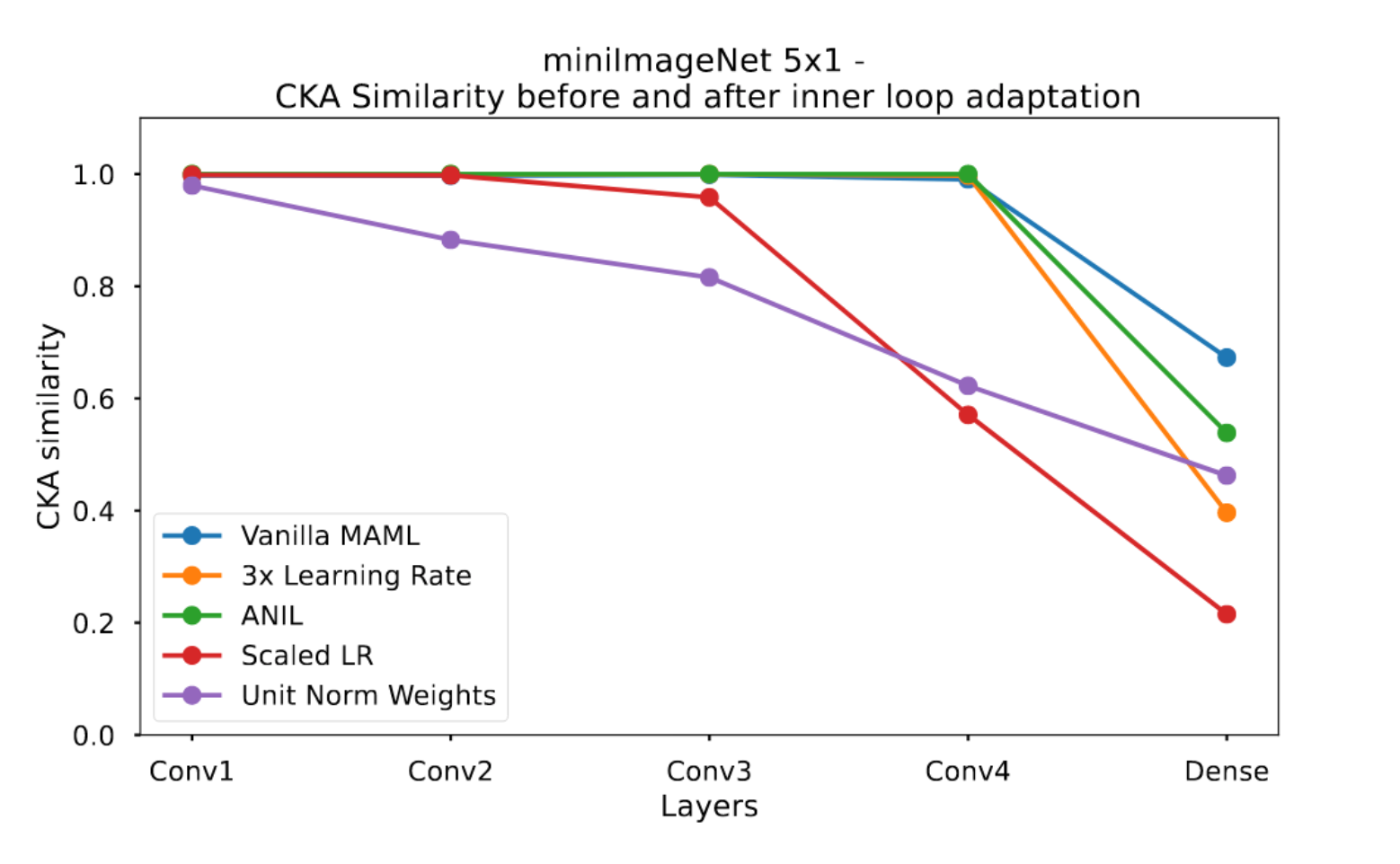

Alexander Wang*, Gary Leung*, Sasha Doubov*

NeurIPS Workshop on Meta-Learning, 2021

We study how BatchNorm's implicit learning rate decay effect interacts with meta-learning methods. In particular, we examine how it affects the feature reuse/rapid adaptation of MAML.

Links: openreview / pdf

University of Toronto

Sept 2020-April 2022

MSc in Computer Science

GPA: 3.93

Advisor: Sanja Fidler

University of Waterloo

Sept 2015-2020

Bachelor in Electrical Engineering

GPA: 94%

Top student in graduating stream

Databricks/MosaicML

Research Scientist

Aug 2023-

Member of the LLM pre-training team.

MosaicML

Research Scientist Intern

April 2023-Aug 2023

Worked on hyperparameter tuning methods for LLMs, and on training and evaluating domain-specific models.

Cohere

ML Intern (Model Efficiency Team)

October 2022-

Worked on structured pruning to accelerate inference and training of Large Language Models.

Cerebras Systems

Research Intern (ML Algorithms)

April 2022-August 2022

Worked on unstructured sparsity research to accelerate neural network training.

Uber ATG

Research Intern

Sept 2019-Dec 2019, Jan 2019-July 2019

Worked on retrieval-based localization for self-driving cars using deep learning. Curated a large-scale dataset, including data processing with Spark.

Intel FPGA

Software Engineering Intern (Deep Learning Accelerator Team)

May 2018-Aug 2018

Prototyped a CI/CD pipeline for continuously checking code using Jenkins.

University of Waterloo

Research Assistant

Supervisor: Srinivasan Keshav

Jan 2018-Dec 2018

Developed a system for overhead person detection in an office using deep learning/traditional CV to optimize lighting for energy savings.

Intel FPGA

Software Engineering Intern (Devices & Features Infrastructure)

Sep 2017-Dec 2017

Developed a tool for modeling FPGA device RTL in a graph representation.

University of Waterloo

Research Assistant

Supervisor: Oleg Michailovich

May 2017-Dec 2017

Developed a pre-processing pipeline for MRI images in a dataset used for Alzheimer's Disease research.

Thanks to the great theme by Jon Barron.