Introduction

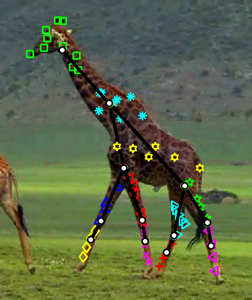

Humans demonstrate a remarkable ability to parse complicated motion sequences into their constituent structures and motions. We investigate this problem, attempting to learn the structure of one or more articulated objects, given a time-series of two-dimensional feature positions. We model the observed sequence in terms of "stick figure" objects, under the assumption that the relative joint angles between sticks can change over time, but their lengths and connectivities are fixed. We formulate the problem in a single probabilistic model that includes multiple sub-components: associating the features with particular sticks, determining the proper number of sticks, and finding which sticks are physically joined. We test the algorithm on challenging datasets of 2D projections of optical human motion capture and feature trajectories from real videos.

Papers

- Learning Articulated Structure and Motion

David Ross, Daniel Tarlow, and Richard Zemel. International Journal of Computer Vision, 88 (2), 2010. [PDF] - Unsupervised Learning of Skeletons from motion

David Ross, Daniel Tarlow, and Richard Zemel. 10th European Conference on Computer Vision (ECCV 2008), 2008. [PDF] - Learning Articulated Skeletons From Motion

David Ross, Daniel Tarlow, and Richard Zemel. Workshop on Dynamical Vision at ICCV, 2007. [PDF]

Videos

Code and Data

The human motion capture data used in this project was provided from the CMU Graphics Lab Motion Capture Database, and the Biomotion Lab, Queens University. The giraffe data is available here: giraffe.zip.