
I am a first-year graduate student in the Machine Learning Group at the University of Toronto and the Vector Institute, studying under Jimmy Ba and Roger Grosse. I received my bachelor's degree from the University of Toronto in 2017.


My research focuses on developing efficient learning algorithms for deep neural networks. In particular, I study how to regularize deep neural networks so that they can continually learn a number of tasks and generalize better.


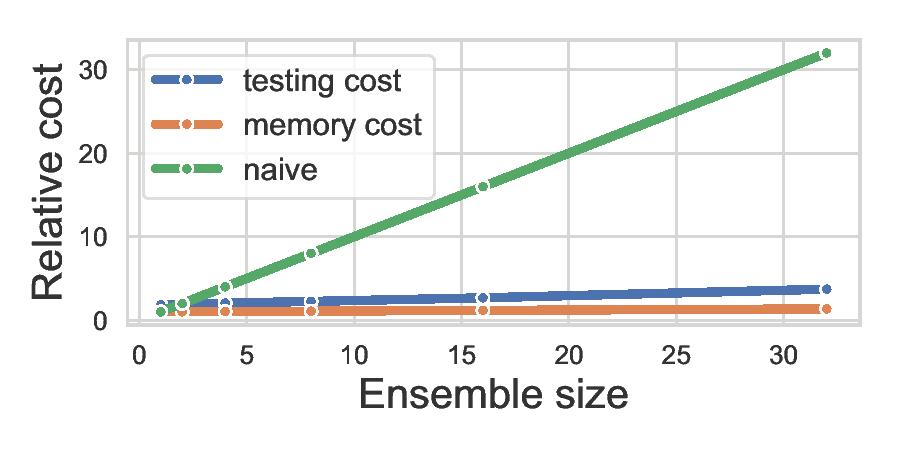

Yeming Wen, Dustin Tran & Jimmy Ba. 8th International Conference on Learning Representations (ICLR), 2020; Bayesian Deep Learning Workshop at NeurIPS, 2019. How to ensemble deep neural networks efficiently in both computation and memory.


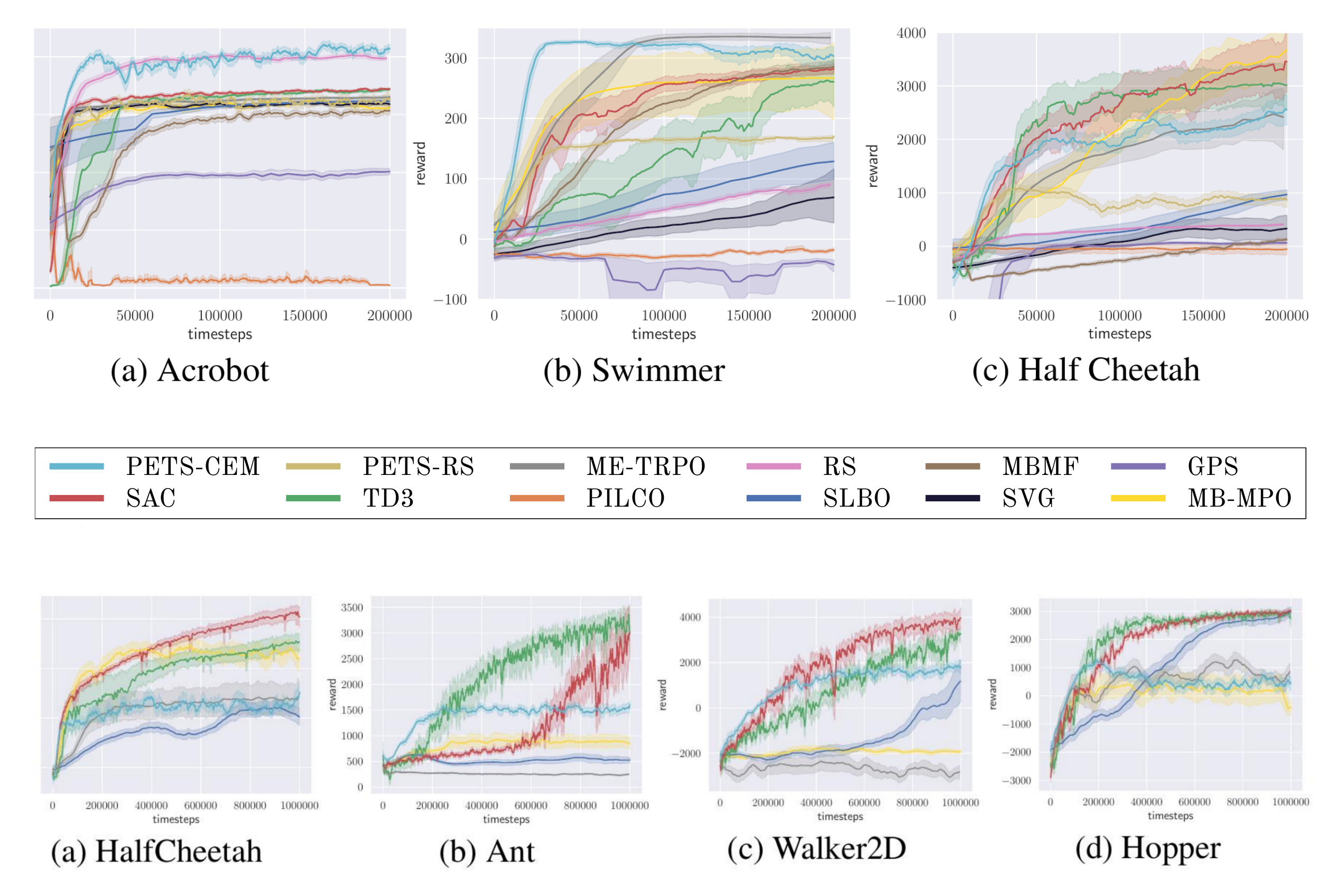

Tingwu Wang, Xuchan Bao, Ignasi Clavera, Jerrick Hoang, Yeming Wen, Eric Langlois, Shunshi Zhang, Guodong Zhang, Pieter Abbeel & Jimmy Ba. arXiv, 2019. Benchmarking several commonly used model-based algorithms.


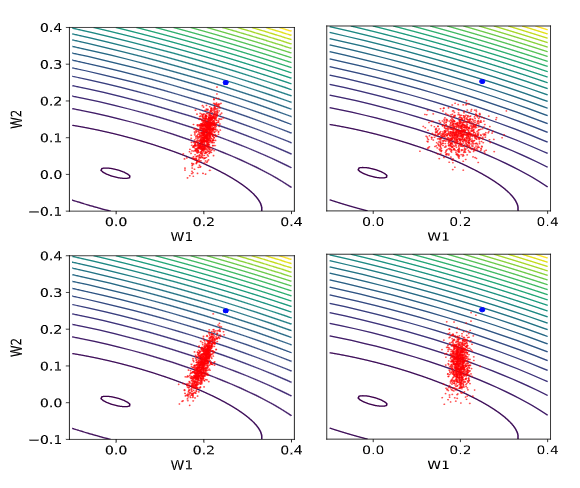

Yeming Wen*, Kevin Luk*, Maxime Gazeau*, Guodong Zhang, Harris Chan & Jimmy Ba. 23rd International Conference on Artificial Intelligence and Statistics (AISTATS), 2020. How to add noise with the correct covariance structure to gradients so that large-batch training generalizes better without longer training.


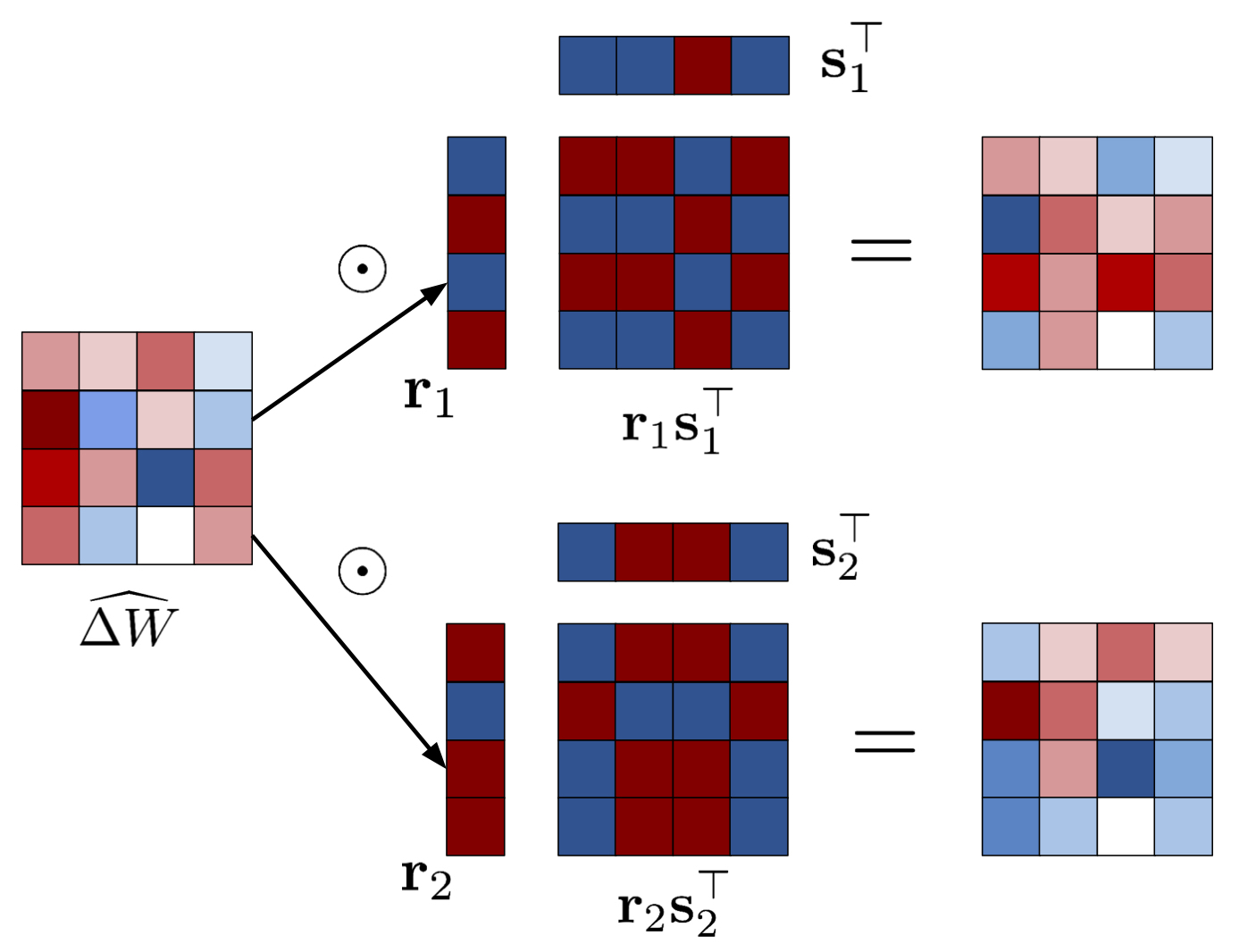

Yeming Wen, Paul Vicol, Jimmy Ba, Dustin Tran & Roger Grosse. 6th International Conference on Learning Representations (ICLR), 2018. How to efficiently make pseudo-independent weight perturbations on mini-batches in evolution strategies and variational BNNs, as dropout does for activation perturbations.
