Satya Krishna Gorti

MSc. in Applied Computing

satyag [at] cs [dot] toronto [dot] edu

I graduated with an MSc. in Applied Computing from the University of Toronto in 2018. My main interests are in Machine Learning, Deep Learning, and Computer Vision. I am currently a Senior ML Research Scientist at Layer6 AI, where I work on Representation Learning and Multi-Modal Understanding. I also lead the ML Frameworks team, which builds frameworks for training, deploying, and monitoring ML models in production on the cloud. Before this, I was a Research Intern at Uber ATG, working on multi-object tracking using LIDAR and RADAR sensors for self-driving vehicles.

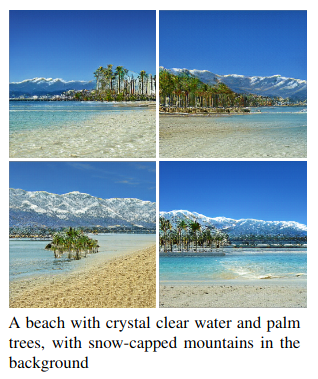

| Ground Truth Caption | Generated Image | Generated Caption |
|---|---|---|
| the flower has long yellow petals that are thin and a yellow stamen |  | this flower has petals that are yellow and very thin |
| there are many long and narrow floppy pink petals surrounding many red stamen and a green stigma on this flower |  | this flower has petals that are red with pointed tips |

You can find my full resume here.