[Interpretability, Healthcare] Extracting Clinician's Goals by What-if Interpretable Modeling

Although reinforcement learning (RL) has had tremendous success in many fields, applying RL to real-world settings such as healthcare is challenging when the reward is hard to specify and no exploration is allowed.

In this work, we focus on recovering clinicians' rewards in treating patients.

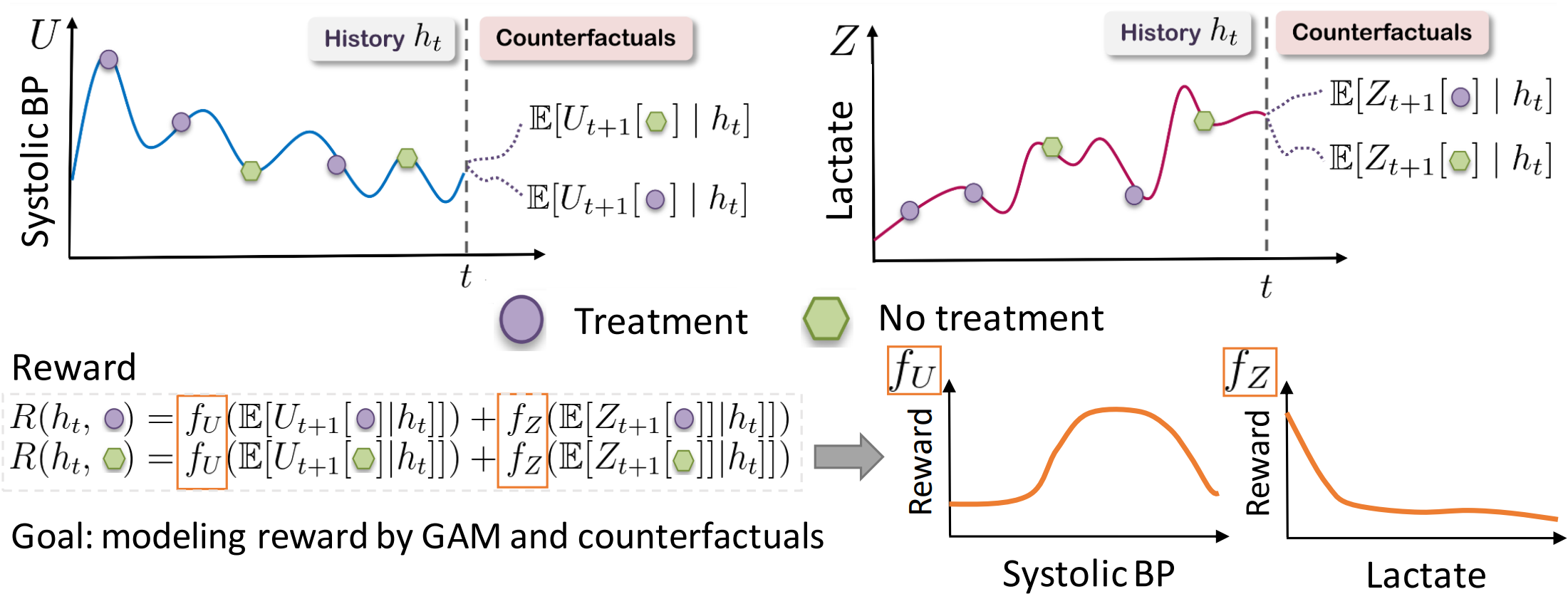

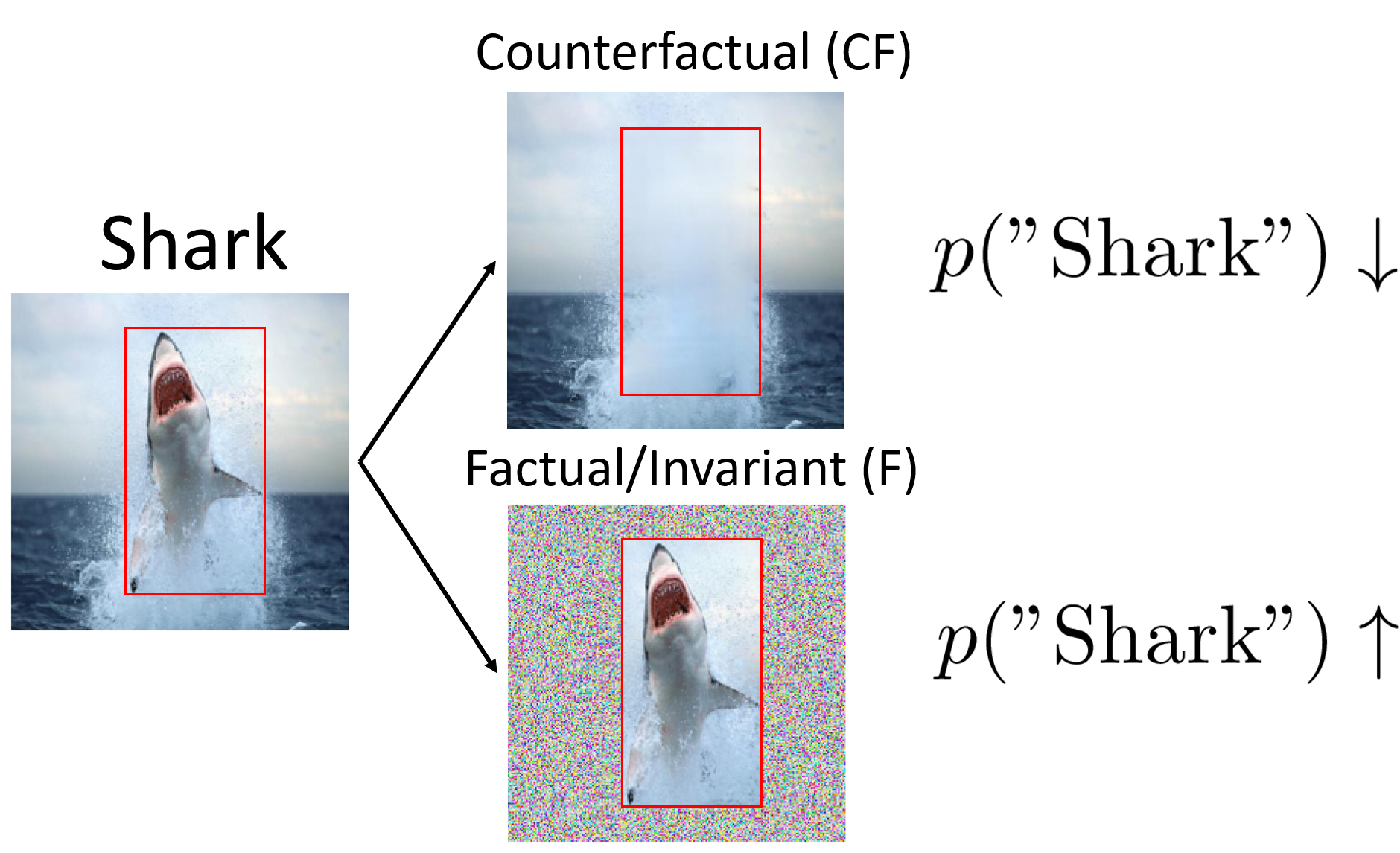

We incorporate what-if reasoning to explain clinicians' actions based on future outcomes.

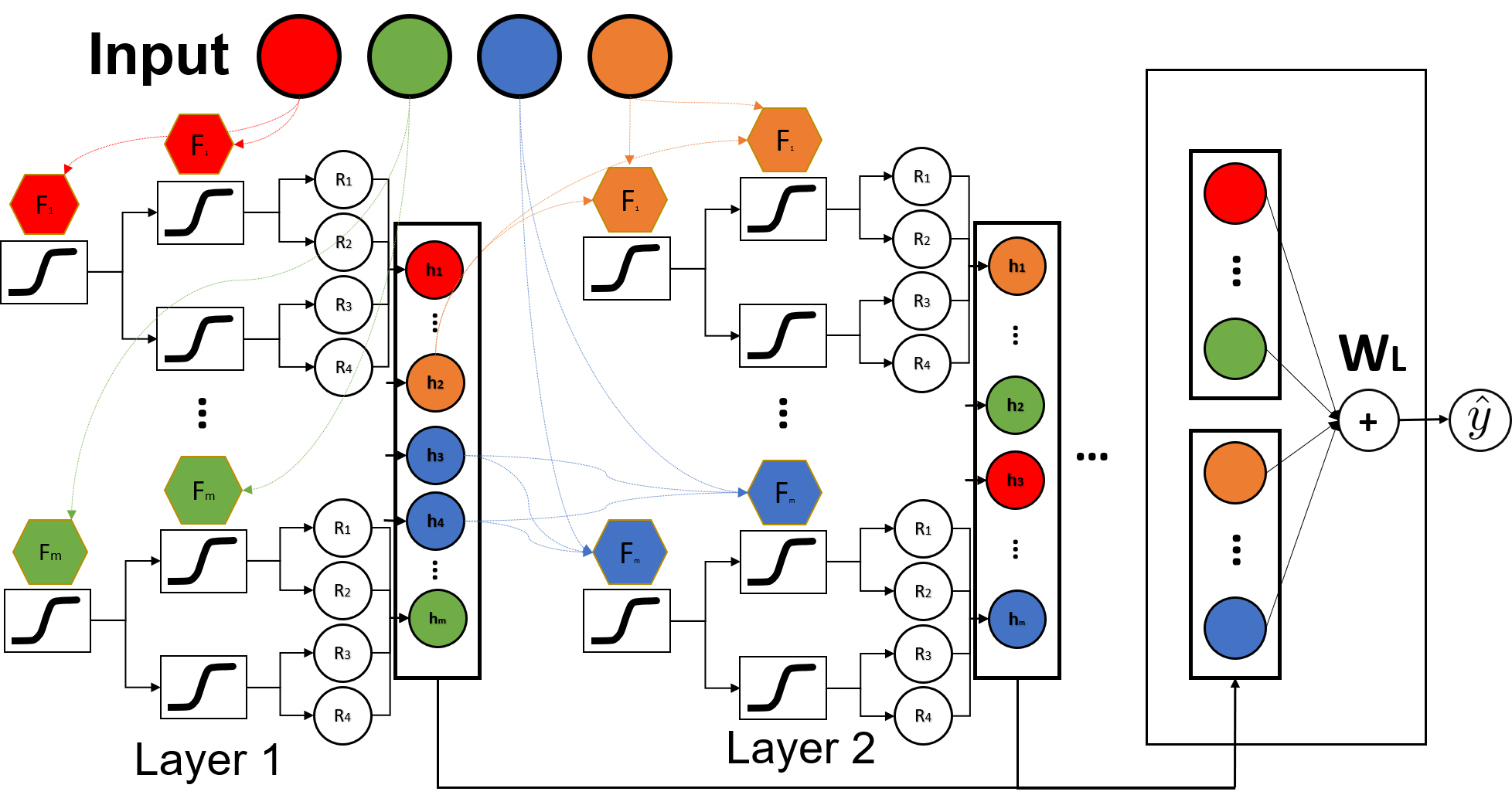

We use generalized additive models (GAMs), a class of accurate and interpretable models, to recover the reward.

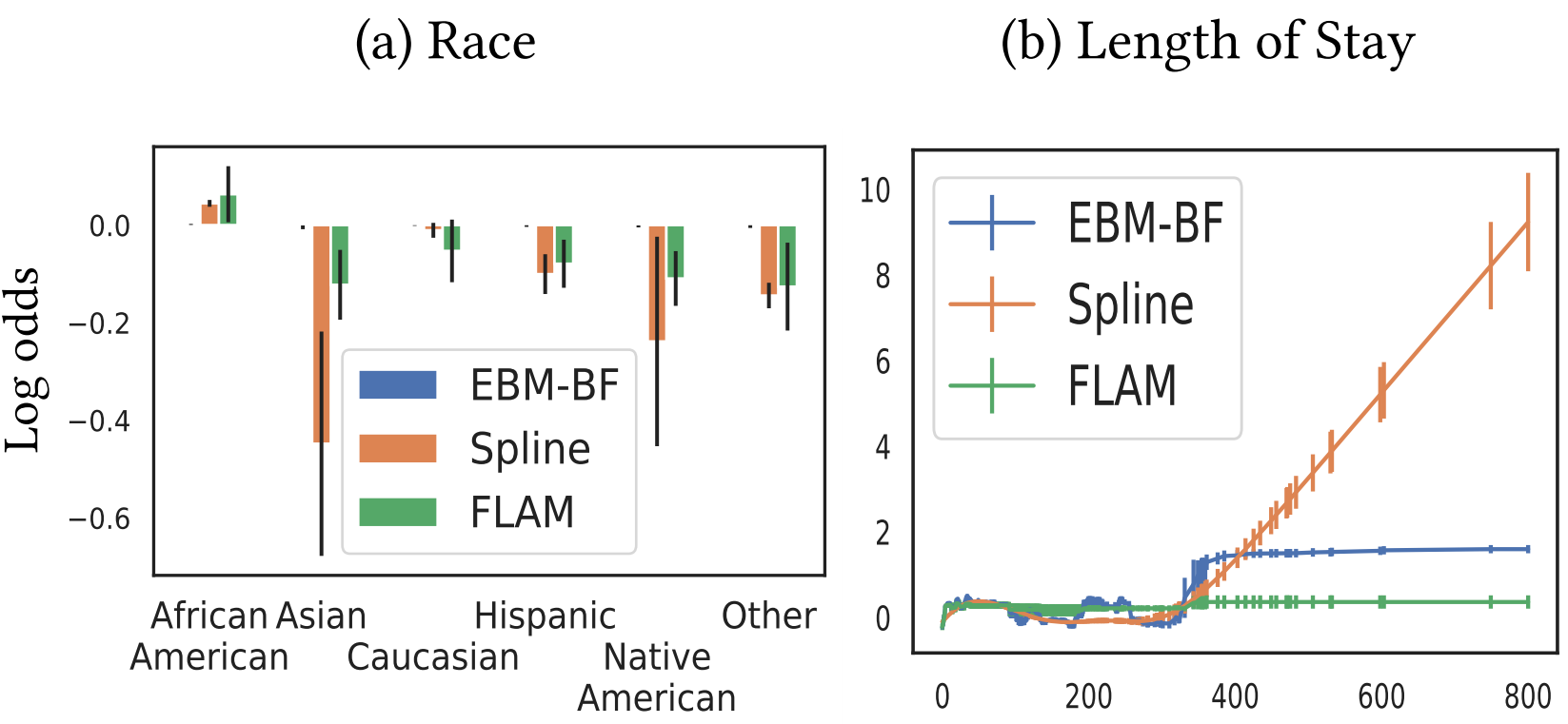
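To make the additive structure of a GAM concrete, here is a minimal sketch of a reward of the form `intercept + sum_j f_j(s_j)`, where each `f_j` depends on one feature only. This is an illustration under assumptions, not the model from the paper: the class name `AdditiveReward`, the piecewise-constant shape functions, the feature names `x1` and `x2`, and all numeric values are hypothetical.

```python
from bisect import bisect_right


class AdditiveReward:
    """Toy GAM-style reward: intercept + sum of per-feature shape functions.

    Hypothetical sketch; each shape function is piecewise-constant.
    """

    def __init__(self, intercept, shape_functions):
        # shape_functions maps feature name -> (bin_edges, bin_values),
        # with len(bin_values) == len(bin_edges) + 1.
        self.intercept = intercept
        self.shape_functions = shape_functions

    def contribution(self, feature, value):
        # Look up the bin that `value` falls into; a value equal to an
        # edge belongs to the bin on its right.
        edges, values = self.shape_functions[feature]
        return values[bisect_right(edges, value)]

    def reward(self, state):
        # The reward is a plain sum, so each feature's effect can be
        # inspected on its own.
        return self.intercept + sum(
            self.contribution(name, value) for name, value in state.items()
        )


# Made-up shape functions for two hypothetical features.
model = AdditiveReward(
    intercept=0.0,
    shape_functions={
        "x1": ([1.0, 2.0], [-1.0, 0.5, -0.2]),
        "x2": ([0.0], [0.0, 1.0]),
    },
)
state = {"x1": 1.5, "x2": 3.0}
total = model.reward(state)
parts = {name: model.contribution(name, value) for name, value in state.items()}
```

Because the reward decomposes into one term per feature, `parts` shows directly how much each feature contributes to `total`, which is the property that makes this model class interpretable.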

In both simulation and a real-world hospital dataset, we show that our model outperforms baselines.

Finally, our model's explanations match several clinical guidelines for treating patients, whereas we find that the previously used linear model often contradicts them.

TLDR: We extract clinicians' treatment goals by interpretable GAM modeling and what-if reasoning

Chun-Hao Chang, George Alexandru Adam, Rich Caruana, Anna Goldenberg

Submitted to ICML 2022