In this project, you will learn to work with TensorFlow, Google's new machine learning framework.

You will work with the same dataset as in Project 1, but you should experiment with resizing the input to a larger size and using colour information; you can also experiment with using larger bounding boxes. Unlike in Project 1, you should check the SHA-256 hashes and keep only the faces whose hashes match. Set aside 70 images per actor for the training set, and use the rest for the test and validation sets.
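The hash check and the split can be sketched as follows. This is a minimal stdlib sketch under several assumptions:

- the expected SHA-256 hex digest is available for each downloaded image;
- the hash is computed over the raw downloaded bytes, before cropping;
- the leftover images are divided evenly between validation and test.

The `(actor, image_bytes, expected_sha256)` record format and the function names are hypothetical.

```python
import hashlib
import random


def sha256_matches(image_bytes, expected_hex):
    """True if the SHA-256 digest of the raw downloaded bytes matches the expected one."""
    return hashlib.sha256(image_bytes).hexdigest() == expected_hex.lower()


def split_actor_images(records, n_train=70, seed=0):
    """records: iterable of (actor, image_bytes, expected_sha256_hex) tuples (hypothetical format).

    Drops images whose hash does not match, then puts n_train images per actor into the
    training set and splits the remainder evenly (an assumption) into validation and test.
    """
    by_actor = {}
    for actor, data, expected in records:
        if sha256_matches(data, expected):
            by_actor.setdefault(actor, []).append(data)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, valid, test = {}, {}, {}
    for actor in sorted(by_actor):
        images = list(by_actor[actor])
        rng.shuffle(images)
        rest = images[n_train:]
        half = len(rest) // 2
        train[actor] = images[:n_train]
        valid[actor] = rest[:half]
        test[actor] = rest[half:]
    return train, valid, test
```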

I am providing two pieces of code: code for training a single-hidden-layer fully-connected network with TF and code for running AlexNet on images. We are also providing a TensorFlow translation of the original Deep Dream code.

Part 1

Using TensorFlow, build a system for classifying faces of the 6 actors from Project 1. Use a fully-connected neural network with a single hidden layer. In your report, include the learning curves for the training, validation, and test sets, and the final classification performance on the test set. Include a text description of your system. In particular, describe how you preprocessed the input and initialized the weights, what activation function you used, and the exact architecture of the network you selected. I obtained about 80-85% accuracy using a single-hidden-layer network; you might get slightly worse results.

You may use my code.
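The quantities the report asks about (weight initialization, activation function, architecture, learning curves) can be made concrete with a small NumPy sketch of a single-hidden-layer classifier. This is not the handout TensorFlow code. The following are illustrative assumptions:

- the tanh activation;
- the Gaussian initialization scale;
- full-batch gradient descent;
- all function and parameter names.

In TensorFlow, the hand-written gradients below would be computed for you.

```python
import numpy as np


def init_params(n_in, n_hidden, n_out, init_std=0.01, seed=0):
    # Small zero-mean Gaussian weights break the symmetry between hidden units;
    # biases start at zero. init_std is a tunable assumption, not a prescribed value.
    rng = np.random.RandomState(seed)
    return {"W0": rng.normal(0.0, init_std, (n_in, n_hidden)), "b0": np.zeros(n_hidden),
            "W1": rng.normal(0.0, init_std, (n_hidden, n_out)), "b1": np.zeros(n_out)}


def forward(params, X):
    """X: (N, n_in) flattened images. Returns hidden activations and softmax probabilities."""
    H = np.tanh(X.dot(params["W0"]) + params["b0"])
    Z = H.dot(params["W1"]) + params["b1"]
    Z = Z - Z.max(axis=1, keepdims=True)  # avoid overflow in exp
    P = np.exp(Z)
    return H, P / P.sum(axis=1, keepdims=True)


def gd_step(params, X, Y, lr, lam=0.0):
    """One full-batch gradient step on mean cross-entropy plus lam/2 * ||W||^2.
    Y: (N, n_out) one-hot labels."""
    H, P = forward(params, X)
    dZ = (P - Y) / X.shape[0]                     # gradient of mean cross-entropy w.r.t. logits
    dH = dZ.dot(params["W1"].T) * (1.0 - H ** 2)  # tanh'(a) = 1 - tanh(a)^2
    grads = {"W1": H.T.dot(dZ) + lam * params["W1"], "b1": dZ.sum(axis=0),
             "W0": X.T.dot(dH) + lam * params["W0"], "b0": dH.sum(axis=0)}
    for k in params:
        params[k] -= lr * grads[k]


def accuracy(params, X, Y):
    return np.mean(forward(params, X)[1].argmax(axis=1) == Y.argmax(axis=1))


def train(params, X_tr, Y_tr, eval_sets, epochs, lr, lam=0.0):
    """Returns a learning curve: one row of accuracies (one per eval set) per epoch."""
    curve = []
    for _ in range(epochs):
        gd_step(params, X_tr, Y_tr, lr, lam)
        curve.append([accuracy(params, X, Y) for X, Y in eval_sets])
    return curve
```

Passing `eval_sets=[(X_train, Y_train), (X_valid, Y_valid), (X_test, Y_test)]` gives the three columns needed for the training, validation, and test learning curves.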

Part 2

Extract the values of the activations of AlexNet on the face images, and use them as features to perform face classification: train a fully-connected neural network that takes the activations of the units in an AlexNet layer as inputs and outputs the name of the person. In your report, include a description of the system you built and its performance, as in Part 1. It is possible to improve on the results of Part 1 by reducing the error rate by at least 30%. I recommend starting out by using only the conv4 activations (for Part 2, using only conv4 is sufficient).

You should modify the AlexNet code so that the image is put into a placeholder variable.
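Once the image is fed through a placeholder, in TensorFlow 1.x the conv4 activations for a batch can be obtained by evaluating the conv4 tensor with `sess.run` and a `feed_dict`. The NumPy sketch below shows one way (an assumption, not a requirement) to turn those activations into inputs for the fully-connected network: flatten each activation map, then standardize every feature with statistics computed on the training set only. The 13x13x384 conv4 shape assumes a 227x227 input; check `acts.shape` against your own copy of the handout code.

```python
import numpy as np


def conv4_to_features(acts, mean=None, std=None, eps=1e-8):
    """acts: (N, 13, 13, 384) conv4 activations for N face images.

    Returns flattened, standardized features and the (mean, std) used. Compute
    mean/std on the training set, then pass them in for the validation and test sets.
    """
    X = acts.reshape(acts.shape[0], -1).astype(np.float64)  # (N, 13*13*384)
    if mean is None:
        mean, std = X.mean(axis=0), X.std(axis=0)
    # eps keeps constant (e.g. always-zero) units from dividing by zero
    return (X - mean) / (std + eps), mean, std
```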

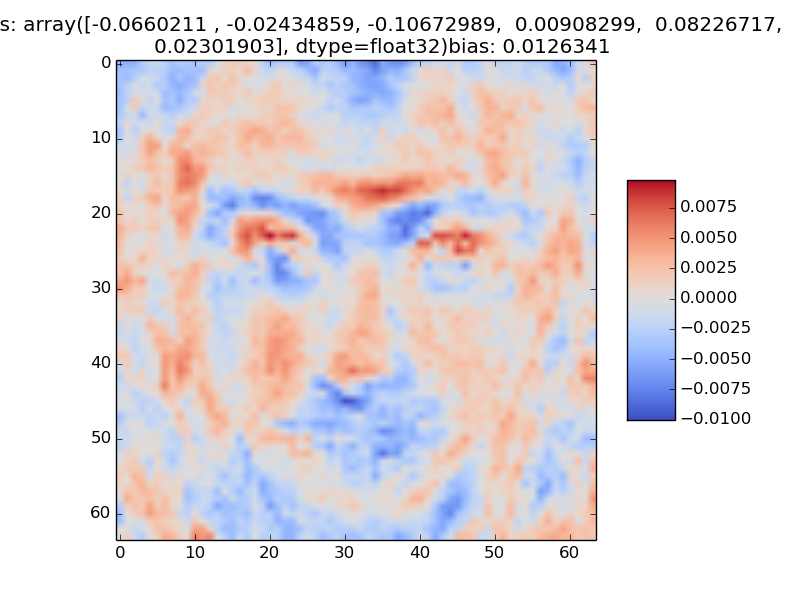

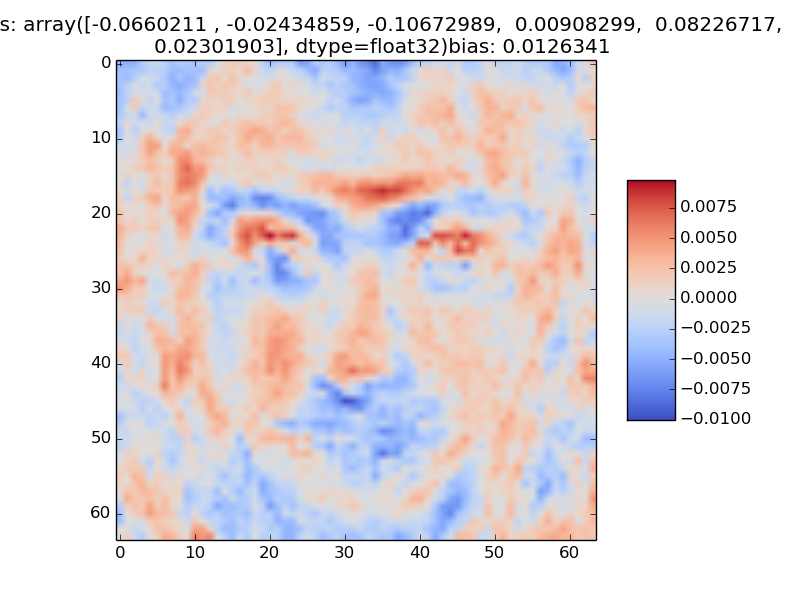

Part 3

Train two networks the way you did in Part 1, with 300 and 800 units in the hidden layer. Visualize 2 different hidden features for each of the two settings, and briefly explain why they are interesting. A sample visualization of a hidden feature, discussed in class, is shown below. (Note: you will probably need to use L2 regularization during training to obtain nice weight visualizations.)
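Here is a NumPy sketch of one way to visualize a hidden feature. It takes the incoming weights of a hidden unit, reshapes them to the input image shape, and rescales them to [0, 1] for display with an image viewer. It also shows the L2 penalty term mentioned in the note above.

- The weight-matrix layout `(n_pixels, n_hidden)` and the 32x32x3 shape in the test are assumptions.
- `most_relevant_unit` is a hypothetical helper that picks units by their outgoing weight to one actor's output. It is only one possible way to choose "interesting" units.

```python
import numpy as np


def hidden_unit_image(W0, j, img_shape):
    """Incoming weights of hidden unit j, reshaped to img_shape and rescaled to [0, 1]."""
    w = W0[:, j].reshape(img_shape)
    lo, hi = w.min(), w.max()
    return (w - lo) / (hi - lo) if hi > lo else np.zeros_like(w)


def l2_penalty(weight_matrices, lam):
    """L2 (weight decay) term lam/2 * sum of squared weights, added to the training loss."""
    return 0.5 * lam * sum(np.sum(W ** 2) for W in weight_matrices)


def most_relevant_unit(W1, actor_index):
    """Hidden unit whose outgoing weight to the given actor's output is largest."""
    return int(np.argmax(W1[:, actor_index]))
```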

Part 4

In Part 2, you used the (intermediate) outputs of AlexNet as inputs to a different network. You can view this as adding more layers to AlexNet (starting from, e.g., conv4). Modify the handout AlexNet code so that you have an implementation of a complete network whose outputs are as in Part 2. Include the modifications that you made to AlexNet in the report. In your report, include an example of using the network (i.e., feeding an input face image into a placeholder and getting the label as output).
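The idea of one complete network can be sketched as function composition: the fully-connected layers from Part 2 take the conv4 output directly, so a single call maps images to actor names. The NumPy sketch below illustrates only the composition, under these assumptions:

- `conv4_fn` is a hypothetical stand-in for the handout AlexNet graph up to conv4.
- The tanh/softmax head and the `ACTORS` placeholder names are also hypothetical.

In TensorFlow, the equivalent step is to build the new layers on the conv4 tensor inside the same graph.

```python
import numpy as np

# Hypothetical label list: replace with the six actors from Project 1.
ACTORS = ["actor_%d" % i for i in range(6)]


def head_forward(conv4, W0, b0, W1, b1):
    """Fully-connected layers stacked on conv4 output of shape (N, 13, 13, 384)."""
    x = conv4.reshape(conv4.shape[0], -1)
    h = np.tanh(x.dot(W0) + b0)
    z = h.dot(W1) + b1
    z = z - z.max(axis=1, keepdims=True)  # numerically stable softmax
    p = np.exp(z)
    return p / p.sum(axis=1, keepdims=True)


def make_full_network(conv4_fn, head_params, names):
    """Compose the (stand-in) AlexNet trunk with the new head into one image -> label map."""
    def predict(images):
        probs = head_forward(conv4_fn(images), *head_params)
        return [names[i] for i in probs.argmax(axis=1)]
    return predict
```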

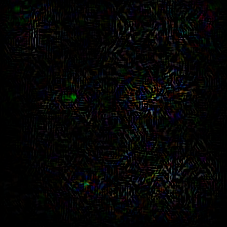

Part 5

For a specific picture of an actor other than Gerard Butler, compute and visualize the gradient of the output of the Part 4 network for that actor with respect to the input image. Visualize the positive parts of the gradient and the input image as two separate images. Include the code that you used for this part in your report. Use tf.gradients. An example with a picture of Gerard Butler is shown below. It seems that the shape of the nose and eyes, and to some extent the stubble, are strong cues for recognizing him.
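In TensorFlow 1.x, the gradient would come from something like `tf.gradients(probs[0, k], x)[0]`, where `probs` and `x` stand for your own output and placeholder tensors. The sketch below computes the same kind of quantity by hand for a small one-hidden-layer tanh/softmax network, which stands in for the Part 4 network so the result can be checked against finite differences. It then keeps only the positive part of the gradient and scales it to [0, 1] for display. The function names are hypothetical.

```python
import numpy as np


def class_prob_and_input_grad(x, W0, b0, W1, b1, k):
    """Return p_k(x) and dp_k/dx for a tanh/softmax net; x is a flattened image."""
    h = np.tanh(x.dot(W0) + b0)
    z = h.dot(W1) + b1
    p = np.exp(z - z.max())
    p /= p.sum()
    dz = p[k] * (np.eye(len(p))[k] - p)  # dp_k/dz_j = p_k (delta_kj - p_j)
    dh = W1.dot(dz)                      # back through the output layer
    dx = W0.dot(dh * (1.0 - h ** 2))     # back through tanh and the first layer
    return p[k], dx


def positive_part_image(grad, img_shape):
    """Zero out negative gradient entries and scale the rest to [0, 1]."""
    g = np.maximum(grad.reshape(img_shape), 0.0)
    return g / g.max() if g.max() > 0 else g
```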

Part 6

Train the new network constructed in Part 4 on the same training set, and with the same test and validation sets as before, to improve the performance of the system. Initialize the network with the weights that you obtained previously (otherwise, training would take far too long). In the report, display the learning curves and the final performance of the system, and compare it to the performance with the initial weights. In the report, describe any modifications that you made in order to make the system work.
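Initializing from previously obtained weights requires saving them after the earlier training and loading them when the Part 4 network is built. In TensorFlow 1.x, a loaded NumPy array can be passed as the initial value of a `tf.Variable`. The sketch below stores weights with `np.savez` and reads them back. It also shows per-parameter learning rates, an assumed and commonly used adjustment when fine-tuning: pretrained AlexNet layers change slowly, while new layers use the default rate. The parameter names (`conv4W`, `W0`) and the helper functions are hypothetical.

```python
import numpy as np


def save_weights(path, weights):
    """weights: dict mapping names to NumPy arrays (e.g. values fetched from a session)."""
    np.savez(path, **weights)


def load_weights(path):
    with np.load(path) as data:
        return {k: data[k] for k in data.files}


def sgd_update(params, grads, lrs, default_lr):
    """Gradient step with an optional per-parameter learning rate (lrs: name -> rate)."""
    for k in params:
        params[k] -= lrs.get(k, default_lr) * grads[k]
```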

Part 7

Implement guided backpropagation in TensorFlow, and display the results of applying it to the deep network you built in Part 4. (Note: tf.gradients() can help, but you have to apply it multiple times.)
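Guided backpropagation changes only the backward pass through each ReLU. Ordinary backprop keeps the incoming gradient where the forward pre-activation was positive. Guided backprop additionally zeroes entries where the incoming gradient is negative. This is why, in TensorFlow, the note suggests applying `tf.gradients` layer by layer with a mask in between. Below is a NumPy sketch of the rule for a small ReLU multilayer perceptron, standing in for the deep network. The layer format `[(W, b), ...]` and the function names are hypothetical.

```python
import numpy as np


def relu_backward(grad_out, pre_act, guided):
    """Backward pass through ReLU; guided mode also drops negative incoming gradients."""
    mask = pre_act > 0
    if guided:
        mask = mask & (grad_out > 0)
    return grad_out * mask


def input_saliency(x, layers, k, guided=True):
    """layers: [(W, b), ...] for a ReLU MLP whose last layer is linear (class scores).
    Returns d score_k / d x computed with guided (or ordinary) backpropagation."""
    pre_acts, a = [], x
    for W, b in layers[:-1]:            # forward pass, remembering ReLU inputs
        z = a.dot(W) + b
        pre_acts.append(z)
        a = np.maximum(z, 0.0)
    grad = layers[-1][0][:, k]          # d score_k / d (last hidden activation)
    for (W, _), z in zip(reversed(layers[:-1]), reversed(pre_acts)):
        grad = relu_backward(grad, z, guided)
        grad = W.dot(grad)              # back through the affine layer
    return grad
```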

The project should be implemented using Python 2. Your report should be in PDF format. You should use LaTeX to generate the report, and submit the .tex file as well. A sample template is on the course website. You will submit three files: tf_faces.py, tf_faces.tex, and tf_faces.pdf. You can submit other Python files as well: we should have all the code that's needed to run your experiments.

Reproducibility counts! We should be able to obtain all the graphs and figures in your report by running your code. Submissions that are not reproducible will not receive full marks. If your graphs/reported numbers cannot be reproduced by running the code, you may be docked up to 20%. (Of course, if the code is simply incomplete, you may lose even more.) Suggestion: if you are using randomness anywhere, use numpy.random.seed().
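A minimal way to follow the seeding suggestion is to fix every random number generator your code uses once, at start-up. The helper name `set_seeds` is hypothetical. If you also rely on TensorFlow 1.x randomness, its graph-level seed is set separately with `tf.set_random_seed`.

```python
import random

import numpy as np


def set_seeds(seed=0):
    random.seed(seed)     # Python's own RNG (e.g. random.shuffle)
    np.random.seed(seed)  # NumPy's global RNG (e.g. weight initialization)
```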

You must use LaTeX to generate the report. LaTeX is the tool used to generate virtually all technical reports and research papers in machine learning, and students report that after they get used to writing reports in LaTeX, they start using LaTeX for all their course reports. In addition, using LaTeX facilitates the production of reproducible results.

Using my code

You are free to use any of the code available from the course website.

Important: