ISBI 2013 Tutorial

8:00am-11:30am, Monday April 8th, Metropolitan 2

Deep Learning

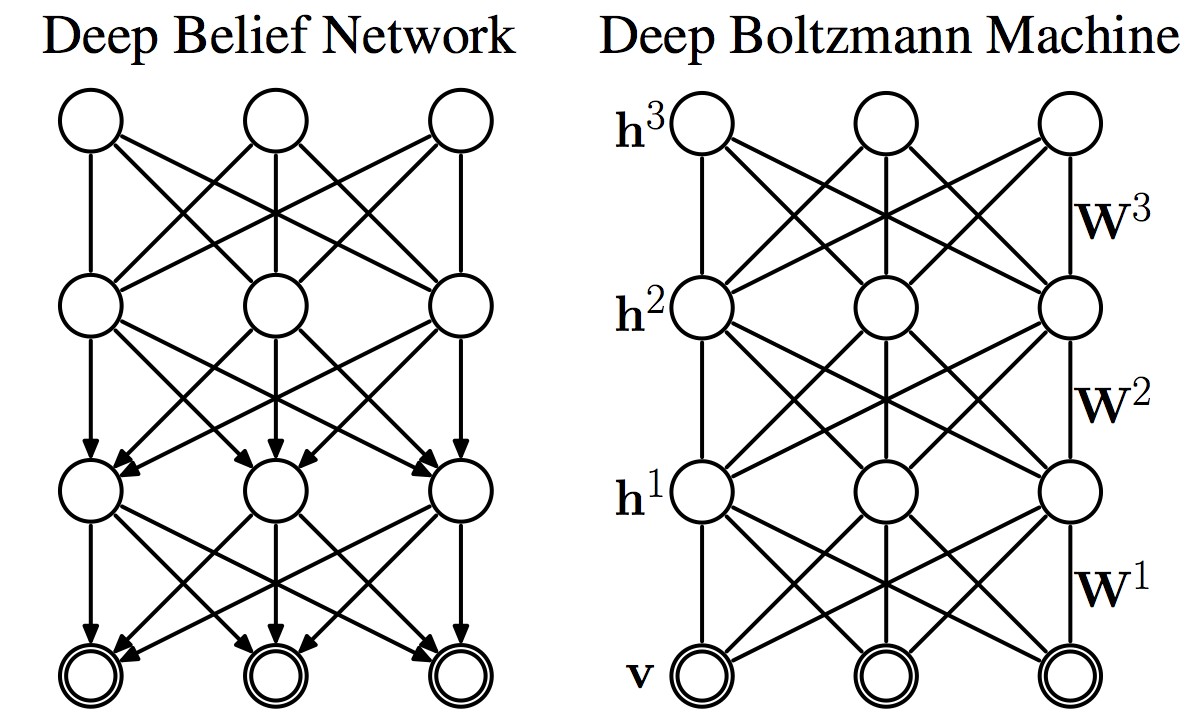

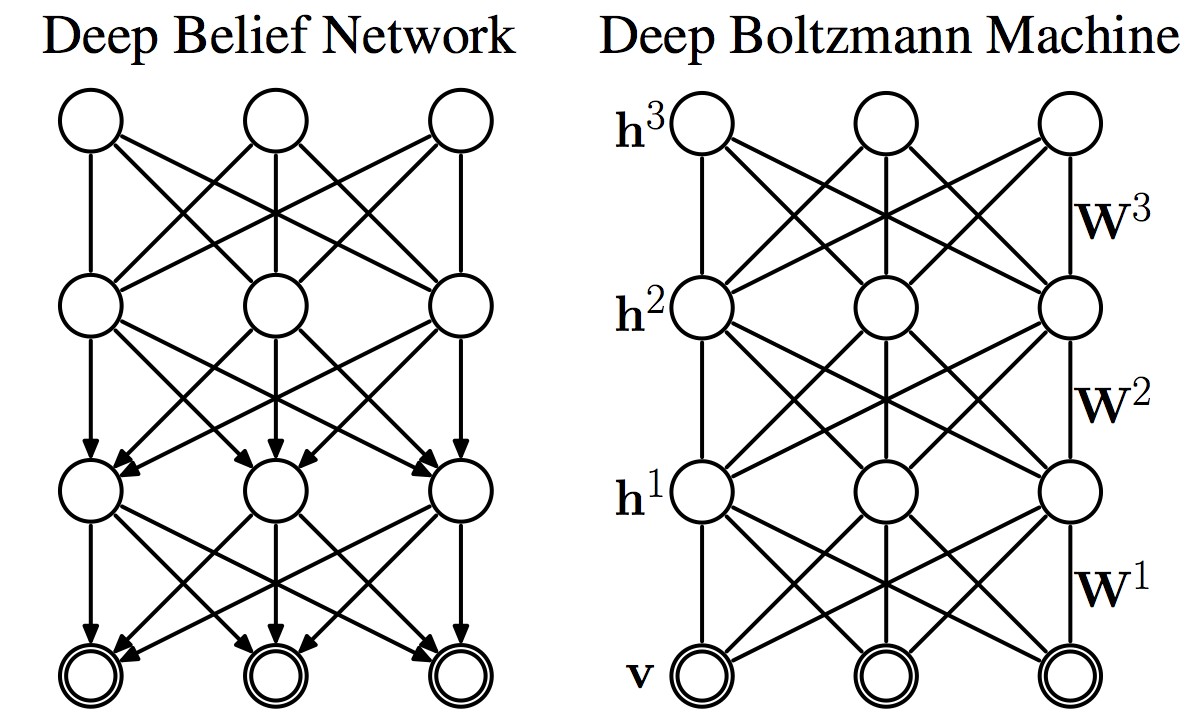

Overview

Building intelligent systems that are capable of extracting high-level representations from high-dimensional sensory data lies at the core of solving many AI-related tasks, including visual object or pattern recognition, speech perception, and language understanding. Theoretical and biological arguments strongly suggest that building such systems requires deep architectures that involve many layers of nonlinear processing. Many existing learning algorithms use shallow architectures, including neural networks with only one hidden layer, support vector machines, kernel logistic regression, and many others. The internal representations learned by such systems are necessarily simple and are incapable of extracting some types of complex structure from high-dimensional input.

In the past few years, researchers across many different communities, from applied statistics to engineering, computer science, and neuroscience, have proposed several deep (hierarchical) models that are capable of extracting useful, high-level structured representations. An important property of these models is that they can extract complex statistical dependencies from high-dimensional sensory input and efficiently learn high-level representations by re-using and combining intermediate concepts, allowing these models to generalize well across a wide variety of tasks. The learned high-level representations have been shown to give state-of-the-art results in many challenging learning problems, where data patterns often exhibit a high degree of variation, and have been successfully applied in a wide variety of application domains, including visual object recognition, information retrieval, natural language processing, and speech perception. A few notable examples of such models include Deep Belief Networks, Deep Boltzmann Machines, Deep Autoencoders, and sparse coding-based methods.

The goal of the tutorial is to introduce the recent and exciting developments of various deep learning methods to the ISBI community. The core focus will be placed on algorithms that can learn multi-layer hierarchies of representations. The tutorial will be split into two parts. The first part will provide a gentle introduction to graphical models and deep learning models. Topics will include:

The second part of the tutorial will introduce more advanced models, including Deep Boltzmann Machines and Deep Belief Networks. We will also address mathematical issues, focusing on efficient large-scale optimization methods for inference and learning, as well as training density models with intractable partition functions. The final part of the tutorial will also highlight the current performance obtained by deep approaches on various applications in image/video analysis and various other domains.

Schedule

| 8:00 - 9:30am | Deep Learning I (pdf) |
| 9:50 - 11:20am | Deep Learning II (pdf) |


Ruslan Salakhutdinov

Ruslan Salakhutdinov received his PhD in computer science from the University of Toronto in 2009. After spending two post-doctoral years at the Massachusetts Institute of Technology Artificial Intelligence Lab, he joined the University of Toronto as an Assistant Professor in the Departments of Statistics and Computer Science. Salakhutdinov's primary interests lie in artificial intelligence, machine learning, deep learning, and large-scale optimization. He is the recipient of the Sloan Research Fellowship, Microsoft Faculty Fellowship, Early Researcher Award, and Connaught New Researcher Award, and is a Scholar of the Canadian Institute for Advanced Research.