A Machine Learning-based Invariant Generation Technique

Chenguang Zhu, Arie Gurfinkel, Temesghen Kahsai, and Jorge Navas

This project is to implement the ICE decision tree learning model [1] - a machine learning-based invariant generation technique, in the SeaHorn Verification Framework [2].

Automatic invariants synthesis (pre/postconditions and loop invariants) is an essential ingredient in various program verification and analysis methodologies. The ICE decision tree learning model, proposed by P. Garg et al., is a black-box invariant generation algorithm. In this algorithm, The synthesizer of invariants are split into two parts: a program verifier as a teacher, and a decision tree component as a learner.

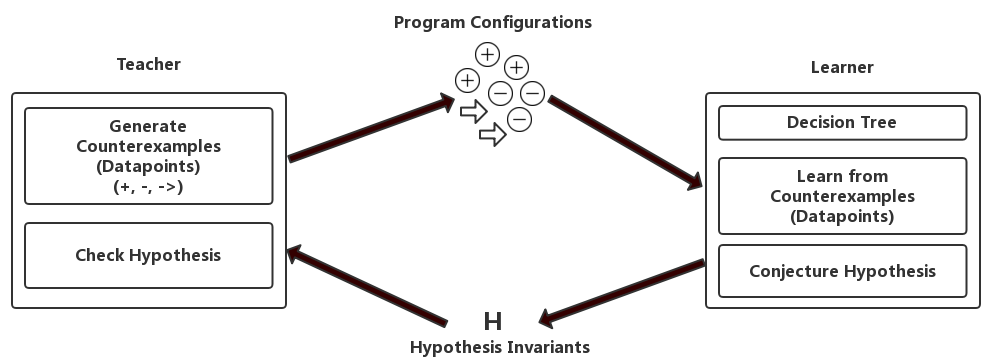

Fig. 1. High-level overview of the ICE decision tree learning invariant generation algorithm.

In each round, the learner proposes an invariant hypothesis, and the teacher checks if the invariant hypothesis is adequate to verify the program; if not, it returns concrete program configurations, which are used in the next round by the learner to refine the conjecture. The learner is completely agnostic of the program and the specification. The learner is simply constrained to learn some predicate that is consistent with the sample configurations given by teacher.

The teacher can generate three kinds of datapoints: positive examples, negative examples, and implication examples. Positive examples represent the good states that the program can reach. Negative examples represent the bad states that the program is forbidden from reaching. The implication examples are of the format (p, p'), where both p and p' are program configurations (program states). The implication examples demand that the learnt invariants satisfies the property that if p is included in H, then so is p'.

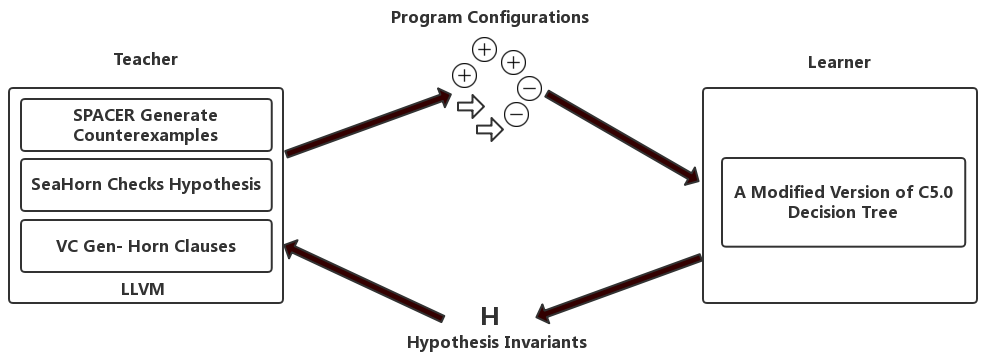

Fig. 3. An overview of the implementation of ICE in SeaHorn Framework.

We implement the overall approach in SeaHorn Verification framework. We implement the teacher as an LLVM pass. The counterexamples are generated by Spacer [3], an algorithmic framework for SMT-based software model checking. For the learner, we reuse the learner component of the work of P. Garg et al., which was implemented by modifying an open-source version of C5.0 decision tree algorithm.

References:

[1] P. Garg, D. Neider, P. Madhusudan, and D. Roth, "Learning invariants using decision trees and implication counterexamples," in Proceedings of the 43rd Annual ACM SIGPLAN-SIGACT Symposium on Principles of Programming Languages, POPL 2016.

[2] A. Gurfinkel, T. Kahsai, A. Komuravelli, and J. A. Navas, "The seahorn verification framework," in Computer Aided Verification - 27th International Conference, CAV 2015.

[3] A. Komuravelli, A. Gurfinkel, and S. Chaki, "SMT-based model checking for recursive programs," in Computer Aided Verification - 26th International Conference, CAV 2014.

SeaHorn Github repository: LINK