Visualizing Likert scale data from course evaluations


The longer you teach, the more data you get from course evaluations. At the University of Toronto, students are asked a series of questions that are mostly answered on a Likert scale. And the data is nicely summarised in a report that visualizes the data for each question in a given section. But what if you wanted to see how the data looked across courses, terms, years, decades (okay, I’m not quite there yet)?

In this post, I’m going to show you how I organized the data so that I could visualize it. This has proven useful in awards packages and dossiers where my performance is reviewed. In both these cases, some form of reflection on course evaluation data is expected. So, if you’re an instructor, or you’re just interested in how to visualize Likert scale data, I hope you find this useful!

Storing the prompts

Students are asked a variety of questions in a course evaluation. And these questions (prompts) have been conveniently categorised by the university. There are six “core” prompts (e.g., CORE1, CORE2) that always appear. On top of that, the Faculty of Arts and Science asks another three prompts (e.g., FAS1, FAS2). Finally, instructors may choose to add up to three prompts to the end of their evaluations; these are coded with a letter followed by a number (e.g., A-2 or I-5).

I decided to store these prompts in a small csv file. This way, I could look them up whenever I needed to. Here’s an excerpt of what the table looks like (I’ve skipped over things with “…” to keep the table short):

| Tag | Prompt |
|---|---|
| CORE1 | I found the course intellectually stimulating. |
| CORE2 | The course provided me with a deeper understanding of the subject matter. |
| CORE3 | The instructor (Mario Badr) created an atmosphere that was conducive to my learning. |
| CORE… | … |
| FAS1 | The instructor (Mario Badr) generated enthusiasm for learning in the course. |
| FAS… | … |
| A-2 | During the course, the course instructor (Mario Badr) was approachable when students sought guidance. |
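A minimal base-R sketch of such a lookup, assuming the csv has the two columns shown above (Tag, Prompt). The file name `prompts.csv` and the helper `lookup_prompt()` are hypothetical; three rows from the table are inlined so the snippet runs on its own.

```r
# In practice this would be read.csv("prompts.csv") (hypothetical file name);
# here three rows from the table are inlined so the example is self-contained.
prompts <- read.csv(text = 'Tag,Prompt
CORE1,"I found the course intellectually stimulating."
CORE3,"The instructor (Mario Badr) created an atmosphere that was conducive to my learning."
A-2,"During the course, the course instructor (Mario Badr) was approachable when students sought guidance."',
  stringsAsFactors = FALSE)

# Hypothetical helper: return the full prompt text for a tag such as "CORE3".
lookup_prompt <- function(tag, table = prompts) {
  row <- match(tag, table$Tag)
  if (is.na(row)) stop("Unknown prompt tag: ", tag)
  table$Prompt[row]
}

lookup_prompt("A-2")
```

One place a lookup like this could help is a plot title, e.g. `ggtitle(lookup_prompt("CORE3"))`, so the full wording of the prompt travels with the figure.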

Storing the Likert scale data

Course evaluation data is typically provided by section. For example, if I taught two sections of CSC110Y1F, then I would have two course evaluation reports. In addition, I may teach the same course across multiple terms. So, when organizing the data, I would like each row to be uniquely identified by the course (e.g., CSC110), term (e.g., 20209), section (e.g., LEC0101), and question (e.g., CORE1). This gives me data that looks like:

| Course | Term | Section | Question | 5 | 4 | 3 | 2 | 1 |
|---|---|---|---|---|---|---|---|---|
| CSC110 | 20209 | LEC0101 | CORE1 | 10 | 5 | 9 | 9 | 2 |
| CSC110 | 20209 | LEC0101 | CORE2 | 14 | 6 | 5 | 9 | 2 |
| … | … | … | … | … | … | … | … | … |
| CSC110 | 20219 | LEC0101 | CORE1 | 19 | 9 | 8 | 3 | 1 |
| … | … | … | … | … | … | … | … | … |

The numbers under columns 5, 4, 3, 2, and 1 (the 5-point Likert scale) come from the course evaluation report. The very first time I created this table, it took a while to manually extract all the data from the reports. Fortunately, after that initial investment, I am able to update it every term in a few minutes.

Those who use pivot tables, the R programming language, or something similar may already see the utility of this data layout. For example, I can use the Course column to aggregate all data (across sections and terms) for a given course. Or I can use the Question column to aggregate all data for a specific set of questions.
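As a concrete base-R sketch of aggregating by course, the snippet below rebuilds the three rows shown in the table and sums the CORE1 counts per course, pooling terms and sections. The names `likert`, `rating.cols`, and `core1` are illustrative only. `check.names = FALSE` keeps the rating columns named "5" to "1" instead of letting `data.frame()` rename them `X5` to `X1`.

```r
# The three rows from the table above; rating columns hold response counts.
likert <- data.frame(
  Course   = c("CSC110", "CSC110", "CSC110"),
  Term     = c(20209, 20209, 20219),
  Section  = c("LEC0101", "LEC0101", "LEC0101"),
  Question = c("CORE1", "CORE2", "CORE1"),
  `5` = c(10, 14, 19), `4` = c(5, 6, 9), `3` = c(9, 5, 8),
  `2` = c(9, 9, 3), `1` = c(2, 2, 1),
  check.names = FALSE
)

rating.cols <- c("5", "4", "3", "2", "1")

# Keep one prompt, then sum each rating column per course (across terms and sections).
core1 <- likert[likert$Question == "CORE1", ]
by.course <- rowsum(core1[rating.cols], group = core1$Course)
by.course  # CSC110: 29, 14, 17, 12, 3 responses for ratings 5 down to 1
```

In the tidyverse, the same step would be a `group_by(Course)` followed by `summarise()` over the rating columns.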

In the next two sections, I show how I use R to transform and visualize this data.

Transforming the data in R

To get things visualized, we’ll use R’s tidyverse. My first step is to convert the Likert scale data into the form I’ll plot later in the script. Since I typically don’t care how different sections of the same course perform, I only group by course, term, and question:

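```r
library("tidyverse")

# One row per course, term, section, and question; columns "5" to "1" hold response counts
raw.data <- read_csv("likert-data.csv")

likert.data <- raw.data %>%
  # Move the rating columns into Rating/Count pairs (long format)
  pivot_longer(-c(Course, Term, Question, Section), names_to="Rating", values_to="Count") %>%
  # Section is left out of the grouping, so counts are pooled across sections
  group_by(Course, Term, Question, Rating) %>%
  summarize(Count=sum(Count), .groups="keep")
```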

To get a better sense of what’s happening above, the pivot_longer call produces a tibble of the form:

```
  Course  Term Section Question Rating Count
  <chr>  <dbl> <chr>   <chr>    <chr>  <dbl>
1 CSC110 20209 LEC0101 CORE1    5          9
2 CSC110 20209 LEC0101 CORE1    4          5
3 CSC110 20209 LEC0101 CORE1    3          0
```

So the 5, 4, 3, 2, 1 columns from the original csv are now in the Rating column, with their corresponding values in the Count column.

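After that, we sum the Count column within each group. Because Section is not one of the grouping columns, counts from different sections of the same course, term, and question are added together.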

Now we are ready to visualize!

Visualizing the data with ggplot2

Before visualizing the data, I need to set up the colours and the rating labels for the axis. I can’t quite remember where I found this colour scheme, but the labels simply correspond to the course evaluation report.

```r
likert.colours <- c("5"="#217BBE", "4"="#6392CD", "3"="#939599", "2"="#F69274", "1"="#F37252")
standard.scale <- c("5" = "A Great Deal", "4" = "Mostly", "3" = "Moderately", "2" = "Somewhat", "1" = "Not At All")
```

Finally, I’d like to create a function that, given the Likert scale data and one specific prompt, produces a bar chart. Since a prompt may apply to several different courses, I would like to visualize each course’s data independently. To that end, I use facet_wrap so that multiple bar charts appear in a single plot.

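```r
# Draws one horizontal bar chart per term/course for a single prompt.
# Relies on likert.colours (defined above) being available.
plot_likert_question <- function(data, prompt, scale.labels) {
  data %>%
    filter(Question == prompt) %>%
    # Facet label, e.g. "20209 CSC110"
    mutate(Group = paste(Term, Course, sep=" ")) %>%
    ggplot(aes(x=Count, y=Rating, fill=Rating, label=Count)) +
    geom_col() +  # equivalent to geom_bar(stat="identity")
    facet_wrap(~Group, scales="free_x") +
    scale_y_discrete(labels=scale.labels) +
    # Match each rating to its colour by name
    scale_fill_manual(values=likert.colours, guide="none") +
    # Print the raw count just past the end of each bar
    geom_text(hjust=-0.5, size=5) +
    # Leave 15% extra room on the right so the count labels fit
    scale_x_continuous(expand = expansion(mult = c(0, .15))) +
    xlab("Number of Responses") +
    theme_bw() +
    theme(
      panel.grid=element_blank(),
      # NimbusRom is one of the font families known to R's pdf() device
      text=element_text(family="NimbusRom"),
      strip.text=element_text(face="bold", size=24, hjust=0),
      axis.ticks=element_blank(),
      axis.text.x=element_blank(),
      axis.text.y=element_text(size=24),
      axis.title.x=element_text(size=24),
      axis.title.y=element_blank()
    )
}
```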

With this function in hand, I can call it repeatedly to produce a PDF file of the bar plots for each prompt I’m interested in.

```r
ggsave("CORE3.pdf", plot_likert_question(likert.data, "CORE3", standard.scale), width=16, height=9)
# ...
```
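Since the same call is repeated for every prompt, a small loop can produce all the PDFs in one go. This is a sketch: `save_prompt_plots()` is a hypothetical helper, the list of tags is only an example, and the plotting and saving functions are passed in as arguments (the saver defaults to `ggplot2::ggsave`) so the loop itself does not depend on anything else in the post.

```r
# Hypothetical helper: save one PDF per prompt tag, named after the tag.
# plot_fun should take (data, prompt, scale.labels), like plot_likert_question();
# the save_fun default is only evaluated when no save_fun is supplied.
save_prompt_plots <- function(data, tags, plot_fun, scale.labels,
                              save_fun = ggplot2::ggsave,
                              width = 16, height = 9) {
  files <- paste0(tags, ".pdf")
  for (i in seq_along(tags)) {
    save_fun(files[i], plot_fun(data, tags[i], scale.labels),
             width = width, height = height)
  }
  invisible(files)
}

# Example call (the tags are illustrative):
# save_prompt_plots(likert.data, c("CORE1", "CORE3", "FAS1", "A-2"),
#                   plot_likert_question, standard.scale)
```

Passing the saver in as an argument also makes it easy to swap in a different output format or to dry-run the loop without writing files.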

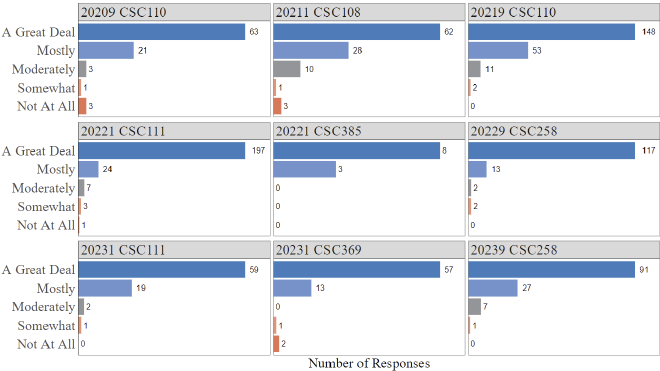

Finally, CORE3.pdf looks like:

Notice that the numbers beside each bar show the actual number of responses. This matters because course sizes vary substantially. For example, compare 20221 CSC385, where there are only 11 responses in total, with 20219 CSC110, where there are hundreds of responses.
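To check the total behind each panel, the counts can be summed per course, term, and question. The sketch below uses base R on a small long-format table shaped like likert.data, filled with the two CORE1 rows from the earlier table. The Responses column and the "(n = …)" facet label are hypothetical additions, not part of the script above.

```r
# Long-format counts shaped like likert.data (Course, Term, Question, Rating, Count),
# using the two CORE1 rows from the earlier table.
likert.long <- data.frame(
  Course   = "CSC110",
  Term     = rep(c(20209, 20219), each = 5),
  Question = "CORE1",
  Rating   = rep(c("5", "4", "3", "2", "1"), times = 2),
  Count    = c(10, 5, 9, 9, 2,  19, 9, 8, 3, 1)
)

# Total responses behind each panel of the faceted plot.
totals <- aggregate(Count ~ Course + Term + Question, data = likert.long, FUN = sum)
names(totals)[names(totals) == "Count"] <- "Responses"

# Hypothetical facet label carrying the sample size, e.g. "20209 CSC110 (n = 35)".
totals$Group <- sprintf("%d %s (n = %d)", totals$Term, totals$Course, totals$Responses)
totals
```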

Conclusion

I find the visualization above more useful than simply reporting the average score for a prompt. The pitfalls of using averages are relatively well known, but I’d like to be more concrete about why the above visualization is useful. Recall that the CORE3 prompt asks students: “The instructor (Mario Badr) created an atmosphere that was conducive to my learning.”

For example, if something differed from one course offering to another, I could talk about how that may have affected the distribution. The 20209 CSC110 offering occurred during the pandemic, but the 20219 CSC110 offering did not. Similarly, the 20221 CSC385 offering was in the “last” term affected by the pandemic, during which in-person sessions were optional until after reading week. For a lab-based course like CSC385, this caused (in my opinion) a significant drop in course enrolment. I would contrast this with the 20241 offering (not shown above), which ended with over 50 students in the course (and had a higher response rate for course evaluations).

Do you need to go to these lengths of data collection and visualization? I’ve found that, in general, the answer is no. But one place where I have found it very useful is for the “extra” questions instructors can choose to add to their course evaluations. This is because the data for those questions is, if I am correct, only visible to you (the instructor) and not, e.g., to the department. So, when submitting a dossier for review or promotion, presenting that data in a way that does not hide the underlying distribution (as a mean or median would) can be very useful when providing evidence of teaching excellence.
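To make the point about averages concrete, here is a small base-R sketch (the helper `likert_mean()` is hypothetical) that computes the mean rating from a row of counts. The first call uses the CSC110 20209 CORE1 row from the table earlier in the post; the two toy distributions are made up, not course data, and show how very different response patterns can share the same mean.

```r
# Mean rating from response counts, with counts ordered from rating 5 down to 1.
likert_mean <- function(counts, ratings = 5:1) {
  sum(counts * ratings) / sum(counts)
}

# CSC110 20209 CORE1 row from the table: 117 / 35, roughly 3.34
likert_mean(c(10, 5, 9, 9, 2))

# Made-up distributions: everyone answers "Moderately" vs. a split between the extremes
consensus <- c(0, 0, 10, 0, 0)
polarized <- c(5, 0, 0, 0, 5)
likert_mean(consensus)  # 3
likert_mean(polarized)  # 3
```

Both toy distributions report a mean of 3, yet a bar chart of each would look nothing alike; that difference is exactly what the mean alone hides.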